
Latest revision as of 02:21, 12 February 2020


Introduction to NVIDIA Jetson TX2 - VI Latency Measurement Techniques

This wiki explains the techniques used to measure the Jetson TX2 image capture latency. It assumes that the reader is familiar with the Jetson TX2 VI concepts outlined in NVIDIA Jetson TX2 - Video Input Timing Concepts.

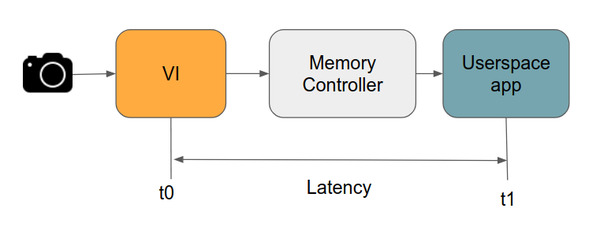

Measuring latency requires two timestamps. Usually, the first timestamp (t0) is taken when the pixels of a frame start to arrive at the VI; this is the start-of-frame (SOF). The second timestamp (t1) is usually taken when the frame becomes available to a userspace application. Sometimes it is desirable to use methods that do not depend on the VI SOF timestamp, and in those cases t0 must be obtained differently. The techniques can therefore be divided into two main categories: those that use the CHANSEL_PXL_SOF timestamp (referred to as SOF) and those that don't.

Techniques that use the SOF (Start-Of-Frame) timestamp

Whether the capture mechanism uses the ISP or not, the CHANSEL_PXL_SOF timestamp can be obtained from userspace and then used to measure the image capture latency. Consider the following image for a capture that bypasses the ISP:

CHANSEL_PXL_SOF can be used as t0, and t1 can be the timestamp taken at the instant the frame arrives in userspace, ideally using the same clock that was used to set CHANSEL_PXL_SOF. The latency is then computed as follows:

$$\text{latency} = t_1 - t_0$$

Given that the SOF timestamp embedded in the captured buffer is in nanoseconds, and that t1 can also be obtained in nanoseconds, the latency is expected to be in nanoseconds as well. However, for reasons that are not documented, the three least significant digits of the SOF timestamp available in userspace are always zero. If these last three digits are required, they can be recovered by enabling the VI traces and searching for the CHANSEL_PXL_SOF timestamp that matches the obtained SOF value. Check the Tracing section for more information on this.
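A minimal C sketch of the computation described above, assuming t0 is already a nanosecond value in the same time base as CLOCK_MONOTONIC (see the adjustments in the next subsection) and that raw RTCPU trace timestamps convert to nanoseconds by multiplying by 32, as stated in the Tracing section. The helper names (`monotonic_now_ns`, `capture_latency_ns`, `find_full_precision_sof`) are hypothetical. Extracting the raw tick values from the trace file is left out because the trace line format is not described here, and the matching step assumes the userspace SOF is truncated, not rounded, to whole microseconds.

```c
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Per the Tracing section, RTCPU trace timestamps are multiplied by 32 to
 * reach the nanosecond scale of the SOF timestamp. */
#define TSC_NS_PER_TICK 32ULL

/* t1: CLOCK_MONOTONIC in nanoseconds, read when the frame reaches userspace.
 * Returns -1 on failure. */
int64_t monotonic_now_ns(void)
{
    struct timespec ts;
    if (clock_gettime(CLOCK_MONOTONIC, &ts) != 0)
        return -1;
    return (int64_t)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* latency = t1 - t0, both in nanoseconds and in the same time base. */
int64_t capture_latency_ns(int64_t t0_ns, int64_t t1_ns)
{
    return t1_ns - t0_ns;
}

/* Hypothetical helper: return the index of the first RTCPU trace timestamp
 * (raw ticks) whose nanosecond value agrees with the userspace SOF once the
 * three least significant digits are dropped, or -1 if none matches. */
long find_full_precision_sof(uint64_t sof_ns, const uint64_t *trace_ticks,
                             size_t count)
{
    for (size_t i = 0; i < count; i++) {
        if ((trace_ticks[i] * TSC_NS_PER_TICK) / 1000 == sof_ns / 1000)
            return (long)i;
    }
    return -1;
}
```

For example, with a userspace SOF of 1000000000 ns and trace ticks {31249000, 31250010, 31260000}, the second entry matches and gives 31250010 × 32 = 1000000320 ns, so the missing digits are 320.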

Adjustment of TSC SOF timestamp to be in sync with CLOCK_MONOTONIC

The TSC clock used by the RTCPU to set the SOF timestamp runs ahead of the value returned by clock_gettime for CLOCK_MONOTONIC (a known issue observed in Jetpack 3.2.1; see Timestamping System Clock in NVIDIA Jetson TX2 - Video Input Timing Concepts). Therefore, the offset between these clocks must be compensated in order to measure the latency. These methods have the disadvantage that the error introduced by the adjustment might make the measured latency smaller or larger than the real one, so their results must be double-checked with other techniques.

Sysfs node with offset_ns

For Jetpack 4.1, NVIDIA provided a patch that exposes to userspace a sysfs node containing the offset, in nanoseconds, between the TSC clock and CLOCK_MONOTONIC. This patch can be backported to Jetpack 3.2.1, and the offset can then be read from /sys/devices/system/clocksource/clocksource0/offset_ns; it usually contains a positive value in nanoseconds. Since the TSC runs ahead of CLOCK_MONOTONIC, the adjusted TSC SOF timestamp can be computed as follows:

$$SOF_{\text{adj}} = SOF - \text{offset\_ns}$$

Since each buffer carries its own SOF timestamp, the latency of each frame can be computed from its adjusted SOF and a second timestamp taken with clock_gettime using CLOCK_MONOTONIC as soon as the frame is available to the application.
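A minimal C sketch of the offset_ns approach, assuming the sysfs node holds a single decimal integer and that the SOF reaches the application as a struct timeval, as it would in the timestamp field of a V4L2 struct v4l2_buffer. Because struct timeval only has microsecond resolution, that would also be one plausible explanation for the three zeroed digits mentioned earlier. All function names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/time.h>
#include <time.h>

#define OFFSET_NS_PATH "/sys/devices/system/clocksource/clocksource0/offset_ns"

/* Parse the decimal text of the offset_ns node. Returns 0 on success. */
int parse_offset_ns(const char *text, int64_t *offset_ns)
{
    char *end;
    errno = 0;
    long long value = strtoll(text, &end, 10);
    if (errno != 0 || end == text)
        return -1;
    *offset_ns = (int64_t)value;
    return 0;
}

/* Read offset_ns from sysfs; the node only exists with the patch applied. */
int read_offset_ns(const char *path, int64_t *offset_ns)
{
    char buf[64];
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    char *line = fgets(buf, sizeof buf, f);
    fclose(f);
    return line == NULL ? -1 : parse_offset_ns(buf, offset_ns);
}

/* SOF_adj = SOF - offset_ns, since the TSC runs ahead of CLOCK_MONOTONIC. */
int64_t adjust_sof_ns(int64_t sof_ns, int64_t offset_ns)
{
    return sof_ns - offset_ns;
}

/* Per-frame latency from a SOF stored as a struct timeval (microsecond
 * resolution) and t1 read with clock_gettime(CLOCK_MONOTONIC). */
int64_t frame_latency_ns(const struct timeval *sof, int64_t offset_ns,
                         const struct timespec *t1)
{
    int64_t sof_ns = (int64_t)sof->tv_sec * 1000000000LL
                   + (int64_t)sof->tv_usec * 1000LL;
    int64_t t1_ns = (int64_t)t1->tv_sec * 1000000000LL + t1->tv_nsec;
    return t1_ns - adjust_sof_ns(sof_ns, offset_ns);
}
```

On a patched system, `read_offset_ns(OFFSET_NS_PATH, &off)` would be called once and `frame_latency_ns()` once per frame, with t1 read right after the frame becomes available.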

Adjustment of SOF timestamp on notify code

In Jetpack 4.2, NVIDIA implemented a workaround that adjusts the SOF timestamp in the file kernel/t18x/drivers/platform/tegra/rtcpu/vi-notify.c. This workaround can be backported to Jetpack 3.2.1. Roughly, it reads the TSC twice and CLOCK_MONOTONIC once, computes an adjustment for each TSC value and, if the two adjustments differ by less than 5 µs, uses the first one to shift the SOF into sync with CLOCK_MONOTONIC.
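The steps described above can be restated in C on already-sampled values. This is not the code from vi-notify.c; it is a sketch assuming all three samples are in nanoseconds (TSC ticks multiplied by 32) and that each adjustment is the TSC value minus the CLOCK_MONOTONIC value. What the real workaround does when the check fails is not described here, so returning `false` is an assumption, and the function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Maximum allowed disagreement between the two adjustments (5 us). */
#define MAX_ADJ_DELTA_NS 5000

/* Samples taken in the order TSC, CLOCK_MONOTONIC, TSC (all in ns).
 * One adjustment is derived per TSC sample; the first is accepted only if
 * both agree within MAX_ADJ_DELTA_NS. */
bool compute_sof_adjustment(int64_t tsc_first_ns, int64_t mono_ns,
                            int64_t tsc_second_ns, int64_t *adjustment_ns)
{
    int64_t adj_first = tsc_first_ns - mono_ns;
    int64_t adj_second = tsc_second_ns - mono_ns;
    int64_t delta = adj_first > adj_second ? adj_first - adj_second
                                           : adj_second - adj_first;
    if (delta >= MAX_ADJ_DELTA_NS)
        return false;  /* samples too far apart; assumed: caller may retry */
    *adjustment_ns = adj_first;
    return true;
}

/* Shift a TSC-based SOF into the CLOCK_MONOTONIC time base. */
int64_t apply_sof_adjustment(int64_t sof_tsc_ns, int64_t adjustment_ns)
{
    return sof_tsc_ns - adjustment_ns;
}
```

Since both adjustments share the same CLOCK_MONOTONIC sample, the check effectively bounds the time elapsed between the two TSC reads.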

Obtain TSC value when v4l_dqbuf is about to return

If a V4L2 application is used as the capture mechanism, the application must queue buffers, ask the camera to start streaming, and then dequeue the buffers as they become ready with the VIDIOC_DQBUF ioctl. More information on this ioctl can be found in [1]. This ioctl is handled by the V4L2 core function v4l_dqbuf.
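A minimal userspace sketch of the dequeue step, taking a CLOCK_MONOTONIC t1 as soon as VIDIOC_DQBUF returns. It assumes a single-planar capture device (V4L2_BUF_TYPE_VIDEO_CAPTURE) whose buffers were already requested with VIDIOC_REQBUFS, queued with VIDIOC_QBUF and started with VIDIOC_STREAMON; `dequeue_and_stamp` is a hypothetical helper name.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/time.h>
#include <time.h>
#include <linux/videodev2.h>

/* Dequeue one filled buffer and record t1 with CLOCK_MONOTONIC right after
 * VIDIOC_DQBUF returns. Returns 0 on success, -1 on failure (errno set). */
int dequeue_and_stamp(int fd, enum v4l2_memory memory,
                      struct v4l2_buffer *buf, int64_t *t1_ns)
{
    struct timespec now;

    memset(buf, 0, sizeof *buf);
    buf->type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf->memory = memory;

    /* Blocks (for a blocking fd) until a filled buffer is available. */
    if (ioctl(fd, VIDIOC_DQBUF, buf) < 0)
        return -1;

    /* t1: the frame is now available to the application. */
    if (clock_gettime(CLOCK_MONOTONIC, &now) != 0)
        return -1;
    *t1_ns = (int64_t)now.tv_sec * 1000000000LL + now.tv_nsec;
    return 0;
}
```

After processing, the caller would requeue the buffer with VIDIOC_QBUF. On this path, the frame's SOF would be expected in buf->timestamp.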

The v4l_dqbuf function is defined in kernel/kernel-4.4/drivers/media/v4l2-core/v4l2-ioctl.c. It can be modified to read the TSC with arch_counter_get_cntvct() right after the buffer dequeue has been requested and before the call returns to userspace. The value returned by arch_counter_get_cntvct() must be multiplied by 32 (as in the Jetpack 4.2 workaround); this way, t1 is obtained with the same "clock" as t0. For this measurement, the Jetpack 4.2 adjustment patch must not be applied, since it adjusts only t0 and not t1.

This technique has the advantage of obtaining both t0 and t1 with the same clock as a reference. Its disadvantage is that t1 is obtained before the frame actually reaches userspace, so any delay after that point is not accounted for, which makes the estimated latency smaller than the real one.

Obtain TSC value from user space

It is possible to use mmap to read the TSC value from userspace. To do this, the CNTCV0 and CNTCV1 registers are mapped and then read when each frame arrives in the userspace application. The current TSC value, in nanoseconds, is computed as follows:

$$TSC = 32 \cdot \left(2^{32} \cdot CNTCV1 + CNTCV0\right)$$

Again, to measure latency with this technique, the patch that adjusts the SOF timestamp in the notify code must not be applied; otherwise t0 would be adjusted but t1 would not.
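A minimal C sketch of the userspace TSC read. It assumes that CNTCV0 holds the low and CNTCV1 the high 32 bits of the counter, and that the ×32 scaling used elsewhere in this document applies. The physical base address and the register offsets are not given here, so they are parameters that must be taken from the Tegra X2 technical reference manual; `map_tsc_page`, `read_tsc_ns` and the other names are hypothetical. Mapping /dev/mem requires root and may be restricted depending on the kernel configuration.

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Per this document, TSC counts are multiplied by 32 to obtain ns. */
#define TSC_NS_PER_TICK 32ULL

/* Combine the two 32-bit halves (assumed: CNTCV1 = high, CNTCV0 = low). */
uint64_t tsc_ticks(uint32_t cntcv1, uint32_t cntcv0)
{
    return ((uint64_t)cntcv1 << 32) | cntcv0;
}

uint64_t tsc_ticks_to_ns(uint64_t ticks)
{
    return ticks * TSC_NS_PER_TICK;
}

/* Read the counter through an existing mapping. The high word is read twice
 * so that a carry out of the low word between the reads is not missed.
 * cntcv0_off/cntcv1_off are byte offsets from the mapped base. */
uint64_t read_tsc_ns(const volatile uint8_t *base, size_t cntcv0_off,
                     size_t cntcv1_off)
{
    const volatile uint32_t *lo = (const volatile uint32_t *)(base + cntcv0_off);
    const volatile uint32_t *hi = (const volatile uint32_t *)(base + cntcv1_off);
    uint32_t h1, l, h2;
    do {
        h1 = *hi;
        l = *lo;
        h2 = *hi;
    } while (h1 != h2);
    return tsc_ticks_to_ns(tsc_ticks(h1, l));
}

/* Map one page of physical memory (page-aligned address) read-only through
 * /dev/mem. Returns MAP_FAILED on error. */
void *map_tsc_page(off_t phys_page)
{
    int fd = open("/dev/mem", O_RDONLY | O_SYNC);
    if (fd < 0)
        return MAP_FAILED;
    void *p = mmap(NULL, (size_t)sysconf(_SC_PAGESIZE), PROT_READ,
                   MAP_SHARED, fd, phys_page);
    close(fd);  /* the mapping stays valid after closing the fd */
    return p;
}
```

The mapping would be created once at startup, and `read_tsc_ns()` called each time a frame arrives to obtain t1.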

Techniques that do not use the SOF timestamp

Since the RTCPU firmware that sets this timestamp is closed source [2][3], we have to rely on the documentation's description of when the SOF timestamp is set and which clock is used. For this reason, it is sometimes desirable to estimate the latency without the SOF timestamp, to increase confidence in the result.

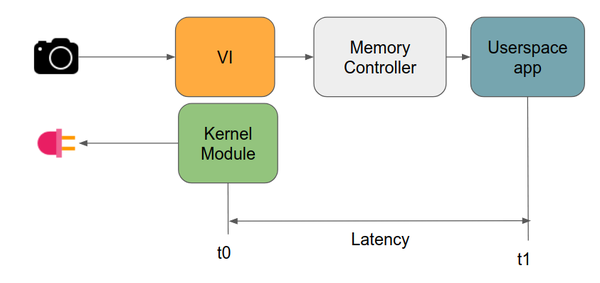

LED test

This test uses an LED connected to a TX2 GPIO. The camera must be placed in a dark box, with the LED covering as much of its field of view as possible. The camera must start capturing while the LED is still off; then a kernel module turns the LED on and records the current CLOCK_MONOTONIC timestamp, which is used as t0. Each image that arrives in userspace must also be timestamped with CLOCK_MONOTONIC at the instant it becomes available; this is t1. The userspace application can then either detect the first frame that shows the LED light, or save the images to files named after their timestamps so that they can be reviewed and the timestamp of the first image showing the LED light can be used as t1 to compute the latency. The figure below illustrates this technique:

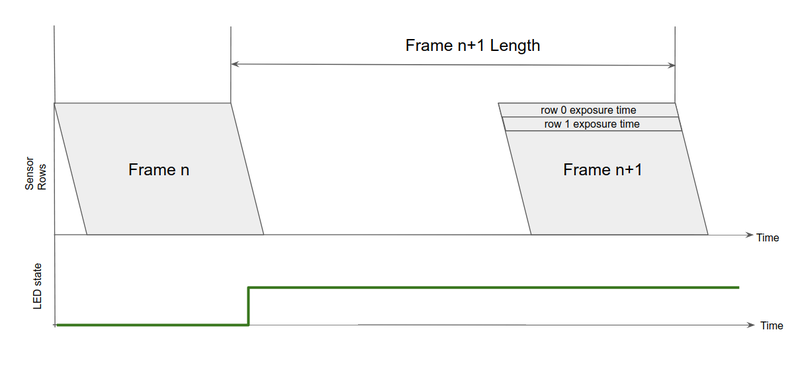
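A minimal C sketch of the userspace side of the LED test, assuming each frame is available as an 8-bit luma (Y) plane and that a frame "shows the LED" when its mean luma exceeds a threshold chosen for the setup. The mean-luma criterion, the threshold and the function names are assumptions for illustration; the kernel module that switches the LED and records t0 is not shown.

```c
#include <stddef.h>
#include <stdint.h>

/* Mean luma of an 8-bit Y plane with n pixels. */
double mean_luma(const uint8_t *y, size_t n)
{
    uint64_t sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += y[i];
    return n ? (double)sum / (double)n : 0.0;
}

/* Given per-frame mean luma and CLOCK_MONOTONIC arrival times (t1), return
 * the latency from t0 (LED switched on) to the first later frame brighter
 * than the threshold, or -1 if no frame shows the LED. */
int64_t led_latency_ns(const double *luma, const int64_t *t1_ns, size_t count,
                       int64_t t0_ns, double threshold)
{
    for (size_t i = 0; i < count; i++) {
        if (t1_ns[i] >= t0_ns && luma[i] > threshold)
            return t1_ns[i] - t0_ns;
    }
    return -1;
}
```

A threshold well above the dark-box level but below the fully lit level makes the detection less sensitive to sensor noise; partially lit frames remain the ambiguous case discussed next.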

The disadvantage of this technique is that, in the worst case, its error can be as large as the frame interval. Consider the image below. Since the LED is turned on at an arbitrary moment during image capture, this may happen right after the sensor exposure has finished, so the LED light is not captured until the next frame. Even if the LED turns on in the middle of a sensor exposure (frame n), if the exposure is about to finish, not enough LED light may be captured to identify this frame as the first one with the LED on. In that case, the first frame considered to have captured the LED on is frame n+1, which adds extra delay to the latency estimate.
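As a rough worked example of this bound, assuming a constant frame rate f, the worst-case error of the LED test is about one frame interval:

$$e_{\max} \approx T_{\text{frame}} = \frac{1}{f}, \qquad f = 30\ \text{fps} \;\Rightarrow\; e_{\max} \approx \frac{1}{30}\ \text{s} \approx 33.3\ \text{ms}$$

At 60 fps, the same bound drops to about 16.7 ms.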

Even with this disadvantage, the LED test is very useful to determine an upper bound of the latency without relying on the VI timestamps.

Tracing

It is possible to enable RTCPU tracing, as well as V4L2 tracing, in order to get additional timestamps that might be helpful. The following commands must be executed for this purpose:

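```shell
# Become root: the debugfs tracing and camrtc nodes require it
sudo su
# Enable tracing and enlarge the trace buffer
echo 1 > /sys/kernel/debug/tracing/tracing_on
echo 30720 > /sys/kernel/debug/tracing/buffer_size_kb
# Enable the RTCPU, FreeRTOS, V4L2 and videobuf2 trace events
echo 1 > /sys/kernel/debug/tracing/events/tegra_rtcpu/enable
echo 1 > /sys/kernel/debug/tracing/events/freertos/enable
echo 1 > /sys/kernel/debug/tracing/events/v4l2/enable
echo 1 > /sys/kernel/debug/tracing/events/vb2/enable
# Set the camera RTCPU (camrtc) log level to 2
echo 2 > /sys/kernel/debug/camrtc/log-level
# Clear any previous trace contents
echo > /sys/kernel/debug/tracing/trace
```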

The traces can be examined in the file /sys/kernel/debug/tracing/trace after a capture has been started. The RTCPU events in these traces carry a timestamp that must be multiplied by 32 to match the SOF timestamp and to be on the same scale as the time obtained with clock_gettime using CLOCK_MONOTONIC. Each reported event includes the CPU on which it was executed and the name of the event, along with its timestamp.

See also

1. https://linuxtv.org/downloads/v4l-dvb-apis/uapi/v4l/vidioc-qbuf.html

2. https://devtalk.nvidia.com/default/topic/1046381/jetson-tx2/v4l2_buf_flag_tstamp_src_soe-clock_realtime-timestamping-for-v4l2-frames-/post/5315242/#5315242

3. https://devtalk.nvidia.com/default/topic/1046381/jetson-tx2/v4l2_buf_flag_tstamp_src_soe-clock_realtime-timestamping-for-v4l2-frames-/post/5315602/#5315602

Related links

- NVIDIA Jetson TX2 - Video Input Timing Concepts

RidgeRun Resources

Visit our Main Website for the RidgeRun Products and Online Store. RidgeRun Engineering informations are available in RidgeRun Professional Services, RidgeRun Subscription Model and Client Engagement Process wiki pages. Please email to support@ridgerun.com for technical questions and contactus@ridgerun.com for other queries. Contact details for sponsoring the RidgeRun GStreamer projects are available in Sponsor Projects page.