GStreamer and in-band metadata

m |

(→metasrc and metasink in GStreamer Application) |

||

| (26 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| + | <seo title="GStreamer In-Band Metadata | In-band metadata | RidgeRun" titlemode="replace" keywords="GStreamer,MISP, Motion Imagery Standards Profile,in-band metadata,KLV, Key Length Value Metadata, GStreamer metadata support, meta-plugin,GStreamer Core 1.0 Pipelines,GStreamer Pipelines,mpegtsmux,GStreamer 1.0 metadata support,GStreamer 1.0" description="Discover the key to understanding GStreamer and in-band metadata with this detailed guide. Learn about MISP motion imagery standards profile at RidgeRun."></seo> | ||

| + | |||

<table> | <table> | ||

<tr> | <tr> | ||

<td><div class="clear; float:right">__TOC__</div></td> | <td><div class="clear; float:right">__TOC__</div></td> | ||

<td> | <td> | ||

| − | + | {{GStreamer debug}} | |

| − | + | <td> | |

| − | + | <center> | |

| − | < | + | {{ContactUs Button}} |

| − | + | </center> | |

| − | {{ | + | </tr> |

| − | </center> | + | </table> |

| − | == | + | == In-band metadata overview == |

| − | As video data is moving | + | As video data is moving through a GStreamer pipeline, it can be convenient to add information related to a specific frame of video, such as the GPS location, in a manner that receivers who understand how to extract metadata can access the GPS location data in a way that keeps it associated with the correct video data. In a similar fashion, if a receiver doesn't understand in-band metadata, the inclusion of such data will no affect the receiver. |

== References == | == References == | ||

| − | *http://www.gwg.nga.mil/misb/ | + | *http://www.gwg.nga.mil/misb/st_pubs.html |

| − | ** http://www.gwg.nga.mil/misb/ | + | **http://www.gwg.nga.mil/misb/trm_pubs.html |

| − | ** http://www.gwg.nga.mil/misb/ | + | **http://www.gwg.nga.mil/misb/misp_pubs.html |

| − | + | *https://gstreamer-devel.narkive.com/IcBgW3YH/accessing-non-video-streams-in-mpegts-sec-unclassified | |

| − | * | + | *https://gstreamer-devel.narkive.com/mwQV7pd9/how-to-pass-smpte-klv-metadata-in-a-pipeline |

| − | * | ||

== MISP Motion Imagery Standards Profile == | == MISP Motion Imagery Standards Profile == | ||

| Line 28: | Line 29: | ||

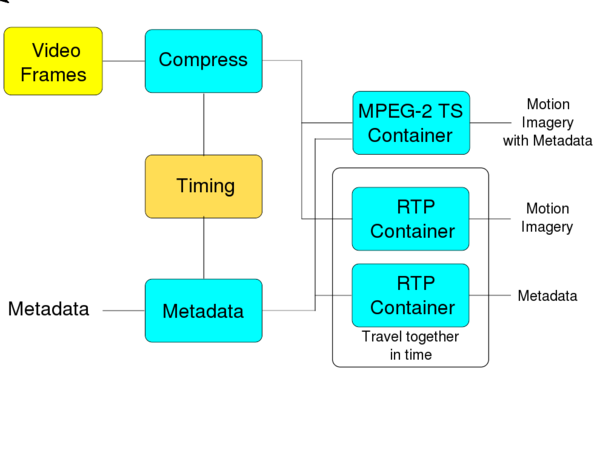

The key statement in the specification is ''Within the media container, all metadata must be in SMPTE KLV (Key-Length-Value) format.'' In MISP, the metadata is tagged with a timestamp so that it can be associated with the right video frame. This is important because GStreamer handles the association slightly differently. To understand the difference, you need to see how MISP combines video frames and metadata so you can compare it to GStreamer. The following diagram is a modified version from the MISBTRM0909 MISP spec. | The key statement in the specification is ''Within the media container, all metadata must be in SMPTE KLV (Key-Length-Value) format.'' In MISP, the metadata is tagged with a timestamp so that it can be associated with the right video frame. This is important because GStreamer handles the association slightly differently. To understand the difference, you need to see how MISP combines video frames and metadata so you can compare it to GStreamer. The following diagram is a modified version from the MISBTRM0909 MISP spec. | ||

| − | [[File:Meta-data-flow-diagram.svg|600px]] | + | [[File:Meta-data-flow-diagram.svg|600px|link=]] |

== KLV Key Length Value Metadata == | == KLV Key Length Value Metadata == | ||

| − | For this discussion, we care about time stamping and transporting KLV data, not what it means. Stated another way, KLV data is any binary data (plus a length indication) that we need to move from one end to the other while keeping the data associated with correct video frame. It is up to the user of the video encoding stream and the user of the video decoding stream to understand the meaning and encoding of the KLV data. | + | For this discussion, we care about time stamping and transporting KLV data, not what it means. Stated another way, KLV data is any binary data (plus a length indication) that we need to move from one end to the other while keeping the data associated with the correct video frame. It is up to the user of the video encoding stream and the user of the video decoding stream to understand the meaning and encoding of the KLV data. |

| − | To give a concrete KLV encoding example, here is a terse description of the SMPTE 336M-2007 Data Encoding Protocol Using Key-Length Value, which is used by MISB standard. | + | To give a concrete KLV encoding example, here is a terse description of the SMPTE 336M-2007 Data Encoding Protocol Using Key-Length Value, which is used by the MISB standard. |

{| border=2 | {| border=2 | ||

| Line 61: | Line 62: | ||

|} | |} | ||

| − | Which could be passed in as a 4 byte binary blob of 0x2A 0x02 0x00 0x03. The transport of the KLV doesn't need to know the actual encoding, just that it is 4 bytes long and the actual KLV data. | + | Which could be passed in as a 4-byte binary blob of 0x2A 0x02 0x00 0x03. The transport of the KLV doesn't need to know the actual encoding, just that it is 4 bytes long and the actual KLV data. |

| − | As another example (not MISB compliant), you could have the length be 8 and the data be 0x46 0x4F 0x4F 0x3D 0x42 0x41 0x52 0x00, which works out to be the NULL terminated ASCII string ''FOO=BAR''. The transport doesn't care about the encoding, just so both the sending and receiver are in agreement. | + | As another example (not MISB compliant), you could have the length be 8 and the data be 0x46 0x4F 0x4F 0x3D 0x42 0x41 0x52 0x00, which works out to be the NULL-terminated ASCII string ''FOO=BAR''. The transport doesn't care about the encoding, just so both the sending and receiver are in agreement. |

== Time stamps == | == Time stamps == | ||

| Line 69: | Line 70: | ||

In addition to being able to provide out-of-band information from the sender to the receiver, the information includes a timestamp that allows the data to maintain a time relationship with the video frames that also include a timestamp. Both the metadata and the video frame timestamps are generated by the same source clock. | In addition to being able to provide out-of-band information from the sender to the receiver, the information includes a timestamp that allows the data to maintain a time relationship with the video frames that also include a timestamp. Both the metadata and the video frame timestamps are generated by the same source clock. | ||

| − | Since both the flow of the metadata and the flow of the video frames can be viewed as data streaming | + | Since both the flow of the metadata and the flow of the video frames can be viewed as data streaming through a pipe, the maximum accuracy in maintaining the time relationship between the two is for both metadata and video frames to be assigned a timestamp value as soon as the data is generated. Any delay or variability in associating the timestamp with either the video frames or the metadata will add an error to the time relationship. |

== MPEG-2 Transport Stream == | == MPEG-2 Transport Stream == | ||

| − | The MPEG-2 Transport Stream protocol adds a TS header to video data, audio data, and metadata. The video data, audio data, and metadata are termed elementary streams. | + | The MPEG-2 Transport Stream protocol adds a TS header to video data, audio data, and metadata. The video data, audio data, and metadata are termed elementary streams. The TS header follows by data is called a packet. The TS header allows the receiving side to use the TS header PID (Packet ID) field to demultiplex the elementary streams. There are many other fields in a [http://en.wikipedia.org/wiki/Transport_stream#Packet TS header] beside the PID field. |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | For this discussion, the important point is the Transport Stream protocol definition already supports the notion of including timestamped metadata in a transport stream. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

=== GStreamer tags === | === GStreamer tags === | ||

| − | GStreamer supports an event called a [http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/section-events-definitions.html#section-events-tag tag]. When an element receives a tag it doesn't understand, it simply passes it downstream. | + | GStreamer supports an event called a [http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/section-events-definitions.html#section-events-tag tag]. When an element receives a tag it doesn't understand, it simply passes it downstream. Tags are either independent of the stream encoding (like the title of the song for an audio stream) or information that affects how the stream is processed (like the stream bitrate). |

* http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/chapter-advanced-tagging.html | * http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/chapter-advanced-tagging.html | ||

* http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/section-events-definitions.html | * http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/section-events-definitions.html | ||

| − | = | + | == GStreamer Core 1.0 (1.8.0) metadata support == |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | = GStreamer Core 1.0 (1.8.0) metadata support = | ||

Since release 1.6, GStreamer has included basic support for muxing KLV metadata into MPEG-TS and demuxing it from MPEG-TS, so it's not necessary to apply any patch in order to enable the elements mpegtsmux and tsdemux to accept and stream metadata, where you can verify this by running the following. Notice that tsdemux allows any capability for the source pad. | Since release 1.6, GStreamer has included basic support for muxing KLV metadata into MPEG-TS and demuxing it from MPEG-TS, so it's not necessary to apply any patch in order to enable the elements mpegtsmux and tsdemux to accept and stream metadata, where you can verify this by running the following. Notice that tsdemux allows any capability for the source pad. | ||

| Line 275: | Line 96: | ||

Long-name MPEG Transport Stream Muxer | Long-name MPEG Transport Stream Muxer | ||

Klass Codec/Muxer | Klass Codec/Muxer | ||

| − | Description Multiplexes media streams into | + | Description Multiplexes media streams into a MPEG Transport Stream |

Author Fluendo <contact@fluendo.com> | Author Fluendo <contact@fluendo.com> | ||

| Line 323: | Line 144: | ||

== The meta-plugin == | == The meta-plugin == | ||

| − | For GStreamer Core 1.0 (1.8.0) it was developed and created here at RidgeRun the meta-plugin, which contains two elements: metasrc, in order to send directly any kind of metadata over a pipeline (similar to gstreamer-0.10) and as a new feature metasrc allows sending metadata periodically and | + | For GStreamer Core 1.0 (1.8.0) it was developed and created here at RidgeRun the meta-plugin, which contains two elements: metasrc, in order to send directly any kind of metadata over a pipeline (similar to gstreamer-0.10) and as a new feature metasrc allows sending metadata periodically and provides [https://www.ridgerun.com/gstreamer-inband-metada-support GStreamer in-band metadata support] with the date-time format. On the other hand, metasink is for receiving the incoming metadata buffers. So, to be able to inject metadata to a pipeline and/or accept any metadata the meta plugin must be compiled and installed correctly. |

<pre> | <pre> | ||

| Line 344: | Line 165: | ||

+-- 2 elements | +-- 2 elements | ||

</pre> | </pre> | ||

| + | |||

| + | === metasrc and metasink in GStreamer Application === | ||

| + | |||

| + | Adding metadata support to your application involves two main tasks: on the sending side injecting metadata on the pipeline and for the receiving side extracting the metadata. In this section, we will review how to achieve this. | ||

| + | |||

| + | To inject metadata, you create a gstbuf, set the timestamp, set the caps to meta/x-klv, copy your metadata into the buffer, and push the gstbuf to the metadata sink pad on the mpegtsmux element. To make this easier you can use the RidgeRun metasrc element. Once you have the pipeline with a metasrc element, you can simply set the metasrc metadata property with the data you want to be injected. The metasrc element will create the gstbuf and set the timestamp and caps for you. You simply set the metasrc metadata element property to contain the data you want to be sent. | ||

| + | |||

| + | In python for instance, once the pipeline is created, you simply | ||

| + | |||

| + | metadata = “arbitrary data” | ||

| + | metasrc = self.pipeline.get_by_name(“metasrc") | ||

| + | metasrc.set_property(“metadata", metadata) | ||

| + | |||

| + | To extract the metadata, you branch out the demuxer to get the metadata src pad and set the caps to meta/x-klv. This pad will output the metadata in gstbufs from the mpegtsdemux element. | ||

== GStreamer Core 1.0 Pipelines examples == | == GStreamer Core 1.0 Pipelines examples == | ||

| Line 349: | Line 184: | ||

<pre> | <pre> | ||

| − | METADATA = "hello world!" | + | METADATA="hello world!" |

| − | TIME_METADATA = "The current time is: %T" | + | TIME_METADATA="The current time is: %T" |

| − | SECONDS = 1 | + | SECONDS=1 |

| − | FILE = test.ts | + | FILE=test.ts |

| − | TEXT = meta.txt | + | TEXT=meta.txt |

| − | PORT = 3001 | + | PORT=3001 |

</pre> | </pre> | ||

| Line 393: | Line 228: | ||

</pre> | </pre> | ||

| − | Coming up next, the server pipelines are H264 or H265 video encoding and streaming over the network along the | + | Coming up next, the server pipelines are H264 or H265 video encoding and streaming over the network along with the periodical metadata sending, where the client pipelines allow the H264 or H265 video decoding of the incoming stream, display the respective video, and dump the metadata content in the console standard output. |

H264-Server: | H264-Server: | ||

| Line 419: | Line 254: | ||

</pre> | </pre> | ||

| − | [[Category:GStreamer | + | {{ContactUs}} |

| + | <br> | ||

| + | |||

| + | [[Category:GStreamer]] | ||

Latest revision as of 17:05, 29 March 2023


In-band metadata overview

As video data moves through a GStreamer pipeline, it can be convenient to add information related to a specific video frame, such as the GPS location. Receivers that know how to extract metadata can then access the GPS location while keeping it associated with the correct video frame. Receivers that don't understand in-band metadata are not affected by its inclusion.

References

- http://www.gwg.nga.mil/misb/st_pubs.html
  - http://www.gwg.nga.mil/misb/trm_pubs.html
  - http://www.gwg.nga.mil/misb/misp_pubs.html

- https://gstreamer-devel.narkive.com/IcBgW3YH/accessing-non-video-streams-in-mpegts-sec-unclassified

- https://gstreamer-devel.narkive.com/mwQV7pd9/how-to-pass-smpte-klv-metadata-in-a-pipeline

MISP Motion Imagery Standards Profile

The key statement in the specification is "Within the media container, all metadata must be in SMPTE KLV (Key-Length-Value) format." In MISP, the metadata is tagged with a timestamp so that it can be associated with the right video frame. This matters because GStreamer handles the association slightly differently. To understand the difference, you need to see how MISP combines video frames and metadata so that you can compare it with GStreamer. The following diagram is a modified version of one in the MISB TRM 0909 MISP specification.

[Figure: metadata flow diagram (Meta-data-flow-diagram.svg)]

KLV Key Length Value Metadata

For this discussion, we care about timestamping and transporting KLV data, not about what it means. In other words, KLV data is any binary data (plus a length indication) that we need to move from one end to the other while keeping it associated with the correct video frame. It is up to the user of the video encoding stream and the user of the video decoding stream to understand the meaning and encoding of the KLV data.

To give a concrete KLV encoding example, here is a terse description of the SMPTE 336M-2007 Data Encoding Protocol Using Key-Length Value, which is used by the MISB standard.

| Key | Length | Value |
|---|---|---|
| Fixed length (1, 2, 4, or 16 bytes), with the size known to both sender and receiver, encoding the key. There are very specific rules on how keys are encoded and how both the sender and receiver know the meaning of the encoded key. | Fixed or variable length (1, 2, 4, or BER) indication of the number of bytes of data used to encode the value. | Variable-length value whose meaning is agreed to by both the sender and the receiver. |

As an example (from the Wikipedia KLV entry):

| Key | Length | Value (byte 1) | Value (byte 2) |
|---|---|---|---|
| 42 | 2 | 0 | 3 |

This could be passed as the 4-byte binary blob 0x2A 0x02 0x00 0x03. The transport of the KLV doesn't need to know the actual encoding. It only needs to know that the data is 4 bytes long and what those bytes are.

As another example (not MISB compliant), you could have the length be 8 and the data be 0x46 0x4F 0x4F 0x3D 0x42 0x41 0x52 0x00, which is the NULL-terminated ASCII string FOO=BAR. The transport doesn't care about the encoding, as long as the sender and the receiver agree on it.
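The encoding described above can be sketched in a few lines of Python. This is a minimal illustration, not an MISB-compliant implementation. It assumes a caller-chosen fixed key size: the 1-byte key 42 from the Wikipedia example, whereas real MISB KLV uses 16-byte Universal Label keys. It also always encodes the length with BER, using the short form below 128 bytes and the long form otherwise. The function names are hypothetical.

```python
from __future__ import annotations


def encode_ber_length(n: int) -> bytes:
    """Encode n as a BER length: short form below 128, long form otherwise."""
    if n < 0:
        raise ValueError("length must be non-negative")
    if n < 0x80:
        return bytes([n])  # short form: the byte itself is the length
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body  # long form: 0x80 | byte count, then length


def decode_ber_length(data: bytes, pos: int) -> tuple[int, int]:
    """Decode a BER length at data[pos]; return (length, offset after the field)."""
    if pos >= len(data):
        raise ValueError("missing length field")
    first = data[pos]
    if first < 0x80:
        return first, pos + 1
    count = first & 0x7F
    end = pos + 1 + count
    if count == 0 or end > len(data):
        raise ValueError("invalid or truncated BER length")
    return int.from_bytes(data[pos + 1:end], "big"), end


def encode_klv(key: bytes, value: bytes) -> bytes:
    """Concatenate the key, the BER-encoded length of value, and value."""
    return key + encode_ber_length(len(value)) + value


def decode_klv(data: bytes, key_size: int) -> list[tuple[bytes, bytes]]:
    """Split a buffer of back-to-back KLV triplets that use fixed-size keys."""
    items = []
    pos = 0
    while pos < len(data):
        key = data[pos:pos + key_size]
        if len(key) < key_size:
            raise ValueError("truncated key")
        length, pos = decode_ber_length(data, pos + key_size)
        value = data[pos:pos + length]
        if len(value) < length:
            raise ValueError("truncated value")
        items.append((key, value))
        pos += length
    return items


blob = encode_klv(bytes([42]), bytes([0, 3]))
print(blob.hex(" "))  # 2a 02 00 03
```

The FOO=BAR example round-trips the same way. With any 1-byte key, `encode_klv(key, b"FOO=BAR\x00")` produces a length byte of 8, and the transport never needs to look inside the value.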

Time stamps

In addition to carrying information that is not part of the video itself from the sender to the receiver, the metadata includes a timestamp. This timestamp lets the metadata maintain a time relationship with the video frames, which are also timestamped. Both the metadata and the video frame timestamps are generated from the same source clock.

Both the flow of metadata and the flow of video frames can be viewed as data streaming through a pipe. The time relationship between the two is therefore most accurate when the metadata and the video frames are each assigned a timestamp as soon as the data is generated. Any delay or variability in associating the timestamp with either the video frames or the metadata adds an error to the time relationship.
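To illustrate why stamping as early as possible matters, here is a hypothetical sketch. It models 30 fps video frames stamped from the same clock as the metadata, and associates a metadata packet with the latest frame whose timestamp is at or before its own. The "latest frame at or before" rule and the helper name are assumptions for illustration; a real receiver may use a different matching rule. In this model, a 40 ms delay in stamping the metadata is enough to attach it to the wrong frame.

```python
from __future__ import annotations

from bisect import bisect_right

NS_PER_SECOND = 1_000_000_000


def frame_index_for(ts_ns: int, frame_pts_ns: list[int]) -> int:
    """Return the index of the last frame with PTS <= ts_ns, or -1 if none.

    frame_pts_ns must be sorted in ascending order.
    """
    return bisect_right(frame_pts_ns, ts_ns) - 1


# 30 fps video, first 10 frames, timestamps taken from the shared source clock.
FPS = 30
frame_pts = [i * NS_PER_SECOND // FPS for i in range(10)]

generated_at = 105_000_000  # metadata produced 105 ms into the stream
late_stamp = generated_at + 40_000_000  # the same metadata stamped 40 ms late

print(frame_index_for(generated_at, frame_pts))  # 3
print(frame_index_for(late_stamp, frame_pts))  # 4
```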

MPEG-2 Transport Stream

The MPEG-2 Transport Stream protocol adds a TS header to video data, audio data, and metadata, which are termed elementary streams. A TS header followed by its data is called a packet. The receiving side uses the PID (Packet ID) field of the TS header to demultiplex the elementary streams. There are many other fields in a TS header besides the PID field (see http://en.wikipedia.org/wiki/Transport_stream#Packet).

For this discussion, the important point is that the Transport Stream protocol definition already supports including timestamped metadata in a transport stream.
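As a minimal sketch of PID-based demultiplexing, the Python below parses the fixed 4-byte header of 188-byte TS packets and groups the packets by PID. It reads only the header fields named in the comments. It ignores adaptation fields and does not parse the PES headers, which is where the presentation timestamps (PTS) of the video and metadata actually live. The PID values 0x100 and 0x101 and the helper names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def parse_ts_header(packet: bytes) -> dict[str, int]:
    """Decode the 4-byte header at the start of one TS packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("expected a 188-byte packet starting with 0x47")
    return {
        # 1 when a PES packet or PSI section starts in this packet
        "payload_unit_start": (packet[1] >> 6) & 0x01,
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],  # 13-bit packet ID
        "adaptation_field_control": (packet[3] >> 4) & 0x03,
        "continuity_counter": packet[3] & 0x0F,  # 4-bit per-PID sequence number
    }


def split_by_pid(stream: bytes) -> dict[int, list[bytes]]:
    """Group whole 188-byte packets by PID: a toy demultiplexer."""
    by_pid: dict[int, list[bytes]] = defaultdict(list)
    for offset in range(0, len(stream) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = stream[offset:offset + TS_PACKET_SIZE]
        by_pid[parse_ts_header(packet)["pid"]].append(packet)
    return dict(by_pid)


def make_packet(pid: int, cc: int = 0, start: bool = False) -> bytes:
    """Build a synthetic payload-only packet (payload filled with 0xFF)."""
    header = bytes([
        SYNC_BYTE,
        (0x40 if start else 0x00) | ((pid >> 8) & 0x1F),
        pid & 0xFF,
        0x10 | (cc & 0x0F),  # adaptation_field_control = 01: payload only
    ])
    return header + b"\xff" * (TS_PACKET_SIZE - len(header))


# Hypothetical PIDs: 0x100 for video, 0x101 for KLV metadata.
stream = make_packet(0x100, 0, True) + make_packet(0x101, 0, True) + make_packet(0x100, 1)
for pid, packets in sorted(split_by_pid(stream).items()):
    print(hex(pid), len(packets))
```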

GStreamer tags

GStreamer supports an event called a tag. When an element receives a tag it doesn't understand, it simply passes it downstream. Tags are either independent of the stream encoding (like the title of the song for an audio stream) or information that affects how the stream is processed (like the stream bitrate).

- http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/chapter-advanced-tagging.html

- http://gstreamer.freedesktop.org/data/doc/gstreamer/head/pwg/html/section-events-definitions.html

GStreamer Core 1.0 (1.8.0) metadata support

Since release 1.6, GStreamer has included basic support for muxing KLV metadata into MPEG-TS and demuxing it from MPEG-TS. No patch is needed for the mpegtsmux and tsdemux elements to accept and stream metadata. You can verify this by running gst-inspect-1.0 on each element: mpegtsmux lists meta/x-klv among its sink pad capabilities, and tsdemux allows any capability (ANY) on its source pads.

```
#gst-inspect-1.0 mpegtsmux
Factory Details:
  Rank                     primary (256)
  Long-name                MPEG Transport Stream Muxer
  Klass                    Codec/Muxer
  Description              Multiplexes media streams into a MPEG Transport Stream
  Author                   Fluendo <contact@fluendo.com>
...
Pad Templates:
  SINK template: 'sink_%d'
    Availability: On request
      Has request_new_pad() function: 0x7f6b7db1e8e0
    Capabilities:
      ...
      meta/x-klv
                 parsed: true
      ...
```

```
#gst-inspect-1.0 tsdemux
Factory Details:
  Rank                     primary (256)
  Long-name                MPEG transport stream demuxer
  Klass                    Codec/Demuxer
  Description              Demuxes MPEG2 transport streams
  Author                   Zaheer Abbas Merali <zaheerabbas at merali dot org>
                           Edward Hervey <edward.hervey@collabora.co.uk>
...
Pad Templates:
  ...
  SRC template: 'private_%04x'
    Availability: Sometimes
    Capabilities:
      ANY
  ...
```

The meta-plugin

For GStreamer Core 1.0 (1.8.0), RidgeRun developed the meta-plugin, which contains two elements. The metasrc element sends any kind of metadata directly over a pipeline (similar to gstreamer-0.10). As a new feature, it can also send metadata periodically and provides GStreamer in-band metadata support with the date-time format. The metasink element receives the incoming metadata buffers. To inject metadata into a pipeline and/or accept any metadata, the meta plugin must be compiled and installed correctly.

```
#gst-inspect-1.0 meta
Plugin Details:
  Name                     meta
  Description              Elements used to send and receive metadata
  Filename                 /home/$USER/gst_$VERSION/out/lib/gstreamer-1.0/libgstmeta.so
  Version                  1.0.0
  License                  Proprietary
  Source module            gst-plugin-meta
  Binary package           RidgeRun elements
  Origin URL               http://www.ridgerun.com

  metasrc: Metadata Source
  metasink: Metadata Sink

  2 features:
  +-- 2 elements
```

metasrc and metasink in GStreamer Application

Adding metadata support to your application involves two main tasks: injecting metadata into the pipeline on the sending side, and extracting it on the receiving side. This section reviews how to achieve both.

To inject metadata manually, you create a GstBuffer, set its timestamp, set the caps to meta/x-klv, copy your metadata into the buffer, and push the buffer to the metadata sink pad of the mpegtsmux element. The RidgeRun metasrc element makes this easier. Once the pipeline contains a metasrc element, you simply set its metadata property to the data you want to inject. metasrc then creates the buffer and sets the timestamp and caps for you.
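To make concrete what metasrc takes care of, here is a plain-Python model of a periodic metadata source, with no GStreamer involved. Each produced item pairs a payload with a timestamp in nanoseconds, mirroring the buffer and timestamp work described above. This is a model, not metasrc's implementation, and the function name is hypothetical. Expanding the payload with strftime-style specifiers is an assumption based on the %T in the TIME_METADATA pipeline example, not documented metasrc behavior. The sketch uses %H:%M:%S, the portable spelling of POSIX %T.

```python
from __future__ import annotations

import time
from typing import Iterator

NS_PER_SECOND = 1_000_000_000


def periodic_metadata(template: str, period_s: int, count: int,
                      start_epoch: float) -> Iterator[tuple[int, bytes]]:
    """Yield (pts_ns, payload) pairs, one per period.

    pts_ns plays the role of the buffer timestamp (running time from 0);
    the payload is the template expanded with strftime for the UTC
    wall-clock time at which the buffer would be produced.
    """
    for n in range(count):
        pts_ns = n * period_s * NS_PER_SECOND
        wall_clock = time.gmtime(start_epoch + n * period_s)
        payload = time.strftime(template, wall_clock).encode("utf-8")
        yield pts_ns, payload


for pts, data in periodic_metadata("The current time is: %H:%M:%S", 1, 3, 0):
    print(pts, data)
```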

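In Python, for instance, once the pipeline is created, you simply run:

```python
# Assumes the pipeline contains a metasrc element named "metasrc".
metadata = "arbitrary data"
metasrc = self.pipeline.get_by_name("metasrc")  # look up the element by name
metasrc.set_property("metadata", metadata)  # metasrc wraps this in a timestamped meta/x-klv buffer
```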
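The Python snippet assumes the metasrc element was given the name metasrc when the pipeline was built. Below is a minimal sketch of building such a pipeline description string for `Gst.parse_launch`, mirroring the H264 server pipeline in the examples section. The helper names are hypothetical. Escaping quotes with a backslash inside a double-quoted value is our reading of the gst-launch syntax, so check it against your GStreamer version. Quoting matters because metadata values such as "hello world!" contain spaces.

```python
from __future__ import annotations


def quote_gst_value(value: str) -> str:
    """Double-quote a property value, escaping backslashes and quotes."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def server_description(metadata: str, port: int, period_s: int | None = None) -> str:
    """Build an H264 + KLV server pipeline with a metasrc named "metasrc"."""
    period = f" period={period_s}" if period_s is not None else ""
    return (
        f"metasrc name=metasrc metadata={quote_gst_value(metadata)}{period} "
        f"! meta/x-klv ! mpegtsmux name=mux ! udpsink port={port} "
        "videotestsrc is-live=true "
        "! video/x-raw,format=I420,width=320,height=240,framerate=30/1 "
        "! x264enc ! mux."
    )


# With PyGObject, GStreamer and the RidgeRun meta plugin installed:
#   import gi
#   gi.require_version("Gst", "1.0")
#   from gi.repository import Gst
#   Gst.init(None)
#   pipeline = Gst.parse_launch(server_description("hello world!", 3001, period_s=1))
#   pipeline.get_by_name("metasrc").set_property("metadata", "arbitrary data")
#   pipeline.set_state(Gst.State.PLAYING)
print(server_description("hello world!", 3001, period_s=1))
```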

To extract the metadata, branch out the demuxer's metadata source pad and filter it with the meta/x-klv caps. This pad outputs the metadata as GstBuffers from the tsdemux element, which you can then consume, for example, with metasink.

GStreamer Core 1.0 Pipelines examples

Variables:

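```
METADATA="hello world!"
TIME_METADATA="The current time is: %T"
# Caution: in bash, SECONDS is a special variable. After this assignment its
# value keeps increasing by one every second, so $SECONDS only equals 1 at first.
SECONDS=1
FILE=test.ts
TEXT=meta.txt
PORT=3001
```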

The first pipeline uses the metasrc element to inject metadata into the pipeline and stores it in a text file. The second pipeline sends time-format metadata periodically and also stores it in a text file.

```
gst-launch-1.0 -e metasrc metadata="$METADATA" ! 'meta/x-klv' ! filesink sync=false async=true location=$TEXT

gst-launch-1.0 -e metasrc metadata="$TIME_METADATA" period=$SECONDS ! 'meta/x-klv' ! filesink sync=false async=true location=$TEXT
```

The next pipeline uses the metasink element to dump the metadata content of the text file to the console's standard output.

```
gst-launch-1.0 -e filesrc location=$TEXT ! 'meta/x-klv' ! metasink
```

The following pipelines show how to inject regular or time-format metadata (periodically or not) alongside an H264 or H265 video encoding, and store both the metadata and the video in the same transport stream file.

```
gst-launch-1.0 -e metasrc metadata="$METADATA" ! 'meta/x-klv' ! mpegtsmux name=mux ! filesink sync=false async=true location=$FILE videotestsrc is-live=true ! \
'video/x-raw,format=(string)I420,width=320,height=240,framerate=(fraction)30/1' ! x264enc ! mux.

gst-launch-1.0 -e metasrc metadata="$METADATA" ! 'meta/x-klv' ! mpegtsmux name=mux ! filesink sync=false async=true location=$FILE videotestsrc is-live=true ! \
'video/x-raw,format=(string)I420,width=320,height=240,framerate=(fraction)30/1' ! x265enc ! mux.
```

Using metasink, you can receive the incoming metadata and print it to the standard output alongside the H264 or H265 decoding and video display pipeline, as follows.

```
gst-launch-1.0 -e filesrc location=$FILE ! tsdemux name=demux demux. ! queue ! h264parse ! 'video/x-h264, stream-format=byte-stream, alignment=au' ! \
avdec_h264 ! xvimagesink demux. ! queue ! 'meta/x-klv' ! metasink

gst-launch-1.0 -e filesrc location=$FILE ! tsdemux name=demux demux. ! queue ! h265parse ! 'video/x-h265, stream-format=byte-stream, alignment=au' ! \
avdec_h265 ! xvimagesink demux. ! queue ! 'meta/x-klv' ! metasink
```

Next, the server pipelines encode H264 or H265 video and stream it over the network along with periodically sent metadata. The client pipelines decode the incoming H264 or H265 stream, display the video, and dump the metadata content to the console's standard output.

H264-Server:

```
gst-launch-1.0 -e metasrc period=$SECONDS metadata="$METADATA" ! 'meta/x-klv' ! mpegtsmux name=mux ! udpsink port=$PORT \
videotestsrc is-live=true ! 'video/x-raw,format=(string)I420,width=320,height=240,framerate=(fraction)30/1' ! x264enc ! mux.
```

H264-Client:

```
gst-launch-1.0 -e udpsrc port=$PORT ! 'video/mpegts, systemstream=(boolean)true, packetsize=(int)188' ! tsdemux name=demux demux. ! queue ! \
h264parse ! 'video/x-h264, stream-format=byte-stream, alignment=au' ! avdec_h264 ! xvimagesink async=false demux. ! queue ! 'meta/x-klv' ! metasink async=false
```

H265-Server:

```
gst-launch-1.0 -e metasrc period=$SECONDS metadata="$METADATA" ! 'meta/x-klv' ! mpegtsmux name=mux ! udpsink port=$PORT \
videotestsrc is-live=true ! 'video/x-raw,format=(string)I420,width=320,height=240,framerate=(fraction)30/1' ! x265enc ! mux.
```

H265-Client:

```
gst-launch-1.0 -e udpsrc port=$PORT ! 'video/mpegts, systemstream=(boolean)true, packetsize=(int)188' ! tsdemux name=demux demux. ! queue ! \
h265parse ! 'video/x-h265, stream-format=byte-stream, alignment=au' ! avdec_h265 ! xvimagesink async=false demux. ! queue ! 'meta/x-klv' ! metasink async=false
```

RidgeRun Resources

Visit our Main Website for the RidgeRun Products and Online Store. RidgeRun Engineering information is available in the RidgeRun Professional Services, RidgeRun Subscription Model and Client Engagement Process wiki pages. Please email support@ridgerun.com for technical questions and contactus@ridgerun.com for other queries. Contact details for sponsoring the RidgeRun GStreamer projects are available on the Sponsor Projects page.