GstWebRTC - MCU Demo Application


Latest revision as of 12:24, 21 April 2023


Demo Application Objective

The Multipoint Control Unit (MCU) application is a system built in Python to demonstrate the capabilities of GstRrWebRTC. The main function of the MCU is to enable multiparty conferences between different WebRTC endpoints. To do so, it establishes a bidirectional peer-to-peer connection with each device, receives their incoming streams, and returns to each endpoint the combination of the streams of the other endpoints. Figure 1 illustrates this idea.

The bidirectional channel between an endpoint and the MCU can carry audio, video, data, or any combination of them, and each endpoint may use a different configuration. For example, endpoints 1 and 2 may be configured with audio+video, while endpoint 3 may be configured with video only.
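The routing rule described above can be sketched in Python: each endpoint receives the streams of the other endpoints, and endpoints may negotiate different media. This is a minimal illustration and not code from the MCU. The `Endpoint` class and the `mix_plan` function are hypothetical, and the sketch assumes an endpoint only receives the media kinds it negotiated itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical description of one WebRTC endpoint connected to the MCU."""
    name: str
    media: frozenset  # subset of {"audio", "video", "data"}


def mix_plan(endpoints):
    """Map each endpoint name to the (sender, kind) streams it should receive.

    An endpoint never receives its own streams, and (by assumption) only
    receives media kinds that it negotiated itself.
    """
    plan = {}
    for receiver in endpoints:
        plan[receiver.name] = sorted(
            (sender.name, kind)
            for sender in endpoints
            if sender.name != receiver.name
            for kind in sender.media & receiver.media
        )
    return plan
```

In the example from the text, endpoint 3 (video only) would receive only the video of endpoints 1 and 2. Endpoint 1 would receive audio and video from endpoint 2 and video from endpoint 3.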

The MCU is composed of three different components:

- Signaler

- Mixer

- Web Server

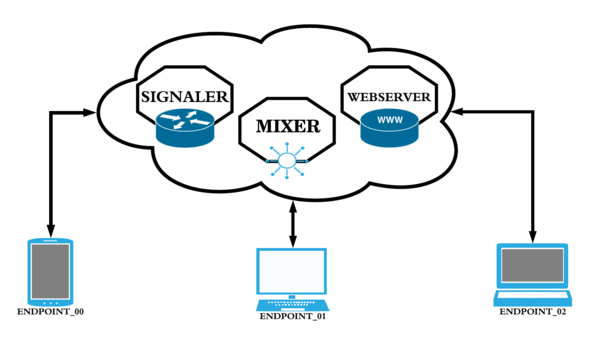

These components are completely independent, to the point that each one could be deployed on a different server if desired. Figure 2 shows this concept.

Features

The components shown in Figure 2, although independent, interact with each other. The Signaler is the service that establishes the connection between an endpoint and the MCU. This peer-to-peer connection is established with the Mixer, which combines the streams from all the other endpoints and returns the result. The Web Server hosts a web page that may be used as a browser endpoint. Each component is described in further detail below:

Signaler

The Signaler handles the WebRTC handshaking process in order to establish a connection between the endpoints and the MCU.

- Each connection can carry a set of video streams.

- Connections and disconnections are dynamic.

- Each conference occurs in a different room.

- The maximum number of participants per room is configurable.

- The room ID is configurable.

- Each connection is identified by a unique name.
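The room rules listed above can be modeled with a small bookkeeping class. These rules cover separate rooms, a configurable participant limit, unique connection names, and dynamic joins and leaves. This is a hypothetical Python sketch, not the logic in `channel_server.js`. The class and exception names are invented for illustration, and the sketch assumes that names only need to be unique within a room.

```python
class RoomFullError(Exception):
    """Raised when a room already holds its maximum number of participants."""


class DuplicateNameError(Exception):
    """Raised when a connection name is already taken in a room."""


class Signaler:
    """Hypothetical room bookkeeping; not the actual rrtc signaler."""

    def __init__(self, max_participants=4):
        self.max_participants = max_participants
        self.rooms = {}  # room id -> set of connection names

    def join(self, room_id, name):
        """Add a connection to a room and return the other participants."""
        members = self.rooms.get(room_id, set())
        if name in members:
            raise DuplicateNameError(f"{name!r} is already in room {room_id!r}")
        if len(members) >= self.max_participants:
            raise RoomFullError(f"room {room_id!r} is full")
        others = sorted(members)
        # The room is created on first join, so connections are fully dynamic.
        self.rooms.setdefault(room_id, set()).add(name)
        return others

    def leave(self, room_id, name):
        """Remove a connection; empty rooms are dropped so their ids can be reused."""
        members = self.rooms.get(room_id, set())
        members.discard(name)
        if not members:
            self.rooms.pop(room_id, None)
```

The list returned by `join` is the kind of information the web page could use for its list of connected endpoints.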

Mixer

The Mixer receives the streams provided by each endpoint, builds a combined stream for each endpoint from the streams of the other endpoints, and sends it back.

- New endpoints are added dynamically.

- An endpoint can be removed dynamically.

- The video streams are combined in a mosaic.

- The mixer can compute the mosaic configuration that best displays the videos.

- The quality of the streams can be dynamically and independently configured according to the available bandwidth of each connection.
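One simple way to pick a mosaic configuration is to use the smallest near-square grid that holds all the videos. The sketch below assumes that criterion and a 1280x720 output. The document does not say how the mixer actually decides, and `mosaic_layout` is a hypothetical helper. In a GStreamer pipeline, each tile could be applied to the `xpos`, `ypos`, `width` and `height` properties of a `compositor` sink pad, although the document does not state which mixing element the MCU uses.

```python
import math


def mosaic_layout(n, width=1280, height=720):
    """Return one (x, y, w, h) tile per video, filling a grid row by row.

    Assumed criterion: cols = ceil(sqrt(n)) and rows = ceil(n / cols), which
    gives the smallest grid with cols >= rows that fits n videos.
    """
    if n <= 0:
        return []
    cols = math.ceil(math.sqrt(n))
    rows = math.ceil(n / cols)
    tile_w, tile_h = width // cols, height // rows
    return [
        ((i % cols) * tile_w, (i // cols) * tile_h, tile_w, tile_h)
        for i in range(n)
    ]
```

For example, three participants give a 2x2 grid of 640x360 tiles with the bottom-right tile left empty. The mosaic can simply be recomputed whenever an endpoint joins or leaves.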

Web Server

The Web Server hosts a web page that can be used as an endpoint in a conference. This endpoint is not required to take part in a conference; it is just one example of a possible endpoint.

- The web page works with Google Chrome.

- A list of connected endpoints is displayed.

- A placeholder for the connection ID is provided prior to connecting.

- A placeholder for the room ID is provided.

Usage Instructions

Getting the Code

The source code of the application can be downloaded as follows:

git clone git@github.com:RidgeRun/rrtc.git

Dependencies

- GstD: GStreamer Daemon. GStreamer Daemon is gst-launch on steroids. It lets you create a GStreamer pipeline and then play, pause, change speed, skip around, and even change element parameter settings, all while the pipeline is active. GstD is used to operate on the pipelines, connecting and disconnecting them within a given session. Find more information on [this page](https://developer.ridgerun.com/wiki/index.php?title=GStreamer_Daemon).

Install GstD by following [this guide](https://developer.ridgerun.com/wiki/index.php?title=GStreamer_Daemon_-_Building_GStreamer_Daemon).

- GstInterpipe. GstInterpipe is a GStreamer plug-in that allows communication between two or more independent pipelines. It consists of two elements: interpipesink and interpipesrc. An interpipesrc connects to an interpipesink, from which it receives buffers and events. Find more information on [this page](https://developer.ridgerun.com/wiki/index.php?title=GstInterpipe).

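Install GstInterpipe by using [this guide](https://developer.ridgerun.com/wiki/index.php?title=GstInterpipe_-_Building_and_Installation_Guide).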
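The sketch below shows how GstD and GstInterpipe fit together. It generates `gst-client` command lines for a producer pipeline ending in `interpipesink` and a consumer pipeline starting with `interpipesrc`. It then re-points the consumer by using `element_set` on the `listen-to` property while both pipelines keep running. The pipeline names, the `videotestsrc` source and the `fakesink` sink are placeholders. These are not the pipelines that `rrtc_mixer` builds.

```python
def producer_pipeline(sink_name, source="videotestsrc is-live=true"):
    """Pipeline that publishes its buffers under the given interpipesink name."""
    return f"{source} ! interpipesink name={sink_name} sync=false"


def consumer_pipeline(listen_to, sink="fakesink"):
    """Pipeline whose interpipesrc (named 'src') reads from an interpipesink."""
    return f"interpipesrc name=src listen-to={listen_to} is-live=true ! {sink}"


def create_and_play(pipeline_name, description):
    """gst-client commands that create a pipeline inside GstD and start it."""
    return [
        f"gst-client pipeline_create {pipeline_name} {description}",
        f"gst-client pipeline_play {pipeline_name}",
    ]


def switch_source(pipeline_name, listen_to):
    """Re-point the consumer's interpipesrc to another interpipesink at runtime."""
    return f"gst-client element_set {pipeline_name} src listen-to {listen_to}"


commands = (
    create_and_play("cam1", producer_pipeline("cam1_out"))
    + create_and_play("cam2", producer_pipeline("cam2_out", "videotestsrc is-live=true pattern=ball"))
    + create_and_play("viewer", consumer_pipeline("cam1_out"))
    + [switch_source("viewer", "cam2_out")]
)
for line in commands:
    print(line)
```

Running the printed commands requires a running `gstd` instance and an installed GstInterpipe plug-in. Because the two pipelines are independent, the switch only changes which producer the viewer listens to; neither pipeline has to be stopped.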

Installation Instructions

- Go to the mixer folder of the downloaded code:

cd rrtc/mixer

- Install the application:

sudo ./setup.py install

- Run the application:

rrtc_mixer

- Open a new terminal and go to the signaler folder:

cd rrtc/signaler

- Install the xmlhttprequest dependency:

npm install xmlhttprequest

NOTE: This dependency needs to be installed after every new clone.

- Start the signaler:

nodejs channel_server.js [SIGNALER_PORT] http://[HOST IP]:[HOST_PORT]

For example:

nodejs channel_server.js 5050 http://localhost:8080
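If the signaler is launched from a script, it helps to build the command from the same placeholders used above. The Python sketch below is a hypothetical helper that is not part of rrtc. The `node` parameter exists because some distributions install the Node.js binary as `node` rather than `nodejs`; check which name your system uses.

```python
def signaler_argv(signaler_port, host_ip, host_port, node="nodejs",
                  script="channel_server.js"):
    """Build: nodejs channel_server.js [SIGNALER_PORT] http://[HOST IP]:[HOST_PORT]."""
    for port in (signaler_port, host_port):
        # Reject values that cannot be TCP ports before starting the server.
        if not 0 < int(port) < 65536:
            raise ValueError(f"invalid TCP port: {port}")
    return [node, script, str(signaler_port), f"http://{host_ip}:{host_port}"]
```

The list can be passed directly to `subprocess.run(signaler_argv(5050, "localhost", 8080), cwd="rrtc/signaler", check=True)`. This avoids shell quoting issues and reproduces the example command above.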

- To try the application on a single computer, just open Google Chrome normally.

- To try the application remotely from several computers on the same local network, open Google Chrome with the signaler address treated as a secure origin, as follows:

google-chrome-stable --unsafely-treat-insecure-origin-as-secure=http://[HOST IP]:[SIGNALER_PORT] --user-data-dir=[USR_DIR]&

For example:

google-chrome-stable --unsafely-treat-insecure-origin-as-secure=http://10.251.101.18:5050 --user-data-dir=/tmp/foo&
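Chrome exposes the camera and microphone only to secure contexts. `localhost` already counts as secure, so the single-computer case needs no extra flag, but a plain `http://` LAN address does not. The sketch below is a simplified, hypothetical version of that check; the browser's real rules cover more cases. It adds the `--unsafely-treat-insecure-origin-as-secure` flag from the command above only when the origin would not otherwise be trusted.

```python
import ipaddress
from urllib.parse import urlsplit


def is_potentially_trustworthy(url):
    """Simplified secure-origin test: HTTPS/WSS, localhost, or a loopback IP."""
    parts = urlsplit(url)
    if parts.scheme in ("https", "wss"):
        return True
    host = parts.hostname or ""
    if host == "localhost" or host.endswith(".localhost"):
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal and not localhost: treat as untrusted.
        return False


def chrome_argv(host_ip, signaler_port, user_data_dir):
    """Build the google-chrome-stable command used to join from another machine."""
    origin = f"http://{host_ip}:{signaler_port}"
    argv = ["google-chrome-stable", f"--user-data-dir={user_data_dir}"]
    if not is_potentially_trustworthy(origin):
        argv.insert(1, f"--unsafely-treat-insecure-origin-as-secure={origin}")
    return argv
```

With the example values, `chrome_argv("10.251.101.18", 5050, "/tmp/foo")` yields the same arguments as the command above, minus the trailing `&`. To keep Chrome in the background from Python, pass the list to `subprocess.Popen`.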

- Enter a session ID in the blank field and press the Join and Call buttons.

- Open new tabs in the same browser, enter a different session ID in each one, and press the Join and Call buttons on each tab.

- You should be able to see the video streams of all the participants in the call.

- Press the Exit button to finish your participation in the call.

Promotional Video

The promotional video of the demo (GstRRWebRTC-Demo) is available at https://vimeo.com/327779248.