Embedded GStreamer Performance Tuning

Revision as of 13:02, 22 April 2013

PAGE UNDER CONSTRUCTION

Overview

This wiki is a guide on how to tune the performance of a GStreamer pipeline on a DM368 SoC running RidgeRun's SDK. It is based on an example case where 1080p video is recorded from a camera at 30 fps using an H.264 encoder at a 12 Mbps bitrate.


Introduction

Performance is a ghost that always haunts us when dealing with real-time tasks. When these tasks run on an embedded system, where resources are typically limited, tuning the system to meet the real-time constraints can become quite a tricky challenge. Multimedia handling is a very common field where time and resource constraints are likely to hinder the desired performance, especially when dealing with live video and/or audio capture.

This wiki is a guide on how to tune the performance of a GStreamer pipeline on a DM368 SoC running RidgeRun's SDK. However, the tricks shown here should be easy to extrapolate to other development environments.

Note: The term real-time system refers here to a system that must operate under real-time constraints, not to a system scheduled by a real-time scheduling algorithm.

The Example

To guide the reader through the different tuning stages, a simple example use case is used. The goal of this example is to record video coming from a camera onto an SD card attached to our embedded system. The system must fulfill the following requirements/constraints:

- 1920x1080 Full HD resolution.

- 30 frames per second.

- H.264 encoded at a 12 Mbps bitrate.

- Write the file to an SD card.

Besides these explicit requirements, it is implicit that the system must operate in real time, since we are capturing from a live camera. All that said, let's put on our gloves and helmet and get to work.

The Sharp Cliff of Failure

But what is our actual time constraint? Among the requirements, the only temporal one is the rate of 30 frames per second, which translates into a per-frame time budget T:

$$T = \frac{1\ \mathrm{s}}{30\ \text{frames}} \approx 0.03333\ \mathrm{s} \approx 33.33\ \mathrm{ms}$$

This means that we have 33.33 ms to process each frame, or we won't be able to keep up with that frame rate. This leads us to our first rule of thumb:

You will be as slow as your slowest element.

If our most time-consuming element can't beat the 33.33 ms deadline, the pipeline cannot be scheduled to work at this frame rate.
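The budget arithmetic and the slowest-element rule can be checked with a small POSIX shell sketch. `frame_budget_ms` and `slowest_element` are hypothetical helper names, not part of RidgeRun's SDK or GstTuner; they assume per-element mean times in milliseconds are fed as `element mean` lines, for example copied by hand from a profiling table.

```shell
#!/bin/sh
# Hypothetical helpers (not part of the SDK): per-frame time budget and
# slowest-element check for a target frame rate.

# Print the per-frame time budget T in ms for a frame rate given in fps.
frame_budget_ms() {
    awk -v fps="$1" 'BEGIN { printf "%.2f\n", 1000 / fps }'
}

# Read "element mean_ms" lines on stdin (rows without a numeric mean,
# such as "---", are skipped) and report whether the slowest element
# fits within the budget for the frame rate given as $1.
slowest_element() {
    awk -v fps="$1" '
        BEGIN { max = -1 }
        ($2 ~ /^[0-9.]+$/) && ($2 + 0 > max) { max = $2 + 0; name = $1 }
        END {
            budget = 1000 / fps
            verdict = (max <= budget) ? "fits" : "too slow"
            printf "%s %.2f ms (budget %.2f ms): %s\n", name, max, budget, verdict
        }'
}
```

For example, `printf 'dmaiaccel 46.53\ndmaienc_h264 61.68\nqtmux 1.02\n' | slowest_element 30` prints `dmaienc_h264 61.68 ms (budget 33.33 ms): too slow`. Note that this only checks the slowest element; it says nothing yet about how the elements' times add up when they run in the same thread.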

Initial Pipeline Profiling

The initial pipeline to be used to capture and record the video is the following:

    gst-launch -e \
        v4l2src input-src=camera ! \
        video/x-raw-yuv, width=1920, height=1088 ! \
        dmaiaccel ! \
        dmaiperf print-arm-load=true ! \
        dmaienc_h264 targetbitrate=12000000 maxbitrate=12000000 ! \
        qtmux ! \
        filesink location=/media/sdcard/recording.mp4

Note that there are no queues in this pipeline. Although our gut screams for queues, let's first learn how to place them properly in the pipeline: an excess of queues can be harmful rather than helpful.

Start without queues and profile the pipeline to find the sweet spots

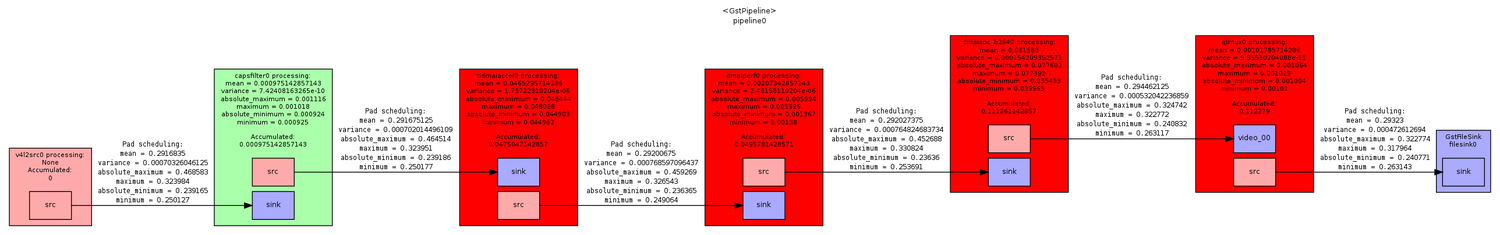

Figure 1 shows the profiling diagram of the above pipeline.

The profiling diagram was generated with GstTuner, RidgeRun's proprietary profiling tool. Contact support@ridgerun.com for more information about it.

The diagram in Figure 1 shows the current pipeline annotated with many measurements. For now, we only pay attention to the mean and the accumulated processing time of each element. The mean is the average time the element needs to process a buffer. The accumulated time is the sum of the means of the element and of all the elements upstream of it; it represents the actual time needed to handle a buffer, since the process function of each element is chained into the next one. Table 1 summarizes this information for easier reading.

| Element | Mean (ms) | Accumulated (ms) |
|---|---|---|
| v4l2src | --- | --- |
| capsfilter | 0.98 | 0.98 |
| dmaiaccel | 46.53 | 47.50 |
| dmaiperf | 2.07 | 49.58 |
| dmaienc_h264 | 61.68 | 111.26 |
| qtmux | 1.02 | 112.28 |
| filesink | --- | --- |

    INFO: Timestamp: 1:33:29.215087770; bps: 11151032; fps: 3.55; CPU: 88;
    INFO: Timestamp: 1:33:30.443846226; bps: 12758306; fps: 4.07; CPU: 87;
    INFO: Timestamp: 1:33:31.665201188; bps: 12831449; fps: 4.09; CPU: 87;
    INFO: Timestamp: 1:33:32.888005101; bps: 12820949; fps: 4.09; CPU: 87;

Table 1 shows that our slowest element is the encoder, with a processing time of 61.68 ms, so with this configuration the pipeline cannot be scheduled at 30 fps; dmaiperf confirms it, reporting only about 4 fps. It is also evident that with all the elements combined it would take 112.28 ms to process a single buffer, which is far above our time constraint. The places where the time limit has been violated are marked in red on both the diagram and the table. Let's set some properties to speed up the pipeline.
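Since the accumulated column is a running sum of the means along the chain of process functions, the check that marks elements in red can be sketched the same way. `accumulate` is a hypothetical helper; it assumes all listed elements run in a single streaming thread (no queues) and reads `element mean_ms` lines on stdin.

```shell
#!/bin/sh
# Hypothetical helper: running sum of per-element mean times along a
# single streaming thread, flagging where the frame budget is exceeded.
# $1 = target frame rate in fps; stdin = "element mean_ms" lines in
# pipeline order (rows without a numeric mean, such as "---", are skipped).
accumulate() {
    awk -v fps="$1" '
        BEGIN { budget = 1000 / fps }
        $2 ~ /^[0-9.]+$/ {
            acc += $2                          # chained processing time so far
            status = (acc > budget) ? "OVER" : "ok"
            printf "%s %.2f %.2f %s\n", $1, $2, acc, status
        }'
}
```

Fed with the Table 1 means, it marks dmaiaccel and every element after it as `OVER`. The sums can differ from the table by 0.01 (47.51 instead of 47.50 for dmaiaccel) because the table's means are themselves rounded.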

Setting properties

Our initial pipeline was not enough to fulfill the real-time requirements. However, we can still configure the elements in the pipeline to gain some processing speed.

Start by tuning the properties of the different elements

Table 2 shows some recommended element configurations and their descriptions. Keep in mind that although these configurations are recommended, they might not be ideal for every scenario.

| Element | Property | Description |
|---|---|---|
| v4l2src | always-copy=false | Use the original v4l2 video buffers instead of copying them into new buffers. Besides avoiding that memcpy, the video buffers are contiguous, which avoids another memcpy in dmaiaccel. |
| dmaienc_h264 | encodingpreset=2 | Configure the encoder to run at high speed. |
| dmaienc_h264 | single-nalu=true | Encode the whole image into a single NAL unit, to avoid walking through the buffer. |
| dmaienc_h264 | ratecontrol=2 | Use variable bitrate coding. |
| filesink | enable-last-buffer=false | Tell the sink that it does not need to keep a reference to the last buffer. |

The pipeline now looks like the following:

    gst-launch -e \
        v4l2src always-copy=false input-src=camera ! \
        video/x-raw-yuv, width=1920, height=1088 ! \
        dmaiaccel ! \
        dmaiperf print-arm-load=true ! \
        dmaienc_h264 encodingpreset=2 ratecontrol=2 single-nalu=true targetbitrate=12000000 maxbitrate=12000000 ! \
        qtmux ! \
        filesink location=/media/sdcard/recording.mp4 enable-last-buffer=false

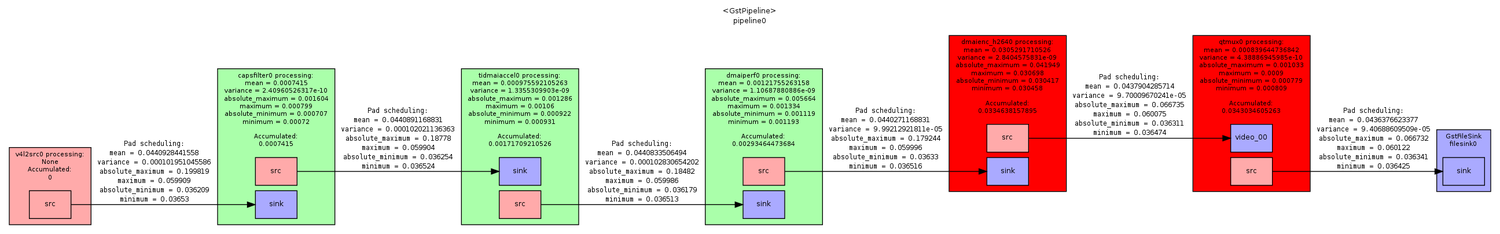

Figure 2 shows the diagram of the profiled pipeline with the respective properties set.

As before, Table 3 summarizes the important information at this stage of the tuning.

| Element | Mean (ms) | Accumulated (ms) |
|---|---|---|
| v4l2src | --- | --- |
| capsfilter | 0.74 | 0.74 |
| dmaiaccel | 0.98 | 1.71 |
| dmaiperf | 1.22 | 2.93 |
| dmaienc_h264 | 30.53 | 33.46 |
| qtmux | 0.84 | 34.30 |
| filesink | --- | --- |

    INFO: Timestamp: 1:35:02.193290601; bps: 64009961; fps: 20.42; CPU: 33;
    INFO: Timestamp: 1:35:03.194402098; bps: 75127432; fps: 23.97; CPU: 20;
    INFO: Timestamp: 1:35:04.252337265; bps: 74111636; fps: 23.65; CPU: 17;
    INFO: Timestamp: 1:35:05.255173848; bps: 75052455; fps: 23.95; CPU: 16;

Note how much our timings have improved: dmaiperf now reports around 24 fps. Our most time-consuming element is still the encoder, now with a processing time of 30.53 ms, which means that the pipeline should be able to run at 30 fps. Unfortunately, the accumulated time exceeds our limit (33.46 ms) once the buffer goes through the encoder. We need to break the processing chain before the buffer enters the encoder.

Parallelizing with Queues

In the diagram in Figure 2 and the stats in Table 3, the element at which the accumulated time exceeds our time constraint of T = 33.33 ms is shown in red. We must break the processing chain before the buffer is pushed into the encoder, which is achieved by placing a queue at that location. The queue receives the buffer from dmaiperf and returns immediately, while a separate thread pushes the buffer into the encoder. In effect, the elements before and after the queue process buffers in parallel. Also notice that the encoder's processing time is around 30 ms, too close to our limit, so it is also a good idea to put a queue after the encoder and let the rest of the pipeline run on a different thread.

Place queues at the spots where T is exceeded or nearly exceeded.
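This rule can be sketched by restarting the running sum at every queue, under the simplifying assumption that each queue starts a new streaming thread and ignoring any contention between threads for the CPU. `thread_budget` is a hypothetical helper; a queue whose cost has not been measured yet can be given as `queue ---`.

```shell
#!/bin/sh
# Hypothetical helper: per-thread accumulated time when queues split the
# pipeline into separate streaming threads.
# $1 = target frame rate in fps; stdin = "element mean_ms" lines in
# pipeline order. A row whose name starts with "queue" opens a new thread,
# and its own mean (if numeric) is charged to that new thread.
thread_budget() {
    awk -v fps="$1" '
        BEGIN { budget = 1000 / fps; n = 1; total[1] = 0 }
        $1 ~ /^queue/ && total[n] > 0 { n++; total[n] = 0 }
        $2 ~ /^[0-9.]+$/ { total[n] += $2 }
        END {
            for (t = 1; t <= n; t++)
                printf "thread %d: %.2f ms %s\n", t, total[t],
                       (total[t] > budget) ? "OVER" : "ok"
        }'
}
```

With the Table 3 means and two not-yet-measured queues placed before and after the encoder, it reports 2.94, 30.53 and 0.84 ms for the three threads, all within budget. Fed with the later measurements that include the queues' own cost (capsfilter 1.67, dmaiaccel 1.06, dmaiperf 1.35, queue 1.23, dmaienc_h264 30.87, queue 1.19, qtmux 0.84), it gives 4.08, 32.10 and 2.03 ms, within 0.01 ms of the profiler's rounded figures.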

The pipeline now looks like the following:

    gst-launch -e \
        v4l2src always-copy=false input-src=camera ! \
        video/x-raw-yuv, width=1920, height=1088 ! \
        dmaiaccel ! \
        dmaiperf print-arm-load=true ! \
        queue ! \
        dmaienc_h264 encodingpreset=2 ratecontrol=2 single-nalu=true targetbitrate=12000000 maxbitrate=12000000 ! \
        queue ! \
        qtmux ! \
        filesink location=/media/sdcard/recording.mp4 enable-last-buffer=false

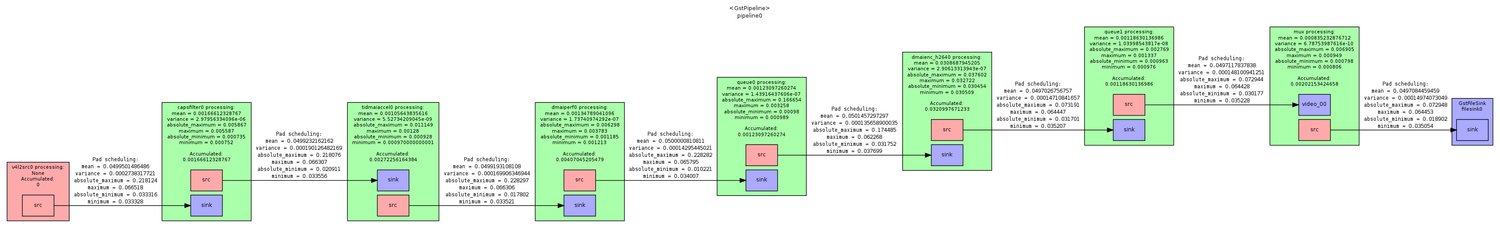

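Figure 3 shows the profiling diagram with the queues in place, and Table 4 summarizes the stats. Note that the accumulated time restarts at each queue, since each queue pushes buffers downstream from its own thread.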

| Element | Mean (ms) | Accumulated (ms) |
|---|---|---|
| v4l2src | --- | --- |
| capsfilter | 1.67 | 1.67 |
| dmaiaccel | 1.06 | 2.72 |
| dmaiperf | 1.35 | 4.07 |
| queue | 1.23 | 1.23 |
| dmaienc_h264 | 30.87 | 32.10 |
| queue | 1.19 | 1.19 |
| qtmux | 0.84 | 2.02 |
| filesink | --- | --- |

    INFO: Timestamp: 1:35:49.495732469; bps: 70679097; fps: 22.55; CPU: 21;
    INFO: Timestamp: 1:35:50.499756552; bps: 81144860; fps: 25.89; CPU: 20;
    INFO: Timestamp: 1:35:51.525898595; bps: 76350877; fps: 24.36; CPU: 21;
    INFO: Timestamp: 1:35:52.527519641; bps: 78257742; fps: 24.97; CPU: 37;

We have gotten rid of all the red blocks, so the pipeline should now be able to run at 30 fps. Yet dmaiperf still reports roughly 22 to 26 fps. Why aren't we able to reach 30 fps? There is still work to do.
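To quantify how far we still are from the goal, the `fps:` fields printed by dmaiperf can be averaged. This sketch assumes the `INFO: ...; fps: N; ...` format shown in the logs above, with `name: value` fields separated by semicolons; `mean_fps` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: average the "fps:" fields of dmaiperf output read on
# stdin. Fields are assumed to be "name: value" pairs separated by ";", so
# it works whether the INFO records are on separate lines or run together.
mean_fps() {
    tr ';' '\n' | awk -F': ' '
        $1 ~ /^[ \t]*fps$/ { sum += $2; n++ }
        END {
            if (n > 0)
                printf "%.2f\n", sum / n
            else
                print "no fps samples"
        }'
}
```

Applied to the four log lines above, it prints `24.44`, about 81% of the 30 fps target.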

Finding the Buffer Leak

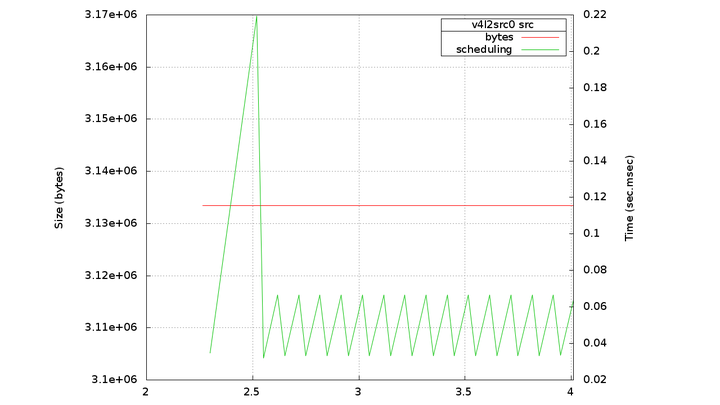

So far, we have boosted performance by setting the properties appropriately and placing queues where necessary. Still, we aren't able to achieve our goal of 30 fps. To find out what is going on, a more specific analysis is needed. Figure 4 shows the scheduling information of the source pad of the v4l2src element. These measurements express the time elapsed between two consecutive buffer pushes.

Observing the steady state, say after 3 seconds, the interval between buffer pushes jumps between 33.33 ms and 66.67 ms. It is no coincidence that the upper value is exactly double the lower one. To understand what is happening, one must first know that v4l2src has a pool with a fixed number of buffers, which are recycled throughout the pipeline's lifetime. This means that if the pool runs out of buffers, v4l2src must wait until a buffer is freed before it can write a new image into it.

If v4l2 buffers are not freed fast enough, you will lose images while waiting for them.

By default, v4l2src creates a pool of 3 buffers. Figure 4 makes it clear that these 3 buffers are not freed fast enough to sustain 30 fps: every so often no buffer is available when a frame arrives, so that image is lost and the next one is grabbed 33.33 ms later, for a total interval of 66.67 ms.
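A toy model makes this effect easier to see. It assumes, hypothetically, that the sensor delivers a frame every 1/fps seconds, that every captured buffer stays downstream for a constant hold time before returning to the pool, and that a frame arriving while no buffer is free is simply lost. Real hold times vary from frame to frame and this is not how the v4l2src driver is implemented; `simulate_pool` and the 116 ms hold time are made up for illustration.

```shell
#!/bin/sh
# Toy model of a fixed-size capture buffer pool (hypothetical, for
# illustration only). Assumptions:
#   - the sensor delivers a frame every 1000/fps ms;
#   - each captured buffer is held downstream for a constant HOLD_MS
#     before it returns to the pool;
#   - a frame that arrives while no buffer is free is lost.
# Usage: simulate_pool FPS POOL_SIZE HOLD_MS TICKS
simulate_pool() {
    awk -v fps="$1" -v nbuf="$2" -v hold="$3" -v ticks="$4" 'BEGIN {
        period = 1000 / fps                  # ms between sensor frames
        for (i = 1; i <= nbuf; i++)
            free_at[i] = 0                   # all buffers start in the pool
        captured = 0
        missed = 0
        for (t = 0; t < ticks; t++) {
            now = t * period
            got = 0
            for (i = 1; i <= nbuf; i++) {
                if (free_at[i] <= now) {     # buffer is back in the pool
                    free_at[i] = now + hold  # fill it and send it downstream
                    got = 1
                    break
                }
            }
            if (got)
                captured++
            else
                missed++
        }
        printf "captured=%d missed=%d fps=%.2f\n", captured, missed, captured * fps / ticks
    }'
}
```

With 3 buffers and a 116 ms hold time, `simulate_pool 30 3 116 300` loses every fourth frame (push intervals of 33.33, 33.33 and 66.67 ms) and prints `captured=225 missed=75 fps=22.50`; with 6 buffers, `simulate_pool 30 6 116 300` prints `captured=300 missed=0 fps=30.00`. In this model a larger pool helps whenever the hold time exceeds the pool size times the frame period.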

The fix is easy enough: increase the size of the v4l2src buffer pool through its queue-size property and expect better results. With a pool of 6 buffers, the pipeline looks like:

    gst-launch -e \
        v4l2src queue-size=6 always-copy=false input-src=camera ! \
        video/x-raw-yuv, width=1920, height=1088 ! \
        dmaiaccel ! \
        dmaiperf print-arm-load=true ! \
        queue ! \
        dmaienc_h264 encodingpreset=2 ratecontrol=2 single-nalu=true targetbitrate=12000000 maxbitrate=12000000 ! \
        queue ! \
        qtmux ! \
        filesink location=/media/sdcard/recording.mp4 enable-last-buffer=false