RidgeRun Toolbox Demo

Introduction

RidgeRun has developed several GStreamer plugins. This wiki presents four test scripts that can be used as a quick demo of their capabilities.

Dependencies

This wiki assumes all the following plugins and dependencies are successfully installed:

Summary

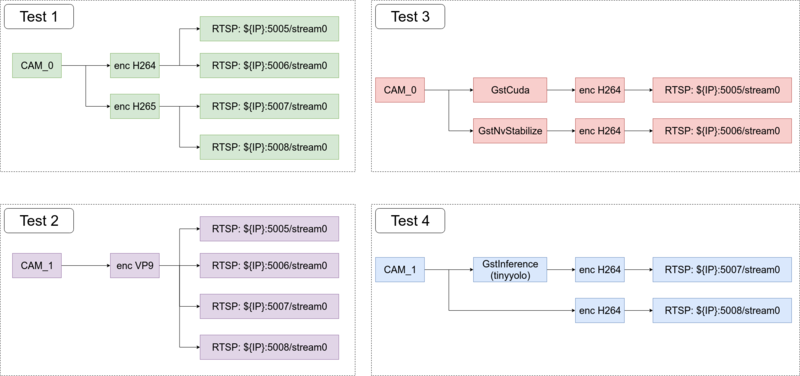

The following image shows the data path for some of the most common use cases that will be presented in this demo:

Visualization

To view the streams produced by each pipeline, you can use a media player such as vlc or totem. For example, to view the output of the H264/H265 pipelines you can use:

vlc rtsp://${HOST_IP}:6005/stream0

To view the output buffers from VP9/VP8 pipelines you can use:

totem rtsp://${HOST_IP}:6005/stream0
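The test scripts below publish their streams on ports 6005 to 6008 under the /stream0 mapping, so only the port changes from one URL to the next. A minimal sketch of a helper that builds those URLs follows. The function name rtsp_url and the 127.0.0.1 fallback for HOST_IP are illustrative assumptions and are not part of the RidgeRun scripts.

```shell
# Hypothetical helper: print the RTSP URL for a given host and port.
# The mapping argument is optional and defaults to /stream0, the mapping
# set in the caps of the demo scripts.
rtsp_url() {
    host=$1
    port=$2
    mapping=${3:-/stream0}
    printf 'rtsp://%s:%s%s\n' "$host" "$port" "$mapping"
}

# List the URLs for the four ports used across the demo scripts.
# HOST_IP falls back to 127.0.0.1 (an assumption) when it is not set.
HOST_IP=${HOST_IP:-127.0.0.1}
for port in 6005 6006 6007 6008; do
    rtsp_url "$HOST_IP" "$port"
done
```

Any of the printed URLs can then be passed to vlc or totem as shown above.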

Considerations

Each script includes some definitions it needs to run; you can change them to match your setup. For example, the camera settings:

CAM0=0

CAM0_WIDTH=1280

CAM0_HEIGHT=720

CAM0_FRAMERATE=120
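If you prefer not to edit the scripts every time, one option is to let each definition fall back to its default only when the variable is not already set in the environment. This is a sketch of that pattern, not how the scripts are written today; the default values are the ones shown above.

```shell
# Keep a value supplied through the environment; otherwise use the
# default from the demo scripts.
CAM0=${CAM0:-0}
CAM0_WIDTH=${CAM0_WIDTH:-1280}
CAM0_HEIGHT=${CAM0_HEIGHT:-720}
CAM0_FRAMERATE=${CAM0_FRAMERATE:-120}

# Print the effective settings so a mismatch is easy to spot.
echo "sensor ${CAM0}: ${CAM0_WIDTH}x${CAM0_HEIGHT} @ ${CAM0_FRAMERATE} fps"
```

With this pattern in a script, a run such as `CAM0_FRAMERATE=60 ./test1.sh` would override only the frame rate.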

Please pay special attention to definitions at:

- test3.sh:

Update the PATH_TO_CUDA_FILTER definition to match the path where you downloaded the GstCUDA evaluation version.

PATH_TO_CUDA_FILTER=/home/user/gst-cuda/tests/examples/cudafilter_algorithms/gray-scale-filter/gray-scale-filter.so

- test4.sh:

Update the MODEL_LOCATION and LABELS definitions needed for the Gst-Inference plugin.

labels.txt: attached to this wiki

graph_tinyyolov2_tensorflow.pb: you can get the TensorFlow model for free at the following link.

MODEL_LOCATION='/home/user/graph_tinyyolov2_tensorflow.pb'

LABELS='/home/user/labels.txt'
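Since test3.sh and test4.sh depend on files at user-specific paths, a quick check before creating any pipeline can save some debugging. The sketch below is only a suggestion; the function name check_files is hypothetical and does not exist in the scripts.

```shell
# Hypothetical pre-flight check: report every missing file on stderr and
# return non-zero if at least one of the given paths is not a regular file.
check_files() {
    missing=0
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "Missing file: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Possible usage with the definitions from test3.sh and test4.sh:
# check_files "$PATH_TO_CUDA_FILTER" || exit 1
# check_files "$MODEL_LOCATION" "$LABELS" || exit 1
```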

Tests

Before running each test, make sure the GStreamer Daemon (gstd) is up and running. The following commands stop any running instance and start a new one:

gstd -k

gstd

test1.sh

CAM0=0

CAM0_SRC=cam${CAM0}_src

CAM0_PIPE=${CAM0_SRC}_pipe

CAM0_WIDTH=1280

CAM0_HEIGHT=720

CAM0_FRAMERATE=120

########## GREEN PIPELINE ##########################

#1. Create camera input

echo -e "===> Create the ${CAM0_PIPE}\n"

gstd-client pipeline_create ${CAM0_PIPE} nvarguscamerasrc sensor-id=${CAM0} ! "video/x-raw(memory:NVMM), width=(int)${CAM0_WIDTH}, \
height=(int)${CAM0_HEIGHT}, format=(string)NV12, framerate=(fraction)${CAM0_FRAMERATE}/1" ! nvvidconv \
! interpipesink name=${CAM0_SRC} sync=false async=false

#2.1 Create h264 encoding

gstd-client pipeline_create x264_pipe interpipesrc name=x264_src listen-to=${CAM0_SRC} \
! omxh264enc ! "video/x-h264,stream-format=(string)byte-stream" \
! h264parse ! "video/x-h264,mapping=/stream0" ! \
interpipesink name=x264_sink sync=false async=false

#2.2 Create h265 encoding

gstd-client pipeline_create x265_pipe interpipesrc name=x265_src listen-to=${CAM0_SRC} \
! omxh265enc ! "video/x-h265,stream-format=(string)byte-stream" \
! h265parse ! "video/x-h265,mapping=/stream0" ! \
interpipesink name=x265_sink sync=false async=false

#3.1 Create rtspsink 1 (h264)

echo -e "===> Create the output rtsp sinks \n"

gstd-client pipeline_create stream0_pipe interpipesrc name=rtsp0 listen-to=x264_sink \
! rtspsink service=6005

#3.2 Create rtspsink 2 (h264)

gstd-client pipeline_create stream1_pipe interpipesrc name=rtsp1 listen-to=x264_sink \
! rtspsink service=6006

#3.3 Create rtspsink 3 (h265)

gstd-client pipeline_create stream2_pipe interpipesrc name=rtsp2 listen-to=x265_sink \
! rtspsink service=6007

#3.4 Create rtspsink 4 (h265)

gstd-client pipeline_create stream3_pipe interpipesrc name=rtsp3 listen-to=x265_sink \
! rtspsink service=6008

gstd-client pipeline_play ${CAM0_PIPE}

gstd-client pipeline_play x264_pipe

gstd-client pipeline_play x265_pipe

gstd-client pipeline_play stream0_pipe

gstd-client pipeline_play stream1_pipe

gstd-client pipeline_play stream2_pipe

gstd-client pipeline_play stream3_pipe

####################################################
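All four tests reuse pipeline names such as stream0_pipe and the same RTSP ports, so the pipelines from one test have to go away before the next one starts. Restarting gstd does that. As an alternative, the sketch below stops and deletes pipelines by name with the pipeline_stop and pipeline_delete commands of gstd-client. The function name delete_pipelines and the GSTD_CLIENT override (useful for a dry run with echo) are hypothetical additions.

```shell
# GSTD_CLIENT is a hypothetical override; it defaults to the real client.
# Setting GSTD_CLIENT=echo prints the commands instead of sending them.
GSTD_CLIENT=${GSTD_CLIENT:-gstd-client}

# Stop and then delete every pipeline passed as an argument, in order.
delete_pipelines() {
    for pipe in "$@"; do
        $GSTD_CLIENT pipeline_stop "$pipe"
        $GSTD_CLIENT pipeline_delete "$pipe"
    done
}

# Possible usage after test1.sh, outputs first and the camera last
# (CAM0_PIPE expands to cam0_src_pipe when CAM0=0):
# delete_pipelines stream3_pipe stream2_pipe stream1_pipe stream0_pipe \
#     x265_pipe x264_pipe cam0_src_pipe
```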

test2.sh

CAM1=0

CAM1_SRC=cam${CAM1}_src

CAM1_PIPE=${CAM1_SRC}_pipe

CAM1_WIDTH=1280

CAM1_HEIGHT=720

CAM1_FRAMERATE=120

########## PURPLE PIPELINE ##########################

#1. Create camera input

echo -e "===> Create the ${CAM1_PIPE}\n"

gstd-client pipeline_create ${CAM1_PIPE} nvarguscamerasrc sensor-id=${CAM1} ! "video/x-raw(memory:NVMM), width=(int)${CAM1_WIDTH}, \
height=(int)${CAM1_HEIGHT}, format=(string)NV12, framerate=(fraction)${CAM1_FRAMERATE}/1" \
! interpipesink name=${CAM1_SRC} sync=false async=false

#2.1 Create VP9 encoding

gstd-client pipeline_create vp9_pipe interpipesrc name=vp9_src listen-to=${CAM1_SRC} \
! omxvp9enc ! "video/x-vp9, mapping=/stream0" ! queue \
! interpipesink name=vp9_sink sync=false async=false

#3.1 Create the four rtspsinks, all fed by the VP9 encoder

echo -e "===> Create the output rtsp sinks \n"

gstd-client pipeline_create stream0_pipe interpipesrc name=rtsp0 listen-to=vp9_sink \
! rtspsink service=6005

gstd-client pipeline_create stream1_pipe interpipesrc name=rtsp1 listen-to=vp9_sink \
! rtspsink service=6006

gstd-client pipeline_create stream2_pipe interpipesrc name=rtsp2 listen-to=vp9_sink \
! rtspsink service=6007

gstd-client pipeline_create stream3_pipe interpipesrc name=rtsp3 listen-to=vp9_sink \
! rtspsink service=6008

gstd-client pipeline_play ${CAM1_PIPE}

gstd-client pipeline_play vp9_pipe

gstd-client pipeline_play stream0_pipe

gstd-client pipeline_play stream1_pipe

gstd-client pipeline_play stream2_pipe

gstd-client pipeline_play stream3_pipe

####################################################
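The four output pipelines in test2.sh differ only in their index and port, so they could also be generated in a loop. The following is a sketch of that idea and not a replacement for the script. The function name create_rtsp_outputs and the GSTD_CLIENT override are hypothetical; the element names and properties are the ones used in test2.sh.

```shell
# GSTD_CLIENT is a hypothetical override; it defaults to the real client.
GSTD_CLIENT=${GSTD_CLIENT:-gstd-client}

# Create one rtspsink pipeline per port, all listening to the same
# interpipesink. Pipelines are named stream<N>_pipe and their sources
# rtsp<N>, with N starting at 0, as in test2.sh.
create_rtsp_outputs() {
    sink=$1
    shift
    i=0
    for port in "$@"; do
        $GSTD_CLIENT pipeline_create "stream${i}_pipe" \
            interpipesrc "name=rtsp${i}" "listen-to=${sink}" \
            ! rtspsink "service=${port}"
        i=$((i + 1))
    done
}

# Possible usage, equivalent to step 3.1 of test2.sh:
# create_rtsp_outputs vp9_sink 6005 6006 6007 6008
```

Running it with `GSTD_CLIENT=echo` prints the commands without contacting gstd, which is a cheap way to review them first.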

test3.sh

CAM0=0

CAM0_SRC=cam${CAM0}_src

CAM0_PIPE=${CAM0_SRC}_pipe

CAM0_WIDTH=1280

CAM0_HEIGHT=720

CAM0_FRAMERATE=120

PATH_TO_CUDA_FILTER=/home/user/gst-cuda/tests/examples/cudafilter_algorithms/gray-scale-filter/gray-scale-filter.so

########## RED PIPELINE ##########################

#1. Create camera input

echo -e "===> Create the ${CAM0_PIPE} pipeline\n"

gstd-client pipeline_create ${CAM0_PIPE} nvarguscamerasrc sensor-id=${CAM0} ! "video/x-raw(memory:NVMM), width=(int)${CAM0_WIDTH}, \
height=(int)${CAM0_HEIGHT}, format=(string)NV12, framerate=(fraction)${CAM0_FRAMERATE}/1" \
! interpipesink name=${CAM0_SRC} sync=false async=false

#2.1 Use GstNvStabilize element

gstd-client pipeline_create stabilize_pipe interpipesrc name=stabilize_src listen-to=${CAM0_SRC} \
! nvvidconv ! nvstabilize crop-margin=0.1 queue-size=5 ! nvvidconv ! 'video/x-raw(memory:NVMM)' ! nvvidconv \
! interpipesink name=stabilize_sink sync=false async=false

#2.2 Use GstCuda element

gstd-client pipeline_create cuda_pipe interpipesrc name=cuda_src listen-to=${CAM0_SRC} \
! nvvidconv ! "video/x-raw(memory:NVMM),width=${CAM0_WIDTH},height=${CAM0_HEIGHT},format=I420,framerate=${CAM0_FRAMERATE}/1" ! nvvidconv \
! cudafilter in-place=true location=${PATH_TO_CUDA_FILTER} \
! interpipesink name=cuda_sink sync=false async=false

#3.1 Create h264 encoding for the CUDA-filtered stream

gstd-client pipeline_create x264_pipe interpipesrc name=x264_src listen-to=cuda_sink \
! omxh264enc ! "video/x-h264,stream-format=(string)byte-stream" \
! h264parse ! "video/x-h264,mapping=/stream0" ! \
interpipesink name=x264_sink sync=false async=false

#3.2 Create h264 encoding for the stabilized stream

gstd-client pipeline_create x264_pipe2 interpipesrc name=x264_src2 listen-to=stabilize_sink \
! omxh264enc ! "video/x-h264,stream-format=(string)byte-stream" \
! h264parse ! "video/x-h264,mapping=/stream0" ! \
interpipesink name=x264_sink2 sync=false async=false

#4.1 Create rtspsink 1 (CUDA-filtered stream)

echo -e "===> Create the output rtsp sinks \n"

gstd-client pipeline_create stream0_pipe interpipesrc name=rtsp0 listen-to=x264_sink \
! rtspsink service=6005

#4.2 Create rtspsink 2 (stabilized stream)

gstd-client pipeline_create stream1_pipe interpipesrc name=rtsp1 listen-to=x264_sink2 \
! rtspsink service=6006

gstd-client pipeline_play ${CAM0_PIPE}

gstd-client pipeline_play stabilize_pipe

gstd-client pipeline_play cuda_pipe

gstd-client pipeline_play x264_pipe

gstd-client pipeline_play x264_pipe2

gstd-client pipeline_play stream0_pipe

gstd-client pipeline_play stream1_pipe

###################################################
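Because every stage in test3.sh is connected through interpipesink and interpipesrc elements, the source feeding an output can in principle be changed at runtime by updating the listen-to property of its interpipesrc. The sketch below does this with the element_set command of gstd-client. The function name switch_source and the GSTD_CLIENT override are hypothetical, and the example assumes that both encoded streams have compatible caps, which should be the case here since both come from the same h264 encoding chain.

```shell
# GSTD_CLIENT is a hypothetical override; it defaults to the real client.
GSTD_CLIENT=${GSTD_CLIENT:-gstd-client}

# Point an interpipesrc at a different interpipesink.
switch_source() {
    pipe=$1      # pipeline that contains the interpipesrc
    element=$2   # name of the interpipesrc element
    sink=$3      # name of the interpipesink to listen to
    $GSTD_CLIENT element_set "$pipe" "$element" listen-to "$sink"
}

# Possible usage with the names from test3.sh: make port 6005 serve the
# stabilized stream instead of the CUDA-filtered one.
# switch_source stream0_pipe rtsp0 x264_sink2
```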

test4.sh

CAM1='/dev/video1'

CAM1_WIDTH=1280

CAM1_HEIGHT=720

MODEL_LOCATION='/home/user/graph_tinyyolov2_tensorflow.pb'

INPUT_LAYER='input/Placeholder'

OUTPUT_LAYER='add_8'

LABELS='/home/user/labels.txt'

############# BLUE PIPELINE ####################

#1. Create camera input

echo -e "===> Create the ${CAM1} pipeline\n"

gstd-client pipeline_create cam1_pipe v4l2src device=$CAM1 \
! "video/x-raw, width=${CAM1_WIDTH}, height=${CAM1_HEIGHT}" \
! interpipesink name=cam1_src sync=false async=false

#2.1 Use Gst-inference plugin

gstd-client pipeline_create inference_pipe interpipesrc name=inference_src listen-to=cam1_src \
! videoconvert ! tee name=t t. ! videoscale ! queue ! net.sink_model t. ! queue \
! net.sink_bypass tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow \
backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER net.src_bypass \
! detectionoverlay labels="$(cat $LABELS)" font-scale=1 thickness=2 ! videoconvert \
! omxh264enc ! "video/x-h264,stream-format=(string)byte-stream" \
! h264parse ! "video/x-h264,mapping=/stream0" \
! rtspsink service=6007

#2.2 Encode h264 video

gstd-client pipeline_create cam1_empty interpipesrc name=cam_src_empty listen-to=cam1_src \
! videoconvert ! omxh264enc ! "video/x-h264,stream-format=(string)byte-stream" \
! h264parse ! "video/x-h264,mapping=/stream0" \
! rtspsink service=6008

gstd-client pipeline_play cam1_pipe

gstd-client pipeline_play cam1_empty

gstd-client pipeline_play inference_pipe

###############################################

labels.txt

This file is needed by the Gst-Inference demo (test4.sh). Its contents are:

aeroplane;bicycle;bird;boat;bottle;bus;car;cat;chair;cow;diningtable;dog;horse;motorbike;person;pottedplant;sheep;sofa;train;tvmonitor
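The file is a single line of class names separated by semicolons, and test4.sh passes it verbatim to the labels property of detectionoverlay. The sketch below counts the entries and looks one up by position, which can help when checking a modified label list. It assumes that class index N corresponds to the Nth entry counting from 0; the function names count_labels and label_at are hypothetical.

```shell
# Contents of labels.txt, copied from above.
LABELS_LINE='aeroplane;bicycle;bird;boat;bottle;bus;car;cat;chair;cow;diningtable;dog;horse;motorbike;person;pottedplant;sheep;sofa;train;tvmonitor'

# Print how many semicolon-separated labels a line contains.
count_labels() {
    printf '%s\n' "$1" | tr ';' '\n' | wc -l | tr -d ' '
}

# Print the label at a zero-based index (cut fields are one-based).
label_at() {
    printf '%s\n' "$1" | cut -d ';' -f "$(($2 + 1))"
}

count_labels "$LABELS_LINE"
label_at "$LABELS_LINE" 14
```

With a real file, the same functions can be called as `count_labels "$(cat "$LABELS")"`.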