GstExtractor

Overview

GstExtractor is a GStreamer plug-in that extracts data embedded at the end of a video frame and lets you add custom parsing for it. The parsed data can be sent downstream as KLV metadata or as H264/5 SEI metadata, or exposed as a readable GstExtractor property so that the controlling application can retrieve the values of interest. GstExtractor separates the embedded data from the actual video frame and outputs them on different pads, so downstream video-processing elements never see the extra data region. The separation is performed without copying the video frame, so the processing time is mainly due to the custom data parsing.

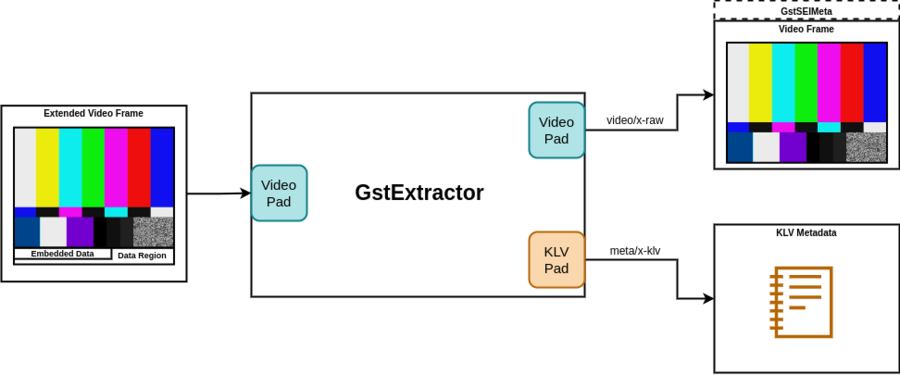

Figure 1 shows the basic functionality of GstExtractor. Here we have an input extended video frame (video frame + embedded data in a data region at the end of the frame) and two outputs:

1. A normal video frame that might or might not carry H264/5 SEI metadata as a GstMeta structure.

2. KLV metadata.

It is the responsibility of the user to implement an appropriate customized parser and add the parser to the GstExtractor. The parser generates the binary SEI and KLV encoded data.

Note: To use the SEI metadata feature, you also need to acquire RidgeRun's GstSEIMetadata plugin.

Getting the Code

When you purchase GstExtractor, you will get a git repository with the source code inside. Since you are adding a customized parser, you need to build the plug-in on your system and then add it to your GStreamer catalogue. Clone the repository using something similar to:

git clone git://git@gitlab.com/RidgeRun/orders/${CUSTOMER_DIRECTORY}/gst-extractor.git

cd gst-extractor

GST_EXTRACTOR_DIR=${PWD}

Here, ${CUSTOMER_DIRECTORY} is the name of the customer directory that RidgeRun gives you after purchase.

How to Use GstExtractor

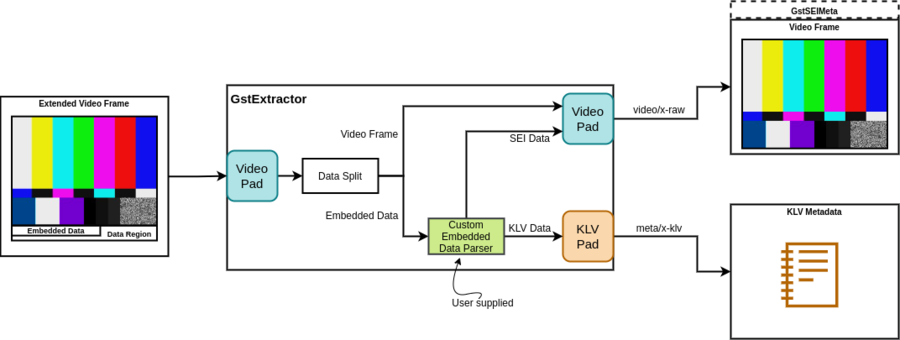

A deeper view of the GstExtractor functionality can be seen in Figure 2.

GstExtractor already takes care of all the GStreamer functionality for the pads, buffer creation, caps negotiation and so on. You only need to add the custom embedded data parser.

In order to use GstExtractor you need to set up three main things:

- Provide an input video stream with an extended video frame containing a data region.

- Set the GstExtractor element properties according to any data you want to make available to the controlling application.

- Implement a custom embedded data parser for extracting the embedded data.

Extended Video Frame Structure

The extended video frame shall have the following structure: first the video frame memory and, immediately after it ends, the contiguous memory for the data region.

The extended video frame cannot simply have a size of (video frame size + embedded data size), because that size would not be compliant with any video format and such an arbitrary frame would not be able to pass through a GStreamer pipeline. Instead, we need to define a height padding in pixels, called the data region, that is big enough to contain the embedded data. For example, if we have a YUY2 video frame of 1280x720 pixels and an embedded data size of 34 bytes, then 1 pixel of height padding is enough because (1280 pixels * 2 bytes per pixel * 1 pixel of height) = 2560 bytes > 34 bytes. The extended video frame then needs a resolution of 1280x721, where the data region size is 2560 bytes and the embedded data uses 34 of them.
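The arithmetic above can be sketched in C. This is only an illustration, assuming a packed format such as YUY2 where every row occupies width * bytes-per-pixel bytes; the function names are hypothetical and are not part of GstExtractor.

```c
#include <assert.h>
#include <stddef.h>

/* Smallest number of extra rows able to hold data_size bytes.
 * Assumes a packed format: each row is width * bytes_per_pixel bytes. */
size_t
min_height_padding (size_t width, size_t bytes_per_pixel, size_t data_size)
{
  size_t row_bytes = width * bytes_per_pixel;

  assert (row_bytes > 0);
  /* Integer ceiling division */
  return (data_size + row_bytes - 1) / row_bytes;
}

/* Byte offset of the data region inside the extended frame, that is,
 * the size of the video frame that precedes it. */
size_t
data_region_offset (size_t width, size_t height, size_t bytes_per_pixel)
{
  return width * height * bytes_per_pixel;
}
```

For the 1280x720 YUY2 example, min_height_padding (1280, 2, 34) gives 1 row, and the data region starts 1843200 bytes into the extended frame.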

GstExtractor Element Properties

The GstExtractor element has the following properties:

| Property | Description | Flags and type |
|---|---|---|
| value | Binary value to query some custom information included in the frame embedded data. | flags: readable. Boxed pointer of type "GByteArray" |
| data-size | Binary value to query some custom information included in the frame embedded data. | flags: readable. Boxed pointer of type "GByteArray" |
| height-padding | Extra height padding added in pixel units. | flags: readable, writable. Integer. Range: 0 - 2147483647. Default: 0 |
| filepath | Path to dummy frame to use in replacement of input buffer when a custom condition is met. | flags: readable, writable. String. Default: null |

- The data-size and height-padding properties must be consistent with the extended video frame structure. Following the previous example, you would set data-size=34 and height-padding=1.

- The value property is read-only and is intended to be queried from a controlling application. The custom embedded data parser is responsible for assigning the appropriate binary value to it.

- The filepath property is optional. Set it if you want to show a dummy image when a given condition is met. This image should be raw (not encoded) and of the same format and size as the normal stream. The custom embedded data parser is responsible for deciding, based on the contents of the embedded data, whether the actual frame, the dummy image, or no frame at all (dropping) should go downstream. An example use is embedded data that includes a flag indicating that the frame is empty because no video signal is being received.

Note: Output caps are set based on height-padding, so this property is only taken into account during caps negotiation. Writing to it afterwards has no effect.
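Since the dummy image must be raw and match the stream, a quick size check before setting filepath can catch a wrong file early. The following is a minimal sketch under two assumptions: the format is packed (as with YUY2), and "size of the normal stream" means the frame without the data region. Both helper names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Expected size in bytes of one raw frame in a packed format. */
size_t
raw_frame_size (size_t width, size_t height, size_t bytes_per_pixel)
{
  return width * height * bytes_per_pixel;
}

/* Returns 1 if the file at path holds exactly expected bytes, and 0
 * otherwise (including when the file cannot be opened). */
int
file_has_size (const char *path, size_t expected)
{
  FILE *f;
  long size;

  assert (path != NULL);

  f = fopen (path, "rb");
  if (f == NULL)
    return 0;

  if (fseek (f, 0, SEEK_END) != 0) {
    fclose (f);
    return 0;
  }

  size = ftell (f);
  fclose (f);

  return size >= 0 && (size_t) size == expected;
}
```

For a 1280x720 YUY2 stream, the dummy file would be expected to hold raw_frame_size (1280, 720, 2) bytes, which is 1843200.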

Custom Data Parsing Implementation

GstExtractor is in charge of separating the embedded data from the video frame and of handling the embedded data appropriately, for example by sending it as H264/5 SEI metadata and as a KLV metadata buffer. GstExtractor includes helper functions that make it easy for the custom embedded data parser to send SEI and KLV data downstream, as shown in Figure 2. To write your custom embedded data parser, modify the special code section that GstExtractor provides in the file gst/gstextractor.c. This section currently contains a basic example of how this could be done, but you must modify it according to your needs:

{

/* TODO: Add your custom parsing here. This is just an example that

simulates that the first sei_size bytes are a timestamp and the

following bytes are some other KLV data. In this example the last

bin_size bytes of KLV data are the value we would like to query from the

user point of view. Also if those bin_size bytes represent a number less

than bin_threshold the dummy frame will be used instead of the input

buffer. There's also the option to drop the frame and/or metadata, in

order to show this feature the drop_frame and drop_meta will be set

according to a given probability. */

const gint bin_size = 5;

const gint bin_threshold = 50;

const gfloat drop_probability = 0.6;

sei_size = 20;

klv_size = 14;

sei_data = (guint8 *) g_memdup (data, sei_size);

klv_data = (guint8 *) g_memdup (data + sei_size, klv_size);

GST_OBJECT_LOCK (self);

if (self->value) {

g_byte_array_free (self->value, TRUE);

}

self->value = g_byte_array_sized_new (bin_size);

self->value->len = bin_size;

memcpy (self->value->data, klv_data + (klv_size - bin_size), bin_size);

/* Determine if the frame and/or meta should be dropped */

drop_frame = ((gfloat) (1.0 * rand () / (RAND_MAX)) < drop_probability);

drop_meta = drop_frame;

if ((atoi ((char *) self->value->data) < bin_threshold) && self->filepath

&& !drop_frame) {

/* Replace input buffer with our dummy buffer */

GST_BUFFER_PTS (self->dummy_buf) = GST_BUFFER_PTS (inbuf);

gst_buffer_unref (inbuf);

gst_buffer_ref (self->dummy_buf);

inbuf = self->dummy_buf;

}

GST_OBJECT_UNLOCK (self);

}
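The example copies sei_size and klv_size bytes without checking them against the amount of embedded data available. A bounds-checked version of the same split could look like the sketch below. It uses plain C instead of GLib so it can be tried in isolation, and split_embedded_data is a hypothetical helper, not a GstExtractor function.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Copies the first sei_size bytes of data into *sei_out and the next
 * klv_size bytes into *klv_out. Returns 0 on success, or -1 if a size is
 * zero, the sizes do not fit in data_size, or an allocation fails.
 * The caller frees both outputs with free(). */
int
split_embedded_data (const unsigned char *data, size_t data_size,
    size_t sei_size, size_t klv_size,
    unsigned char **sei_out, unsigned char **klv_out)
{
  unsigned char *sei;
  unsigned char *klv;

  assert (data != NULL && sei_out != NULL && klv_out != NULL);

  if (sei_size == 0 || klv_size == 0)
    return -1;
  if (sei_size > data_size || klv_size > data_size - sei_size)
    return -1;

  sei = malloc (sei_size);
  klv = malloc (klv_size);
  if (sei == NULL || klv == NULL) {
    free (sei);
    free (klv);
    return -1;
  }

  memcpy (sei, data, sei_size);
  memcpy (klv, data + sei_size, klv_size);

  *sei_out = sei;
  *klv_out = klv;
  return 0;
}
```

With data-size=34, a call using sei_size = 20 and klv_size = 14 succeeds, while klv_size = 15 is rejected instead of reading past the embedded data.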

Example Data Parsing Implementation Walkthrough

1. First we declare and assign some variables for the parsing:

const gint bin_size = 5;

const gint bin_threshold = 50;

const gfloat drop_probability = 0.6;

sei_size = 20;

klv_size = 14;

It is mandatory to assign sei_size and klv_size their corresponding values; otherwise they will be set to 0 and the subsequent buffer/metadata creation will fail. bin_size and bin_threshold, on the other hand, are local variables that the parser uses to set the value property and to decide when to replace the input buffer with a dummy frame, respectively, so they are optional. drop_probability is optional as well; it only shows how you could configure the parser to drop a frame and/or its metadata.

2. "Parse" and assign the result to the provided pointers:

sei_data = (guint8 *) g_memdup (data, sei_size);

klv_data = (guint8 *) g_memdup (data + sei_size, klv_size);

In this example we are not really parsing anything; we just copy the data into the sei_data and klv_data pointers. In a real parser this assignment should be the last step, after you parse/encode your data. Keep in mind that sei_data and klv_data are null pointers at that point, so you need to allocate them with sei_size and klv_size bytes respectively (in this case the g_memdup function takes care of it). Note that g_memdup is deprecated since GLib 2.68 in favor of g_memdup2.

Also, note that the SEI and KLV data do not necessarily need to be encoded for the pipeline to work. They are just binary data that your application should know how to interpret when it receives them.

3. Lock the object since we will modify data that can be accessed from another thread:

GST_OBJECT_LOCK (self);

4. Assign the appropriate binary data to the value property:

if (self->value) {

g_byte_array_free (self->value, TRUE);

}

self->value = g_byte_array_sized_new (bin_size);

self->value->len = bin_size;

memcpy (self->value->data, klv_data + (klv_size - bin_size), bin_size);

If you don't need this step, just remove this code.

5. Determine if the frame and/or meta should be dropped

drop_frame = ((gfloat) (1.0 * rand () / (RAND_MAX)) < drop_probability);

drop_meta = drop_frame;

In this example we are just setting a probability for dropping the frame to show how the feature works but you can omit it if you don't need it.

6. Create a proper custom condition to decide when to replace the input buffer with the dummy frame. You should only replace the (atoi ((char *) self->value->data) < bin_threshold) condition. Keep in mind that for this piece of code to work you need to set the filepath property on the extractor element.

if ((atoi ((char *) self->value->data) < bin_threshold) && self->filepath /* Replace with custom condition */

&& !drop_frame) {

/* Replace input buffer with our dummy buffer */

GST_BUFFER_PTS (self->dummy_buf) = GST_BUFFER_PTS (inbuf);

gst_buffer_unref (inbuf);

gst_buffer_ref (self->dummy_buf);

inbuf = self->dummy_buf;

inbuf = gst_buffer_make_writable (inbuf);

}

This custom condition includes the drop_frame flag verification so if you set it to TRUE, it won't use the dummy buffer since it would be useless. If you don't need this step, just remove this code.

7. Unlock the object.

GST_OBJECT_UNLOCK (self);

8. Follow the next section to build, install and test your changes.
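The example condition calls atoi directly on the bytes of the value property, which only works if those bytes contain a terminating NUL. If your embedded field is a fixed-width ASCII number without a terminator, a bounded copy is safer. The sketch below shows one way to do it in plain C; parse_decimal_field, use_dummy_frame and MAX_FIELD are hypothetical names, and overflow of very long numbers is not handled.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MAX_FIELD 31

/* Parses a fixed-width ASCII decimal field that may lack a terminating NUL.
 * Returns 0 and stores the number in *out, or -1 if the field is empty,
 * longer than MAX_FIELD, or does not start with a number. */
int
parse_decimal_field (const unsigned char *field, size_t len, long *out)
{
  char tmp[MAX_FIELD + 1];
  char *end = NULL;
  long value;

  assert (field != NULL && out != NULL);

  if (len == 0 || len > MAX_FIELD)
    return -1;

  /* Work on a private, NUL-terminated copy of the field */
  memcpy (tmp, field, len);
  tmp[len] = '\0';

  value = strtol (tmp, &end, 10);
  if (end == tmp)
    return -1;

  *out = value;
  return 0;
}

/* Same decision as the example condition: use the dummy frame when the
 * value is below the threshold, a dummy frame is configured, and the
 * frame is not being dropped. */
int
use_dummy_frame (long value, long threshold, int have_dummy, int drop_frame)
{
  return value < threshold && have_dummy && !drop_frame;
}
```

With bin_threshold = 50, a field containing "0149" parses to 149 and keeps the input frame, while a field containing "00042" parses to 42 and selects the dummy frame if one is configured.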

Building GstExtractor

Install Dependencies

GstExtractor has the following dependencies:

- GStreamer

- GStreamer Plugins Base

- Meson

- GstSEIMetadata

In order to install the first three run the following commands:

# GStreamer

sudo apt install libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev gstreamer1.0-plugins-base

# Meson

sudo apt install python3 python3-pip python3-setuptools python3-wheel ninja-build

sudo -H pip3 install git+https://github.com/mesonbuild/meson.git

GstSEIMetadata is a proprietary product from RidgeRun, so refer to the GstSEIMetadata documentation to learn how to get and install it.

Compile and Install

First, find the path where GStreamer looks for plug-ins and libraries (LIBDIR). Use the following table as a reference:

| Platform | LIBDIR path |
|---|---|
| PC 64-bits/x86 | /usr/lib/x86_64-linux-gnu/ |
| NVIDIA Jetson | /usr/lib/aarch64-linux-gnu/ |

Then, with the LIBDIR variable set to the path for your platform, run:

cd $GST_EXTRACTOR_DIR # Path to your gst-extractor cloned repository

meson build --prefix /usr --libdir $LIBDIR

ninja -C build

sudo ninja -C build install

Examples

Here are some examples for you to test the gst-extractor.

Simple Examples

Display

This pipeline will allow you to display the normal input video (without metadata) and will send the KLV metadata to a file.

- NVIDIA Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 name=ext ext.video ! queue ! nvvidconv ! "video/x-raw(memory:NVMM),format=NV12" ! nvoverlaysink sync=false ext.klv ! queue ! filesink location=meta.klv

- Generic Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 name=ext ext.video ! queue ! videoconvert ! ximagesink sync=false ext.klv ! queue ! filesink location=meta.klv

Display Using Dummy Frame

This pipeline displays the normal input video (without metadata), but replaces the input buffer with the dummy frame pointed to by filepath whenever the parser's custom condition is met. It also sends the KLV metadata to a file.

- NVIDIA Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

FILEPATH="<path-to-dummy-frame-yuy2>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 filepath=${FILEPATH} name=ext ext.video ! queue ! nvvidconv ! "video/x-raw(memory:NVMM),format=NV12" ! nvoverlaysink sync=false ext.klv ! queue ! filesink location=meta.klv

- Generic Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

FILEPATH="<path-to-dummy-frame-yuy2>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 name=ext filepath=${FILEPATH} ext.video ! queue ! videoconvert ! ximagesink sync=false ext.klv ! queue ! filesink location=meta.klv

Display Dropping Frames and Videorate

This pipeline displays the normal input video (without metadata) and keeps the video frames flowing by using a videorate element, which duplicates the last valid frame when it does not receive a new one because the parser set drop_frame to TRUE. It also sends the KLV metadata to a file.

- NVIDIA Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

FILEPATH="<path-to-dummy-frame-yuy2>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 name=ext ext.video ! videorate ! video/x-raw,framerate=30/1 ! queue ! nvvidconv ! "video/x-raw(memory:NVMM),format=NV12" ! nvoverlaysink sync=false ext.klv ! queue ! filesink location=meta.klv

- Generic Elements:

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

FILEPATH="<path-to-dummy-frame-yuy2>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor data-size=34 height-padding=2 name=ext ext.video ! videorate ! video/x-raw,framerate=30/1 ! queue ! videoconvert ! ximagesink sync=false ext.klv ! queue ! filesink location=meta.klv

Advanced Examples

This section shows use cases closer to real applications, which demonstrate how the extractor can be used inside more complex pipelines.

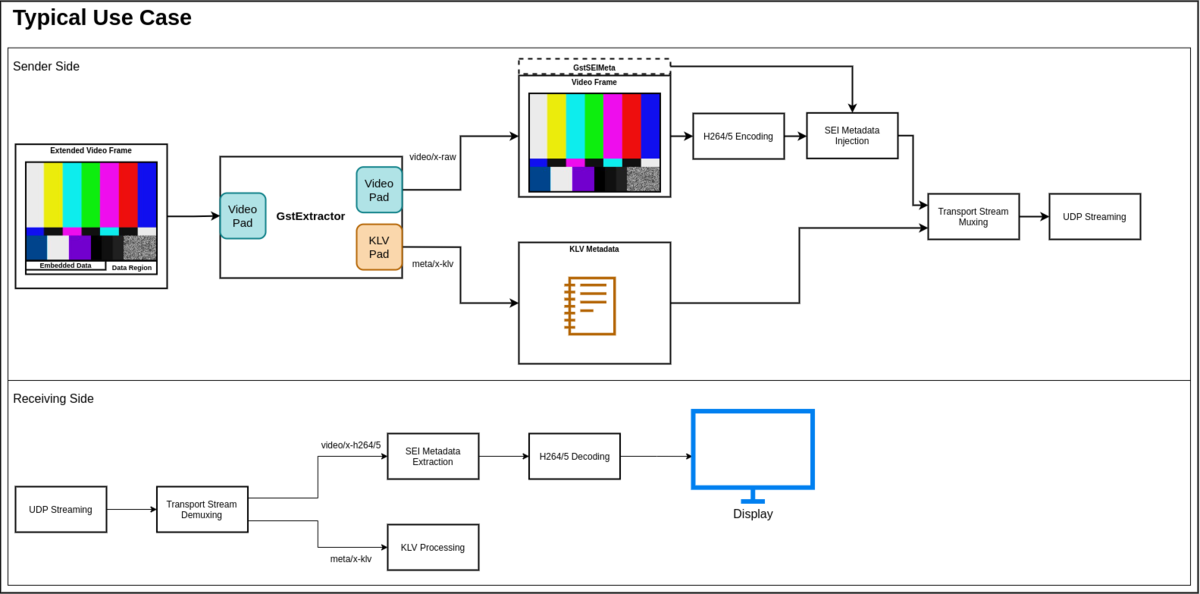

Sending Split Video and Metadata through UDP Using MPEG-TS

One typical use case for the KLV and SEI metadata is to be sent across UDP. The following image shows a diagram of this.

For a case like this we could use the following pipelines:

- Sender Side

IP="224.1.1.1"

PORT="12345"

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

FILEPATH="<path-to-dummy-frame-yuy2>"

gst-launch-1.0 filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor name=ext data-size=34 height-padding=2 filepath=${FILEPATH} ext.video ! queue ! nvvidconv ! nvv4l2h265enc name=encoder bitrate=2000000 iframeinterval=300 vbv-size=33333 insert-sps-pps=true control-rate=constant_bitrate profile=Main num-B-Frames=0 ratecontrol-enable=true preset-level=UltraFastPreset EnableTwopassCBR=false maxperf-enable=true ! seiinject ! h265parse ! queue ! mux. ext.klv ! meta/x-klv ! queue ! mpegtsmux name=mux alignment=7 ! udpsink host=${IP} port=${PORT} auto-multicast=true

- Receiving Side

IP="224.1.1.1"

PORT="12345"

GST_DEBUG=2,*seiextract*:MEMDUMP gst-launch-1.0 udpsrc port=${PORT} address=${IP} ! tsdemux name=demux demux. ! queue ! h265parse ! seiextract ! avdec_h265 ! autovideosink sync=false demux. ! queue ! 'meta/x-klv' ! fakesink sync=false

Note that to use this pipeline you need GstSEIMetadata installed on the receiving side too. The debug flags GST_DEBUG=2,*seiextract*:MEMDUMP let you see the extracted SEI metadata in the GStreamer debug output. If you would also like to see the KLV metadata in the terminal output, you can check our GStreamer In-Band Metadata for MPEG Transport Stream plugin and replace the fakesink element with metasink. Then you should see something similar to this on the receiving side:

# KLV meta

00000000 (0x7f893402e590): 73 6f 6d 65 20 6b 6c 76 20 30 31 34 39 00 some klv 0149.

# SEI meta

0:00:43.188390365 146210 0x55b6049b0f60 LOG seiextract gstseiextract.c:386:gst_sei_extract_prepare_output_buffer:<seiextract0> prepare_output_buffer

0:00:43.188486504 146210 0x55b6049b0f60 MEMDUMP seiextract gstseiextract.c:356:gst_sei_extract_extract_h265_data:<seiextract0> ---------------------------------------------------------------------------

0:00:43.188530839 146210 0x55b6049b0f60 MEMDUMP seiextract gstseiextract.c:356:gst_sei_extract_extract_h265_data:<seiextract0> The extracted data is:

0:00:43.188578709 146210 0x55b6049b0f60 MEMDUMP seiextract gstseiextract.c:356:gst_sei_extract_extract_h265_data:<seiextract0> 00000000: 30 35 2d 31 31 2d 32 30 32 31 2d 31 35 3a 34 33 05-11-2021-15:43

0:00:43.188620166 146210 0x55b6049b0f60 MEMDUMP seiextract gstseiextract.c:356:gst_sei_extract_extract_h265_data:<seiextract0> 00000010: 3a 30 30 20 :00

0:00:43.188650823 146210 0x55b6049b0f60 MEMDUMP seiextract gstseiextract.c:356:gst_sei_extract_extract_h265_data:<seiextract0> ---------------------------------------------------------------------------

Here the injected metadata was some klv 0149 for KLV and 05-11-2021-15:43:00 for SEI.
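The sample output lines up with the example parser: the SEI dump is 20 bytes (sei_size) and the KLV dump is 14 bytes (klv_size), which add up to data-size=34. If you need to generate test input, the embedded data could be composed as in the sketch below. The layout is inferred from the dumps above, and build_embedded_data is a hypothetical helper, not part of GstExtractor.

```c
#include <assert.h>
#include <string.h>

#define SEI_SIZE 20
#define KLV_SIZE 14
#define DATA_SIZE (SEI_SIZE + KLV_SIZE)

/* Fills out[DATA_SIZE] with the SEI part followed by the KLV part.
 * sei must be exactly SEI_SIZE characters long. klv must be shorter than
 * KLV_SIZE so that at least one terminating NUL fits; unused KLV bytes
 * are zeroed. Returns 0 on success and -1 on a length mismatch. */
int
build_embedded_data (const char *sei, const char *klv, unsigned char *out)
{
  size_t klv_len;

  assert (sei != NULL && klv != NULL && out != NULL);

  klv_len = strlen (klv);
  if (strlen (sei) != SEI_SIZE || klv_len >= KLV_SIZE)
    return -1;

  memset (out, 0, DATA_SIZE);
  memcpy (out, sei, SEI_SIZE);
  memcpy (out + SEI_SIZE, klv, klv_len);
  return 0;
}
```

Calling build_embedded_data ("05-11-2021-15:43:00 ", "some klv 0149", out) produces the 34 bytes seen in the dumps. The last 5 bytes are "0149" plus a NUL, which is why the atoi call in the example parser reads 149.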

Handling Dropped Frames Gracefully

We can improve the last example by using an application to control how the pipeline behaves when frames are being dropped. The GstExtractor element posts messages to the bus so that applications know when the stream is lost (frames are being dropped) and when it has recovered (frames are no longer being dropped). These messages have the following structure:

- stream-recovered: Posted to the bus when the element pushes a frame downstream after having dropped one or more.

"stream-recovered" : {

}

- stream-lost: Posted to the bus every time a video frame is dropped. The drop-count field increases accordingly.

"stream-lost" : {

"drop-count" : 49

}

In the next example we use those messages to read the number of dropped frames. If that number reaches a threshold, we switch the input stream to a dummy stream that plays a file on loop with a Signal Lost message.
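Before looking at the full application, the message handling can be tried on its own. The sketch below extracts drop-count from a message string with strstr and strtol. It assumes the message text uses exactly the spacing shown in the structures above; the function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define DROP_KEY "\"drop-count\" : "
#define RECOVERED_KEY "\"stream-recovered\""

/* Looks for the drop-count field in a bus message string. Returns 1 and
 * stores the count in *count when it is found, and 0 otherwise. */
int
parse_drop_count (const char *message, long *count)
{
  const char *pos;
  char *end = NULL;
  long value;

  assert (message != NULL && count != NULL);

  pos = strstr (message, DROP_KEY);
  if (pos == NULL)
    return 0;

  /* The number starts right after the key */
  pos += strlen (DROP_KEY);
  value = strtol (pos, &end, 10);
  if (end == pos)
    return 0;

  *count = value;
  return 1;
}

/* Returns 1 if the message contains the stream-recovered structure name. */
int
is_stream_recovered (const char *message)
{
  assert (message != NULL);
  return strstr (message, RECOVERED_KEY) != NULL;
}
```

Compared with atoi, strtol makes it possible to tell a missing number apart from a count of 0.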

Extra Requirements

For this example we will be using the GStreamer Daemon and gst-interpipe RidgeRun products, so make sure you install them first.

Also, make sure you have the dummy video already created. This can be done with the following gst-launch-1.0 pipeline:

gst-launch-1.0 videotestsrc pattern=ball num-buffers=300 ! video/x-raw,width=1280,height=720,format=NV12 ! textoverlay text="Signal Lost" valignment=center ! nvvidconv ! nvv4l2h265enc ! mpegtsmux ! filesink location=dummy_video.ts

Full Example

#include <glib.h>

#include <signal.h>

#include "libgstc.h"

#define EXTRACTOR_NAME "pipe"

#define EXTRACTOR_PIPE "filesrc location=<path-to-yuy2-1280-722-file-with-metadata> ! \

videoparse width=1280 height=722 format=yuy2 framerate=30 ! identity sync=true ! \

extractor name=ext data-size=34 height-padding=2 ext.video ! nvvidconv ! \

nvv4l2h265enc name=encoder bitrate=2000000 iframeinterval=30 vbv-size=33333 \

insert-sps-pps=true control-rate=constant_bitrate profile=Main num-B-Frames=0 \

ratecontrol-enable=true preset-level=UltraFastPreset EnableTwopassCBR=false \

maxperf-enable=true ! seiinject ! h265parse ! mux. ext.klv ! meta/x-klv ! \

queue ! mux. mpegtsmux name=mux alignment=7 ! interpipesink name=extractor \

\

interpipesrc name=ext_src listen-to=extractor ! udpsink host=<client-ip> port=<client-port> async=false"

#define DUMMY_NAME "dummy"

#define DUMMY_PIPE "multifilesrc location=<path-to-your-dummy-video> loop=true \

! tsdemux ! mpegtsmux ! interpipesink name=dummy_video sync=true"

#define DROP_STR_PARSE "\"drop-count\" : "

#define DROP_STR_LEN 15

#define RECOVER_STR_PARSE "\"stream-recovered\""

static int running = 1;

static void

sig_handler (int sig)

{

g_print ("Closing...\n");

running = 0;

}

gint

main (gint argc, gchar * argv[])

{

GstClient *client = NULL;

GstcStatus ret = 0;

gboolean listening_dummy = FALSE;

gchar *message = NULL;

gchar *str = NULL;

gint total_drop_count = 0;

const gchar *address = "127.0.0.1";

const guint port = 5000;

const long wait_time = -1;

const gint keep_open = 1;

const gint max_dropped = 5;

const guint64 bus_timeout = 5000000000;

ret = gstc_client_new (address, port, wait_time, keep_open, &client);

if (GSTC_OK != ret) {

g_printerr ("There was a problem creating a GstClient: %d\n", ret);

goto out;

}

gstc_pipeline_create (client, EXTRACTOR_NAME, EXTRACTOR_PIPE);

gstc_pipeline_create (client, DUMMY_NAME, DUMMY_PIPE);

gstc_pipeline_play (client, DUMMY_NAME);

gstc_pipeline_play (client, EXTRACTOR_NAME);

g_print ("Press ctrl+c to stop the pipeline...\n");

signal (SIGINT, sig_handler);

/* Loop for waiting on the stream-lost and stream-recovered messages. If no

message is received in 5 seconds, exit the loop */

while (running) {

/* The extractor messages are from the 'element' category */

ret =

gstc_pipeline_bus_wait (client, EXTRACTOR_NAME, "element", bus_timeout,

&message);

if (GSTC_OK == ret) {

/* Parse the message to find the number of frames continuously dropped */

str = g_strstr_len (message, -1, DROP_STR_PARSE);

if (str) {

/* According to the message structure the number of frames is right

after the string we looked for */

gint drop_count = atoi (str + DROP_STR_LEN);

total_drop_count++;

if (drop_count >= max_dropped && !listening_dummy) {

/* If the drop count is above the limit and we are not already on the

dummy stream, switch to it */

gstc_element_set (client, EXTRACTOR_NAME, "ext_src", "listen-to",

"dummy_video");

listening_dummy = TRUE;

}

continue;

}

/* Parse the message to find out if the stream is recovered */

str = g_strstr_len (message, -1, RECOVER_STR_PARSE);

if (str) {

/* The stream is recovered, switch back to the extractor pipe */

gstc_element_set (client, EXTRACTOR_NAME, "ext_src", "listen-to",

"extractor");

listening_dummy = FALSE;

}

/* Reset the pointer */

str = NULL;

} else {

g_printerr ("Unable to read from bus: %d\n", ret);

break;

}

}

g_print ("Total dropped %d\n", total_drop_count);

gstc_pipeline_stop (client, EXTRACTOR_NAME);

gstc_pipeline_stop (client, DUMMY_NAME);

gstc_pipeline_delete (client, EXTRACTOR_NAME);

gstc_pipeline_delete (client, DUMMY_NAME);

gstc_client_free (client);

out:

return ret;

}

Example Walkthrough

1. Include headers required for using signals, gstd and GLib functions.

#include <glib.h>

#include <signal.h>

#include "libgstc.h"

2. Define pipelines descriptions.

#define EXTRACTOR_NAME "pipe"

#define EXTRACTOR_PIPE "filesrc location=<path-to-yuy2-1280-722-file-with-metadata> ! \

videoparse width=1280 height=722 format=yuy2 framerate=30 ! identity sync=true ! \

extractor name=ext data-size=34 height-padding=2 ext.video ! nvvidconv ! \

nvv4l2h265enc name=encoder bitrate=2000000 iframeinterval=30 vbv-size=33333 \

insert-sps-pps=true control-rate=constant_bitrate profile=Main num-B-Frames=0 \

ratecontrol-enable=true preset-level=UltraFastPreset EnableTwopassCBR=false \

maxperf-enable=true ! seiinject ! h265parse ! mux. ext.klv ! meta/x-klv ! \

queue ! mux. mpegtsmux name=mux alignment=7 ! interpipesink name=extractor \

\

interpipesrc name=ext_src listen-to=extractor ! udpsink host=<client-ip> port=<client-port> async=false"

#define DUMMY_NAME "dummy"

#define DUMMY_PIPE "multifilesrc location=<path-to-your-dummy-video> loop=true \

! tsdemux ! mpegtsmux ! interpipesink name=dummy_video sync=true"

Remember to set the <path-to-yuy2-1280-722-file-with-metadata>, <path-to-your-dummy-video>, <client-ip> and <client-port> variables according to your environment.

3. Define some important global variables and macros.

#define DROP_STR_PARSE "\"drop-count\" : "

#define DROP_STR_LEN 15

#define RECOVER_STR_PARSE "\"stream-recovered\""

These are for parsing the bus messages that we are sending from the extractor element.

4. Create a signal mechanism to stop the program properly.

static int running = 1;

static void

sig_handler (int sig)

{

g_print ("Closing...\n");

running = 0;

}

This piece of code stops the while loop when a Ctrl+C interruption is received, so that all the resources are freed properly.

5. Define the main function and some local variables we will be using.

gint

main (gint argc, gchar * argv[])

{

GstClient *client = NULL;

GstcStatus ret = 0;

gboolean listening_dummy = FALSE;

gchar *message = NULL;

gchar *str = NULL;

gint total_drop_count = 0;

const gchar *address = "127.0.0.1";

const guint port = 5000;

const long wait_time = -1;

const gint keep_open = 1;

const gint max_dropped = 5;

const guint64 bus_timeout = 5000000000;

6. Create the GStreamer Daemon client.

ret = gstc_client_new (address, port, wait_time, keep_open, &client);

if (GSTC_OK != ret) {

g_printerr ("There was a problem creating a GstClient: %d\n", ret);

goto out;

}

Keep in mind that this function will fail if you haven't started the gstd server.

7. Create the pipelines and set them to play.

gstc_pipeline_create (client, EXTRACTOR_NAME, EXTRACTOR_PIPE);

gstc_pipeline_create (client, DUMMY_NAME, DUMMY_PIPE);

gstc_pipeline_play (client, DUMMY_NAME);

gstc_pipeline_play (client, EXTRACTOR_NAME);

g_print ("Press ctrl+c to stop the pipeline...\n");

signal (SIGINT, sig_handler);

8. Start the main loop to listen on bus messages of the extractor pipeline.

/* Loop for waiting on the stream-lost and stream-recovered messages. If no

message is received in 5 seconds, exit the loop */

while (running) {

/* The extractor messages are from the 'element' category */

ret =

gstc_pipeline_bus_wait (client, EXTRACTOR_NAME, "element", bus_timeout,

&message);

if (GSTC_OK == ret) {

For simplicity, and given our gst-extractor custom parser code, we expect frames to be dropped within less than 5 seconds, so it is acceptable to use a limited timeout when waiting for the message. You are free to handle this according to your use case.

9. Parse the received element message to see if it has the stream-lost message structure.

str = g_strstr_len (message, -1, DROP_STR_PARSE);

if (str) {

/* According to the message structure the number of frames is right

after the string we looked for */

gint drop_count = atoi (str + DROP_STR_LEN);

total_drop_count++;

if (drop_count >= max_dropped && !listening_dummy) {

/* If the drop count is above the limit and we are not already on the

dummy stream, switch to it */

gstc_element_set (client, EXTRACTOR_NAME, "ext_src", "listen-to",

"dummy_video");

listening_dummy = TRUE;

}

continue;

}

Here we are looking for the drop-count message part, and if it exists we query the number of frames that have been dropped consecutively. If this value is greater than the threshold and we are not already listening to the dummy source, then switch to the dummy source to let the streaming client know that something happened.

10. Parse the received message to see if it has the stream-recovered structure instead.

/* Parse the message to find out if the stream is recovered */

str = g_strstr_len (message, -1, RECOVER_STR_PARSE);

if (str) {

/* The stream is recovered, switch back to the extractor pipe */

gstc_element_set (client, EXTRACTOR_NAME, "ext_src", "listen-to",

"extractor");

listening_dummy = FALSE;

}

/* Reset the pointer */

str = NULL;

If the message was not a stream-lost message then it can be a stream-recovered message, which leads us to switch back to the main source with the extractor element.

11. Exit the loop if no message was received during the given timeout.

} else {

g_printerr ("Unable to read from bus: %d\n", ret);

break;

}

}

In our case this will only happen when the video file with embedded metadata has reached the end of the stream.

12. Get the total dropped frames count and free the resources.

g_print ("Total dropped %d\n", total_drop_count);

gstc_pipeline_stop (client, EXTRACTOR_NAME);

gstc_pipeline_stop (client, DUMMY_NAME);

gstc_pipeline_delete (client, EXTRACTOR_NAME);

gstc_pipeline_delete (client, DUMMY_NAME);

gstc_client_free (client);

out:

return ret;

}
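The switching logic in steps 9 and 10 can also be isolated as a small pure function, which makes it easy to test without a running pipeline. This is only a sketch of the same decisions; the Source and Event types and both function names are hypothetical and do not belong to libgstc.

```c
#include <assert.h>
#include <string.h>

typedef enum { SOURCE_EXTRACTOR, SOURCE_DUMMY } Source;
typedef enum { EVENT_STREAM_LOST, EVENT_STREAM_RECOVERED } Event;

/* Returns the source that ext_src should listen to after an event.
 * drop_count is only meaningful for EVENT_STREAM_LOST. */
Source
next_source (Source current, Event event, int drop_count, int max_dropped)
{
  assert (max_dropped > 0);

  switch (event) {
    case EVENT_STREAM_LOST:
      /* Switch to the dummy stream once the threshold is reached */
      if (drop_count >= max_dropped)
        return SOURCE_DUMMY;
      return current;
    case EVENT_STREAM_RECOVERED:
      return SOURCE_EXTRACTOR;
  }
  return current;
}

/* Name of the interpipesink to use as the listen-to value. */
const char *
source_node_name (Source source)
{
  return source == SOURCE_DUMMY ? "dummy_video" : "extractor";
}
```

The application would only call gstc_element_set when the returned source differs from the current one, which plays the same role as the listening_dummy flag in the example.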

Testing the Example

To test the example properly, follow these steps:

1. Run the GStreamer Daemon server:

gstd

2. Run the receiving pipeline on the client side:

PORT=12345

GST_DEBUG=ERROR,*seiextract*:MEMDUMP gst-launch-1.0 udpsrc port=${PORT} ! tsdemux name=demux demux. ! queue ! h265parse ! seiextract ! avdec_h265 ! autovideosink sync=false demux. ! queue ! 'meta/x-klv' ! metasink sync=false

3. In another terminal of the server side run the example:

./example

Now you should see on the screen of the receiving side that every time the extractor drops 5 or more consecutive frames the stream switches to the dummy video, and when the stream recovers it goes back to the extractor pipeline stream.

Sending Video with SEI Meta + Synchronous Meta + Asynchronous Meta Using MPEG-TS

GstExtractor provides the capability to send both synchronous and asynchronous KLV metadata. In this example we will show how to send video and synchronous KLV metadata from the extractor element, but also we will add a second source of metadata coming from a TCP port which will be asynchronous. With this example you will learn how to differentiate between the 2 types of metadata on the receiver side.

1. First we start the TCP asynchronous metadata source:

TCP_SERVER_IP="127.0.0.1"

TCP_PORT="3001"

gst-launch-1.0 metasrc is-live=true period=1 metadata=test_async ! meta/x-klv ! tcpserversink host=${TCP_SERVER_IP} port=${TCP_PORT} -v

2. Then we start the final receiver pipeline which will receive the video with the SEI metadata and both types of KLV metadata through MPEG-TS.

FINAL_CLIENT_PORT="12345"

GST_DEBUG=2,*seiextract*:9 gst-launch-1.0 udpsrc port=${FINAL_CLIENT_PORT} ! tsdemux name=demux demux. ! queue ! h265parse ! seiextract ! avdec_h265 ! autovideosink sync=false demux. ! queue ! meta/x-klv,stream_type=6 ! metasink sync=false async=false demux. ! queue ! meta/x-klv,stream_type=21 ! metasink sync=false async=false

Note that here the stream_type field of the caps identifies what kind of KLV metadata we expect to receive from each pad: stream_type=6 is used for asynchronous metadata, while stream_type=21 is used for synchronous metadata. In addition, the debug output of the seiextract element gives us a quick way to see the contents of the SEI metadata of the video buffers.

3. Finally to see everything working, run the intermediate pipeline, which is the one having the extractor element.

TCP_SERVER_IP="127.0.0.1"

TCP_PORT="3001"

FINAL_CLIENT_IP="192.168.0.19"

FINAL_CLIENT_PORT="12345"

INPUT="<path-to-yuy2-1280-722-file-with-metadata>"

gst-launch-1.0 mpegtsmux name=mux alignment=7 ! udpsink host=${FINAL_CLIENT_IP} port=${FINAL_CLIENT_PORT} filesrc location=${INPUT} ! videoparse width=1280 height=722 format=yuy2 framerate=30 ! extractor name=ext data-size=34 height-padding=2 ! queue ! videorate ! nvvidconv ! nvv4l2h265enc name=encoder bitrate=2000000 iframeinterval=300 vbv-size=33333 insert-sps-pps=true control-rate=constant_bitrate profile=Main num-B-Frames=0 ratecontrol-enable=true preset-level=UltraFastPreset EnableTwopassCBR=false maxperf-enable=true ! seiinject ! h265parse ! queue ! mux. ext.klv ! meta/x-klv,stream_type=21 ! queue ! mux. tcpclientsrc host=${TCP_SERVER_IP} port=${TCP_PORT} ! queue ! meta/x-klv,stream_type=6 ! mux.meta_54 -v

Note that this pipeline is in charge of muxing all the streams into the MPEG-TS container and it requires the TCP metadata source to be available when starting.

Once this pipeline is running you will see on the receiver side the SEI metadata messages as part of the GStreamer debug output, and the hexadecimal/ASCII dump of the KLV messages on the standard output, similar to this:

# Sync KLV

00000000 (0x7f335402f4d5): 73 6f 6d 65 20 6b 6c 76 20 30 31 32 30 00 some klv 0120.

# Async KLV

00000000 (0x7f335402f4f0): 74 65 73 74 5f 61 73 79 6e 63 00 test_async.

# SEI Metadata

0:01:04.052874550 6320 0x55ef8bcbaf70 LOG seiextract gstseiextract.c:386:gst_sei_extract_prepare_output_buffer:<seiextract0> prepare_output_buffer

0:01:04.052893552 6320 0x55ef8bcbaf70 MEMDUMP seiextract gstseiextract.c:357:gst_sei_extract_extract_h265_data:<seiextract0> ---------------------------------------------------------------------------

0:01:04.052900152 6320 0x55ef8bcbaf70 MEMDUMP seiextract gstseiextract.c:357:gst_sei_extract_extract_h265_data:<seiextract0> The extracted data is:

0:01:04.052905523 6320 0x55ef8bcbaf70 MEMDUMP seiextract gstseiextract.c:357:gst_sei_extract_extract_h265_data:<seiextract0> 00000000: 30 35 2d 31 31 2d 30 31 31 38 2d 31 35 3a 34 33 05-11-0118-15:43

0:01:04.052910196 6320 0x55ef8bcbaf70 MEMDUMP seiextract gstseiextract.c:357:gst_sei_extract_extract_h265_data:<seiextract0> 00000010: 3a 30 30 20 :00

0:01:04.052913389 6320 0x55ef8bcbaf70 MEMDUMP seiextract gstseiextract.c:357:gst_sei_extract_extract_h265_data:<seiextract0> ---------------------------------------------------------------------------
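An application that handles both KLV streams can classify them by the stream_type value used in the caps above. The sketch below only encodes the mapping described in this section (6 for asynchronous, 21 for synchronous); the KlvKind type and function name are hypothetical.

```c
#include <assert.h>

typedef enum { KLV_UNKNOWN, KLV_ASYNC, KLV_SYNC } KlvKind;

/* Maps the stream_type caps field to the kind of KLV metadata. */
KlvKind
klv_kind_from_stream_type (int stream_type)
{
  switch (stream_type) {
    case 6:                    /* 0x06: asynchronous KLV */
      return KLV_ASYNC;
    case 21:                   /* 0x15: synchronous KLV */
      return KLV_SYNC;
    default:
      return KLV_UNKNOWN;
  }
}
```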
