NVIDIA Jetson Reference Designs

QuickStart Jetson AI by RidgeRun

QuickStart Jetson AI is RidgeRun's open-source, Docker-based project. It is aimed at those looking for an out-of-the-box solution to test the Machine Learning and Artificial Intelligence capabilities of NVIDIA Jetson boards. It offers a portable, easy-to-use environment that can be run on any NVIDIA Jetson board. The project includes a set of example applications that exercise ML and AI frameworks such as DeepStream, OpenCV, GStreamer, and GstInference, among others.

Each example comes with its source code. The examples are implemented in different languages and with different frameworks (bash scripts, Python, C/C++, GStreamer, etc.). You can find the example closest to your use case and use it as a starting point for your demos and exploratory tests. Instructions for creating your own demos are provided so you can easily experiment with them.

The project is provided as a Docker image, so it is easy to set up on your system and you can start trying the examples right away. No additional system setup or package installation is required. The web service interface allows you to run the demos remotely from your cell phone or a desktop computer, as well as locally on your Jetson board.

How to Use

Setting up environment

Follow these steps to prepare the board for the provided Docker image:

- Install JetPack 4.5.1 on the Jetson board

- Install the SDK components, most importantly CUDA and the NVIDIA Container Runtime

- Use an ethernet connection to connect the board to the internet

- Connect a USB webcam to the board

Use the docker container

1. If you wish to use the image right away, you may do so with:

BOARD=nano

TAG=0.2.0

docker run -ti --gpus all --runtime nvidia --device /dev/video0 -v /tmp/argus_socket:/tmp/argus_socket -v /tmp/.X11-unix:/tmp/.X11-unix --net=host --restart=always ridgerun/jetsonai-${BOARD}:${TAG} bash

Set the BOARD variable to match your board. The project is currently available for the following boards:

- Xavier NX (BOARD=xaviernx)

- Nano (BOARD=nano)

After this, you will be using the image interactively through the command-line interface.

2. To create a docker container with the image provided in the repository, first you need to pull it. For example:

BOARD=nano

TAG=0.2.1

docker pull ridgerun/jetsonai-${BOARD}:${TAG}

3. Then you can create a docker container with that pulled image:

BOARD=nano

TAG=0.2.1

docker create -ti --gpus all --runtime nvidia --device /dev/video0 -v /tmp/argus_socket:/tmp/argus_socket -v /tmp/.X11-unix:/tmp/.X11-unix --net=host --restart=always ridgerun/jetsonai-${BOARD}:${TAG} bash

4. To start the container, first find its ID by listing all containers:

docker ps -a

Then start it with:

sudo docker start <CONTAINER_ID>

The container is now ready and you can try the Example Applications. To explore the source code of these applications, open a shell in the container:

sudo docker exec -ti <CONTAINER_ID> bash

cd /root/home/ridgerun/demo # This directory holds all the demos' source code!
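The image naming and the `docker create` invocation from the steps above can be sketched in Python, which may help when scripting the setup for more than one board. This is a minimal sketch, not part of the project: the helper names `image_name` and `docker_create_command` are hypothetical, and the list of supported boards is taken only from this page. The script prints the command rather than running it.

```python
import shlex

# Boards listed on this page; any other value is rejected.
SUPPORTED_BOARDS = ("nano", "xaviernx")


def image_name(board: str, tag: str) -> str:
    """Return the image reference ridgerun/jetsonai-<board>:<tag>."""
    if board not in SUPPORTED_BOARDS:
        raise ValueError(
            f"unsupported board {board!r}; expected one of {SUPPORTED_BOARDS}"
        )
    return f"ridgerun/jetsonai-{board}:{tag}"


def docker_create_command(board: str, tag: str,
                          video_device: str = "/dev/video0") -> list:
    """Build the same `docker create` argument list shown in step 3."""
    return [
        "docker", "create", "-ti",
        "--gpus", "all",
        "--runtime", "nvidia",
        "--device", video_device,
        "-v", "/tmp/argus_socket:/tmp/argus_socket",
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix",
        "--net=host",
        "--restart=always",
        image_name(board, tag),
        "bash",
    ]


if __name__ == "__main__":
    # Print the command instead of executing it, so the sketch is safe to try
    # on a machine without Docker.
    print(shlex.join(docker_create_command("nano", "0.2.1")))
```

To actually create the container, the returned list could be passed to `subprocess.run(..., check=True)` on the Jetson board.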

Example Applications Gallery

When you run the Docker container in your Jetson system, you will be able to access the demos gallery by entering the following URL in the Jetson browser:

http://<BOARD_IP>:8000

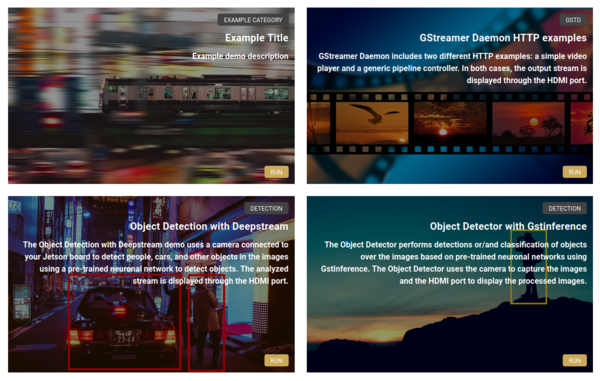

Note that BOARD_IP is the IP of the Jetson board. A web page with the demos gallery will show up as shown in the following figure:
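If the gallery does not load in the browser, a quick reachability check from another machine can help tell a network problem from a container problem. The following is a minimal sketch using only the Python standard library; the helper names `gallery_url` and `gallery_is_up` are hypothetical, and the IP address in the usage example is a placeholder for your own board's address.

```python
import urllib.request


def gallery_url(board_ip: str, port: int = 8000) -> str:
    """Build the gallery URL http://<BOARD_IP>:8000 described above."""
    return f"http://{board_ip}:{port}"


def gallery_is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    url = gallery_url("192.168.1.10")  # placeholder IP, replace with BOARD_IP
    state = "reachable" if gallery_is_up(url) else "not reachable"
    print(f"{url} is {state}")
```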

The application gallery provides the following demo examples:

- GstCUDA / OpenCV Filters Example: This example uses GstCUDA opencvfilter element to provide a set of image filters from the popular computer vision framework OpenCV. The following figure shows some of the available filters applied to the same image.

- GstCUDA / OpenCV Warp Example: This demo uses GstCUDA to perform image warping over an image using an efficient buffer handling. The resulting image will be rotated over the 3D space as shown in the following figure:

- GStreamer Daemon Controller and Player Example: This demo uses GStreamer Daemon to manipulate a set of DeepStream pipelines from its JavaScript web interface, allowing you to change their properties and states dynamically. It also lets you create and manipulate your own pipelines easily.

- DeepStream Object Detection Example: This example runs DeepStream from a Python application in order to perform object detection.

- GstInference Object Detection Example: This example uses GstInference to run an object detection task. The example shows how to run it from a simple bash script using GStreamer pipeline descriptions.

- TensorRT Python Example: Under development

- SLAM Example: Under development

How to create your own demo application

You can use QuickStart Jetson AI to build your own demo applications and use the provided framework to test them without worrying about the system setup. The easiest way to add a new demo is to use one of the existing examples as a starting point. For this reason, we provide several demos using different execution flavors, such as:

- Plain bash scripts using GStreamer

- C/C++ sample applications

- GStreamer Daemon (GSTD) based applications in C/C++, Python, and bash

And using different frameworks such as OpenCV, GStreamer, DeepStream, CUDA, etc.

You only need to find the application that matches your use case and use it as a reference for your own design.

Example applications are located at: <path to demos>

Every demo is composed of four main components as shown in Figure 4. The arrows show information dependency/use between components.

Information File: A JSON file containing all the meta-information about the demo and how to execute it. The JSON file should look as follows:

{

"title": "My demo title",

"category": "Detection",

"hero": "demo_image.jpg",

"description" : "This is a demo example",

"entrypoint" :{

"cmd": "python3",

"cmdargs": "examples/demo_directory/web_page.py",

"redirect": "8004"

}

}

- Title: The title of the demo application - this will be displayed on the main page.

- Category: A category for the application

- Hero: The image displayed on the main page for the demo application

- Description: A description of the application - this will be displayed on the main page.

- Entrypoint: This stores the information required to execute the demo application

Display Image: This is just an image file that will be used in the demo gallery as a background image.

Demo Web Page: This is the entry point for the demo page and it is meant to be either loaded when executed from the main page or executed in a standalone fashion. It brings up the basic web interface for the demo and the corresponding controls. The demo web page exposes the necessary controls to run the specific demo as shown in the following image:

Demo Source Code: This is the core of the demo, it contains the source code for the demo in the corresponding language or framework. It is meant to interface with the demo web page.
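Before adding a demo to the gallery, it can be useful to check that its information file is well formed. The sketch below loads the sample from this page and reports missing fields. It makes two assumptions that this page does not confirm: that every field shown in the sample is required, and that the gallery launches a demo by running `cmd` followed by `cmdargs`. The helper names `missing_fields` and `entrypoint_command` are hypothetical.

```python
import json
import shlex

# Assumption: every field shown in the sample information file is required.
REQUIRED_KEYS = ("title", "category", "hero", "description", "entrypoint")
REQUIRED_ENTRYPOINT_KEYS = ("cmd", "cmdargs", "redirect")


def missing_fields(info: dict) -> list:
    """Return the names of the expected fields that are absent from info."""
    missing = [key for key in REQUIRED_KEYS if key not in info]
    entrypoint = info.get("entrypoint")
    if isinstance(entrypoint, dict):
        missing += [
            f"entrypoint.{key}"
            for key in REQUIRED_ENTRYPOINT_KEYS
            if key not in entrypoint
        ]
    return missing


def entrypoint_command(info: dict) -> list:
    """Assumed launch command: cmd followed by the split cmdargs string."""
    entrypoint = info["entrypoint"]
    return [entrypoint["cmd"], *shlex.split(entrypoint["cmdargs"])]


# The sample information file from this page, with the outer brace closed.
SAMPLE = json.loads("""
{
    "title": "My demo title",
    "category": "Detection",
    "hero": "demo_image.jpg",
    "description": "This is a demo example",
    "entrypoint": {
        "cmd": "python3",
        "cmdargs": "examples/demo_directory/web_page.py",
        "redirect": "8004"
    }
}
""")

if __name__ == "__main__":
    print("missing fields:", missing_fields(SAMPLE))
    print("launch command:", entrypoint_command(SAMPLE))
```

`json.loads` also rejects syntax errors such as an unbalanced brace, which is an easy mistake to make when editing the file by hand.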

Once you find the demo that best matches your target application, copy the demo folder into the same directory and rename the copy accordingly. The final layout should look as follows, assuming your new demo is named my_example_app:

examples/

├── my_example_app

│ ├── example.jpg

│ ├── example.py

│ ├── web_page.py

│ └── example.json

├── gstcuda_opencv_filters

│ ├── gstcuda_opencv_filters.jpg

│ ├── gstcuda_opencv_filters.json

│ ├── gstcuda_opencv_filters.py

│ └── web_page.py

├── gstcuda_opencv_warp

│ ├── gstcuda_opencv_warp.jpg

│ ├── gstcuda_opencv_warp.json

│ ├── gstcuda_opencv_warp.py

│ └── web_page.py

└── object_detection_with_deepstream

    ├── deepstream_camera_detection.py

    ├── object_detection_with_deepstream.jpg

    ├── object_detection_with_deepstream.json

    └── web_page.py
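The copy-and-rename step can also be scripted. The following is a minimal sketch, assuming the demos live in an `examples/` directory laid out as in the tree above and that `cmdargs` contains the demo folder name, as in the sample information file. The helper name `clone_demo` is hypothetical and not part of the project.

```python
import json
import shutil
from pathlib import Path


def clone_demo(examples_dir: Path, source_name: str, new_name: str,
               title: str) -> Path:
    """Copy an existing demo folder under a new name and retitle it."""
    source = examples_dir / source_name
    target = examples_dir / new_name
    # copytree raises FileExistsError if the target folder already exists,
    # so an existing demo is never overwritten.
    shutil.copytree(source, target)

    # Update every information file found in the new folder.
    for info_path in target.glob("*.json"):
        info = json.loads(info_path.read_text())
        info["title"] = title
        entrypoint = info.get("entrypoint")
        if isinstance(entrypoint, dict) and "cmdargs" in entrypoint:
            # Assumption: cmdargs references the demo folder by name.
            entrypoint["cmdargs"] = entrypoint["cmdargs"].replace(
                f"/{source_name}/", f"/{new_name}/"
            )
        info_path.write_text(json.dumps(info, indent=4) + "\n")
    return target


if __name__ == "__main__":
    new_demo = clone_demo(Path("examples"), "gstcuda_opencv_warp",
                          "my_example_app", "My demo title")
    print("created", new_demo)
```

The copied files keep their original names; rename them by hand if you want them to match the new demo, and update the `hero` field if you rename the image.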

After this, feel free to modify the demo files to properly match your application. The demo will automatically appear on the main web page after reboot as follows:

Licensing

These reference designs are dual-licensed, with both licenses being RidgeRun proprietary licenses. The development license allows you to use the code for internal and demonstration purposes at no cost. If you wish to use the code in a product you are selling, or in other production environments, please contact us to acquire a product license.

RidgeRun Resources

Visit our Main Website for the RidgeRun Products and Online Store. RidgeRun Engineering information is available in the RidgeRun Professional Services, RidgeRun Subscription Model and Client Engagement Process wiki pages. Please email support@ridgerun.com for technical questions and contactus@ridgerun.com for other queries. Contact details for sponsoring the RidgeRun GStreamer projects are available on the Sponsor Projects page.