CUDA ISP for NVIDIA Jetson: GStreamer usage

Latest revision as of 13:53, 14 June 2023


General

CUDA ISP provides GStreamer plugins that make it easy to use the library in GStreamer pipelines. It includes three GStreamer elements for image signal processing:

- cudashift

- cudadebayer

- cudaawb

The following sections show examples of how to use them. For more information about the algorithms these elements apply, see CUDA ISP Basics. Note that v4l2src must be patched to capture bayer10 images. For instructions, see Apply patch to v4l2src.

GStreamer Elements

cudashift

This element applies the shifting algorithm. For more information about its properties and capabilities, see cudashift element.

- Choosing the shift value

On Jetson platforms, the capture subsystem (Video Input) uses particular formats to pack the RAW data received from the CSI channels, which changes how the pixel bits are organized in the captured image. Depending on the platform, the MSB of each pixel is found at bit 13, 14, or 15, and the lower bits are filled with replicated MSBs. Because the most significant bits have far more impact on the image than the least significant ones, choose the shift value that places the 8 most significant data bits into the lower 8 bit positions.

For example, when capturing a RAW10 image of a Jetson Xavier, the information will be organized as shown in the next image:

According to the previous image, we have the data (D9-D0) from bit 15 to bit 6 and have replicated bits from bit 5 to bit 0. In this case, the proper shift value would be 8. In practice, the best image quality may be obtained using different shift values than theoretical ones.

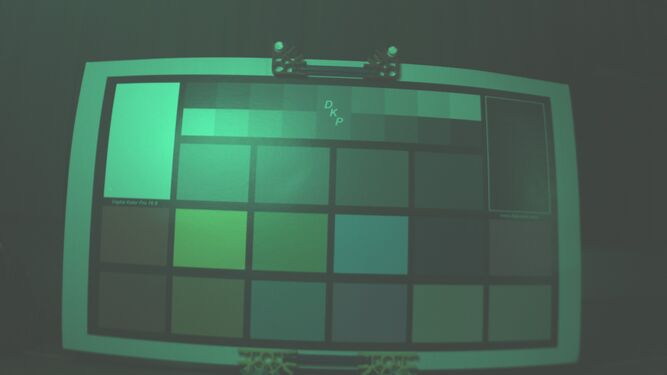
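The shift rule for the Xavier RAW10 example can be checked with shell arithmetic. This is a minimal sketch under stated assumptions: it treats the shift as a logical right shift and assumes the replicated low bits of the Xavier word are copies of D9-D4. The function names are hypothetical. The script prints the theoretical shift for an MSB at bit 13, 14, or 15, and confirms that shifting a packed Xavier word by 8 leaves D9-D2 in the output byte.

```bash
# Sketch of the shift arithmetic. Assumptions (not from the cudashift docs):
# the shift is a logical right shift, and the replicated low bits of the
# Xavier RAW10 word are copies of D9..D4. Function names are hypothetical.

# theoretical_shift MSB_POS: right shift that moves the data MSB to bit 7.
theoretical_shift() {
  echo $(( $1 - 7 ))
}

# shift_pixel WORD SHIFT: the low 8 bits after shifting. With the theoretical
# shift the result already fits in 8 bits, so the mask changes nothing here.
shift_pixel() {
  echo $(( ($1 >> $2) & 0xFF ))
}

for msb in 13 14 15; do
  echo "MSB at bit $msb -> shift $(theoretical_shift "$msb")"
done

# Xavier RAW10: D9..D0 in bits 15..6, replicated bits in bits 5..0.
raw10=$(( 0x2B5 ))                          # sample 10-bit pixel value
packed=$(( (raw10 << 6) | (raw10 >> 4) ))   # 0xAD6B
printf 'packed=0x%04X  out=%d  D9..D2=%d\n' \
  "$packed" "$(shift_pixel "$packed" 8)" "$(( raw10 >> 2 ))"
```

As the text notes, this only gives the theoretical value. The shift that produces the best-looking image on a real sensor may differ.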

The following example shows the same scene captured on a Jetson Nano with a shift value of 0 (left) and a shift value of 2 (right):

A higher shift value darkens the image because it moves the pixel data bits to less significant positions, which lowers the resulting pixel value. The green tint in these images is a byproduct of the debayering process and can be corrected with white balancing.

The following pipeline receives a bayer 10 image at 4K resolution from a camera sensor, applies a shift of 5, and outputs a bayer 8 image.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, format=rggb, width=3840, height=2160' ! cudashift shift=5 ! fakesink

cudadebayer

This element applies the debayering algorithm. For more information about its properties and capabilities, see cudadebayer element.

Next, you will see examples of how to use the cudadebayer element.

The following pipeline receives a bayer 10 image with 4K resolution from a camera sensor and outputs an RGB image.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudadebayer ! fakesink

The next pipeline receives a bayer 10 image at 4K resolution and outputs an I420 image. A caps filter after cudadebayer selects the output format, which is I420 in this case.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudadebayer ! 'video/x-raw, format=I420' ! fakesink

The next pipeline receives a bayer 10 image at 4K resolution, applies a shift of 5 through the cudadebayer shift property, and outputs an RGB image.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudadebayer shift=5 ! 'video/x-raw, format=RGB' ! fakesink
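To try the cudadebayer examples with different output formats, you can generate the pipeline from a small script. The helper below is hypothetical and is not part of CUDA ISP. It uses only the caps and properties shown in this section. By default it prints the command, and it runs the command only when RUN=1 is set, so you can try it on a machine without a camera. The caps are written without spaces so that the printed command can be pasted directly into a shell.

```bash
# Hypothetical helper (not part of CUDA ISP): builds the cudadebayer test
# pipeline for a chosen output format and an optional cudadebayer shift value.
# It prints the command unless RUN=1 is set.
build_debayer_pipeline() {
  format=${1:-RGB}               # output format requested by the caps filter
  shift_prop=${2:+shift=$2}      # empty unless a shift value is given
  set -- gst-launch-1.0 -ve v4l2src io-mode=userptr \
    '!' 'video/x-bayer,bpp=10,width=3840,height=2160' \
    '!' cudadebayer $shift_prop \
    '!' "video/x-raw,format=$format" \
    '!' fakesink
  if [ "${RUN:-0}" = 1 ]; then
    "$@"                         # run the pipeline on the Jetson
  else
    echo "$*"                    # dry run: print the command
  fi
}

build_debayer_pipeline I420      # equivalent to the I420 example
build_debayer_pipeline RGB 5     # equivalent to the shift example
```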

cudaawb

This element applies the auto white balance algorithm. Next, you will see examples of how to use the cudaawb element.

The following pipeline receives a 4K RGB test image from videotestsrc, applies auto white balance with the default Gray World algorithm, and outputs an RGB image.

gst-launch-1.0 videotestsrc ! 'video/x-raw, width=3840,height=2160' ! cudaawb ! fakesink
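The Gray World idea assumes that the scene averages to gray. Each channel is therefore scaled until its mean matches the average of all three channel means. The awk sketch below implements that textbook version on a handful of pixels. It illustrates the principle only and is not the cudaawb CUDA kernel, whose exact gain computation and clipping may differ.

```bash
# Textbook Gray World sketch (an illustration, not the cudaawb kernel):
# scale each channel so its mean matches the average of the three channel
# means. Input: one "R G B" pixel (0..255) per line on stdin.
# Output: the per-channel gains, then the balanced pixels.
gray_world() {
  awk '
    function gain(m) { return (m > 0) ? gray / m : 1 }   # avoid dividing by 0
    function clamp(x) { x = int(x + 0.5); return (x > 255) ? 255 : ((x < 0) ? 0 : x) }
    { r[NR] = $1; g[NR] = $2; b[NR] = $3; sr += $1; sg += $2; sb += $3 }
    END {
      n = NR
      if (n == 0) exit
      mr = sr / n; mg = sg / n; mb = sb / n
      gray = (mr + mg + mb) / 3
      kr = gain(mr); kg = gain(mg); kb = gain(mb)
      printf "gains %.3f %.3f %.3f\n", kr, kg, kb
      for (i = 1; i <= n; i++)
        printf "%d %d %d\n", clamp(r[i] * kr), clamp(g[i] * kg), clamp(b[i] * kb)
    }'
}

# A greenish two-pixel "image": the green mean (150) is well above red (80) and blue (70).
printf '%s\n' "60 120 60" "100 180 80" | gray_world
```

After balancing, all three channel means equal the original gray level of 100, which removes the green cast in this toy input.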

The following pipeline receives a bayer10 image at 4K resolution from a camera sensor, applies auto white balance with the Gray World algorithm (algorithm=1), and outputs an RGB image. Because cudaawb requires RGB input, a camera pipeline must include cudadebayer before cudaawb to convert the Bayer frames into RGB.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudadebayer ! cudaawb algorithm=1 ! 'video/x-raw, format=RGB' ! fakesink

The following pipeline receives a bayer10 image at 4K resolution from a camera sensor, applies debayering and auto white balance with the Histogram Stretch algorithm (algorithm=2), and outputs an I420 image.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudadebayer ! cudaawb algorithm=2 confidence=0.85 ! 'video/x-raw, format=I420' ! fakesink

The following images show the auto white balance element in action. With the cudaawb algorithm=1 option, the element uses the Gray World white balancing algorithm, which produced the image on the left. With the cudaawb algorithm=2 confidence=0.85 option, it uses the Histogram Stretch white balancing algorithm. In this case, the confidence option sets the confidence interval of the histogram that is stretched. A value closer to 1 has a smaller effect on the image and loses less data. A value closer to 0 has a larger effect and loses more data. The image on the right was produced with a value of 0.85:
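A per-channel histogram stretch can be sketched as follows. This sketch assumes that confidence is the central fraction of the channel's sorted values that is kept. The range covering that fraction is mapped linearly onto 0..255, and values outside it are clipped, which is the data loss described above. It illustrates the general technique and is not the cudaawb implementation. The helper names are hypothetical.

```bash
# Generic histogram-stretch sketch for ONE channel (assumed meaning of
# "confidence"; not the cudaawb implementation): keep the central CONFIDENCE
# fraction of the sorted values, map that range linearly onto 0..255 and
# clip the values outside it.
# Usage: stretch_channel CONFIDENCE < values   (one 0..255 value per line)
stretch_channel() {
  awk -v c="$1" '
    { v[NR] = $1 + 0; s[NR] = $1 + 0 }
    END {
      n = NR
      if (n == 0) exit
      for (i = 2; i <= n; i++) {            # insertion sort of the copy s[]
        x = s[i]; j = i - 1
        while (j >= 1 && s[j] > x) { s[j + 1] = s[j]; j-- }
        s[j + 1] = x
      }
      drop = int((1 - c) / 2 * n)           # samples clipped at each end
      lo = s[1 + drop]; hi = s[n - drop]
      printf "range %d..%d\n", lo, hi
      for (i = 1; i <= n; i++) {
        y = (hi > lo) ? (v[i] - lo) * 255 / (hi - lo) : v[i]
        y = int(y + 0.5)
        if (y < 0) y = 0
        if (y > 255) y = 255
        sep = (i < n) ? " " : "\n"
        printf "%d%s", y, sep
      }
    }'
}

# Twenty values: a narrow 60..77 band plus two outliers (5 and 250).
values() { awk 'BEGIN { print 5; for (i = 60; i <= 77; i++) print i; print 250 }'; }
values | stretch_channel 1      # range is the full min..max
values | stretch_channel 0.85   # the outliers are clipped
```

With confidence=1, the stretched range is the full 5..250, so the values barely change. With 0.85, the two outliers are clipped and the 60..77 band is spread over the whole 0..255 range. This matches the trade-off described above: a stronger effect in exchange for more data loss.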

Using the three elements

The following pipeline chains all three elements. It receives a bayer 10 image at 4K resolution and outputs an RGB image.

gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudashift shift=5 ! cudadebayer ! cudaawb ! fakesink

Use cases

In this section, you can find some use cases for the CUDA ISP GStreamer plugins. Both examples were tested on a Jetson Xavier NX.

- Recording

The following pipeline records video from a camera sensor, applies all three algorithms, and saves the result to an MP4 file.

GST_DEBUG=WARNING,*cudaawb*:LOG,*cudadebayer*:LOG gst-launch-1.0 -ve v4l2src io-mode=userptr ! 'video/x-bayer, bpp=10, width=3840, height=2160' ! cudashift shift=5 ! cudadebayer ! cudaawb algorithm=2 confidence=0.80 ! 'video/x-raw,format=I420' ! nvvidconv ! nvv4l2h264enc ! h264parse ! qtmux ! filesink location=test.mp4 -e

- Streaming

The following pipeline is an example of a streaming application that uses the three elements. It relies on GstRtspSink, another RidgeRun product for high-performance streaming.

GST_DEBUG=*:ERROR gst-launch-1.0 v4l2src do-timestamp=1 io-mode=userptr ! "video/x-bayer,bpp=10,width=3840,height=2160,framerate=30/1" ! cudashift shift=7 ! queue ! cudadebayer ! queue ! cudaawb algorithm=2 confidence=0.85 ! queue ! "video/x-raw,format=I420" ! nvvidconv ! queue ! nvv4l2h264enc insert-sps-pps=true idrinterval=30 ! "video/x-h264,mapping=/stream1" ! rtspsink service=5000 -vvv
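To view the stream, a client connects to the RTSP URL formed by the rtspsink service port and the mapping in the caps (rtsp://<board IP>:5000/stream1). The helper below only prints a generic receiver pipeline built from standard GStreamer elements: rtspsrc, rtph264depay, h264parse, avdec_h264 (from gst-libav), videoconvert, and autovideosink. The helper name and the default address 192.168.0.10 are placeholders, so set BOARD_IP to your Jetson's address.

```bash
# Hypothetical helper: prints a receiver command for the stream published by
# the rtspsink pipeline (service=5000, mapping=/stream1).
# 192.168.0.10 is only a placeholder; set BOARD_IP to the Jetson's address.
rtsp_client_cmd() {
  host=$1
  port=${2:-5000}          # rtspsink service
  mount=${3:-/stream1}     # mapping from the video/x-h264 caps
  printf 'gst-launch-1.0 rtspsrc location=rtsp://%s:%s%s ! rtph264depay ! h264parse ! avdec_h264 ! videoconvert ! autovideosink\n' \
    "$host" "$port" "$mount"
}

rtsp_client_cmd "${BOARD_IP:-192.168.0.10}"
```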