GstInference with TinyYoloV3 architecture

Make sure you also check GstInference's companion project: R2Inference

Description

TinyYOLO (also called Tiny Darknet) is the lightweight version of the YOLO (You Only Look Once) real-time object detection deep neural network. TinyYOLO is smaller and faster than YOLO, while still being more accurate than other lightweight models. The following table compares YOLO (Darknet), AlexNet, SqueezeNet, and TinyYOLO (Tiny Darknet).

| Model | Top-1 (%) | Top-5 (%) | Ops | Size |
|---|---|---|---|---|
| AlexNet | 57.0 | 80.3 | 2.27 Bn | 238 MB |
| Darknet | 61.1 | 83.0 | 0.81 Bn | 28 MB |
| SqueezeNet | 57.5 | 80.3 | 2.17 Bn | 4.8 MB |
| Tiny Darknet | 58.7 | 81.7 | 0.98 Bn | 4.0 MB |

TinyYOLO results are also very similar to YOLO's. The following image compares both results:

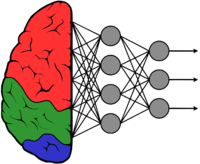

Architecture

TinyYoloV3 uses a lighter model with fewer layers than YoloV3, but it keeps the same input image size of 416x416.

GStreamer Plugin

The GStreamer plugin uses the pre-process and post-process described in the original paper. Please take into consideration that not all deep neural networks are trained the same even if they use the same model architecture. If the model is trained differently, details like label ordering, input dimensions, and color normalization can change.

This element is based on this TinyYoloV3 implementation, which uses the anchors from the paper to train the model and includes a section that normalizes the model outputs. The pre-trained models used to test the element can be downloaded from our R2I Model Zoo for the different frameworks.

Pre-process

Input parameters:

- Input size: 416 x 416

- Format: RGB

TinyYoloV3 works with float32 data, so the pre-process stage consists of taking the input image and converting its pixel values to floats.
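The conversion described above can be sketched in a few lines of Python. This is only an illustration, not the element's actual code: the function name `rgb_bytes_to_float32` and the `scale` parameter are hypothetical, and whether a model expects the 0..255 range or a normalized range depends on how it was trained.

```python
from array import array

INPUT_WIDTH = 416
INPUT_HEIGHT = 416
CHANNELS = 3  # RGB


def rgb_bytes_to_float32(frame: bytes, scale: float = 1.0) -> array:
    """Convert packed 8-bit RGB pixels into a flat float32 buffer.

    `scale` is a hypothetical knob (assumption): 1.0 keeps the 0..255
    range, while 1/255 would map pixel values to 0..1.
    """
    expected = INPUT_WIDTH * INPUT_HEIGHT * CHANNELS
    if len(frame) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(frame)}")
    # Typecode "f" stores each element as a 4-byte C float.
    return array("f", (value * scale for value in frame))
```

A frame that is not exactly 416 x 416 x 3 bytes is rejected, which mirrors the fixed input size listed in the input parameters.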

Post-process

Output parameters:

- Grid dimensions: 13x13

- Grid cell dimensions: 32x32 px

- Number of classes: 80

- Number of boxes per cell: 15

- Box dim: 4 [x_top_left, y_top, x_bottom_right, y_bottom]

- Objectness threshold: 0.50 (configurable)

- Probability threshold: 0.50 (configurable)

- Intersection over union threshold: 0.30 (configurable)

The post-process of the TinyYoloV3 network is considerably more complex than the pre-process:

- The output tensor has 2535 boxes per image: 13 x 13 x 15 (grid dimensions x boxes per cell).

- Each box has 85 values in the following order: 4 coordinates (x_top_left, y_top, x_bottom_right, y_bottom), 1 objectness score, and 80 class scores.
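To make the layout above concrete, the following Python sketch derives the box and value counts from the listed parameters and splits one box out of a flattened output. It assumes the 85 values of each box are stored contiguously, one box after another; that memory layout and the helper name `split_box` are assumptions for illustration, not something the element documents.

```python
GRID_SIZE = 13
BOXES_PER_CELL = 15
NUM_CLASSES = 80
BOX_DIM = 4

# 4 coordinates + 1 objectness score + 80 class scores = 85
VALUES_PER_BOX = BOX_DIM + 1 + NUM_CLASSES
# 13 x 13 x 15 = 2535
TOTAL_BOXES = GRID_SIZE * GRID_SIZE * BOXES_PER_CELL


def split_box(output, index):
    """Return (coords, objectness, class_scores) for box `index`.

    Assumes `output` is a flat sequence with each box's 85 values
    stored back to back (hypothetical layout).
    """
    if not 0 <= index < TOTAL_BOXES:
        raise IndexError(f"box index {index} out of range")
    start = index * VALUES_PER_BOX
    coords = tuple(output[start:start + BOX_DIM])
    objectness = output[start + BOX_DIM]
    class_scores = list(output[start + BOX_DIM + 1:start + VALUES_PER_BOX])
    return coords, objectness, class_scores
```

With these constants, a full output holds 2535 x 85 = 215475 float values.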

The post-process considers only boxes whose scores surpass the user-defined objectness threshold (0.50, configurable) and class probability threshold (0.50, configurable). It then extracts, from the final part of the array, the boxes corresponding to the probabilities above the thresholds. An example result can be seen in the following image:
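As a rough illustration of the thresholding step, the sketch below keeps a box only when both its objectness score and its best class score exceed their thresholds. The function name `filter_boxes` and the result dictionary are hypothetical, and keeping only the single best class per box is an assumption made for this example.

```python
def filter_boxes(boxes, objectness_threshold=0.5, probability_threshold=0.5):
    """Keep boxes that pass both thresholds.

    `boxes` is an iterable of (coords, objectness, class_scores)
    tuples. Only the highest-scoring class of each box is considered
    (assumption for this sketch).
    """
    detections = []
    for coords, objectness, class_scores in boxes:
        if objectness <= objectness_threshold or not class_scores:
            continue
        # Index of the class with the highest score.
        best_class = max(range(len(class_scores)), key=class_scores.__getitem__)
        best_score = class_scores[best_class]
        if best_score <= probability_threshold:
            continue
        detections.append({
            "box": coords,
            "class_id": best_class,
            "probability": best_score,
            "objectness": objectness,
        })
    return detections
```

Lowering either threshold lets more candidate boxes through, which leaves more work for the duplicate-removal step.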

Next, an algorithm is needed to remove or merge duplicated boxes. We use the intersection over union (IoU) metric: two boxes whose IoU surpasses a configurable threshold (0.30) are considered the same object. We then keep the box with the highest probability and remove the other one. The final result can be seen in the following figure:
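The duplicate-removal step can be sketched as a simple greedy suppression over the surviving boxes. This is a minimal illustration under stated assumptions, not the element's implementation: the helper names `iou` and `remove_duplicates` are hypothetical, and the sketch compares all boxes regardless of class.

```python
def iou(a, b):
    """Intersection over union of two boxes.

    Boxes are (x_top_left, y_top, x_bottom_right, y_bottom).
    """
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def remove_duplicates(detections, iou_threshold=0.3):
    """Greedy suppression over (box, probability) pairs.

    Boxes are visited from highest to lowest probability; a box is
    dropped when its IoU with an already kept box exceeds the threshold.
    """
    kept = []
    ordered = sorted(detections, key=lambda d: d[1], reverse=True)
    for box, probability in ordered:
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, probability))
    return kept
```

For example, two 10x10 boxes offset by one pixel in each direction overlap with an IoU of 81/119, well above 0.30, so only the one with the higher probability survives.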

Examples

Please refer to the TinyYOLO section on the examples page.