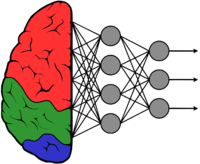

GstInference with TensorFlow backend

Make sure you also check GstInference's companion project: R2Inference

TensorFlow™ is an open-source software library for high-performance numerical computation. Its flexible architecture allows easy deployment of computation across a variety of platforms (CPUs, GPUs, TPUs). It was originally developed by researchers and engineers from the Google Brain team within Google’s AI organization. TensorFlow is widely used to develop machine learning and deep learning applications.

To use the TensorFlow backend in GstInference, configure R2Inference with the flag -Denable-tensorflow=true and set the property backend=tensorflow on the GstInference plugins. GstInference depends on the C/C++ API of TensorFlow.

Installation

GstInference depends on the C/C++ API of TensorFlow. The installation process consists of downloading the library, extracting it, and linking against it. You can install the TensorFlow C/C++ API for x86 by following the official TensorFlow installation guide.

Please note that currently only TensorFlow V1 is supported.
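The download, extract, and link steps can be sketched as follows. This is a minimal sketch, not the project's official procedure: the version number 1.15.0, the CPU-only x86_64 build, the download URL pattern (taken from the layout used in the official TensorFlow guide), the /usr/local prefix, and the function name install_libtensorflow are all assumptions, so check them against the official guide before running.

```shell
# Assumption: a TensorFlow V1 release of the C library; adjust as needed.
TF_VERSION="1.15.0"
# Assumption: CPU-only Linux x86_64 build, named as in the official guide.
TF_TARBALL="libtensorflow-cpu-linux-x86_64-${TF_VERSION}.tar.gz"
TF_URL="https://storage.googleapis.com/tensorflow/libtensorflow/${TF_TARBALL}"

# Hypothetical helper: download, extract into /usr/local, refresh linker cache.
install_libtensorflow() {
  wget "$TF_URL" &&
  sudo tar -C /usr/local -xzf "$TF_TARBALL" &&
  sudo ldconfig
}

echo "Library archive: $TF_URL"
```

Sourcing the snippet only defines the variables and the helper; nothing is downloaded until install_libtensorflow is called explicitly.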

The TensorFlow Python API and utilities can be installed with pip, but they are not needed by GstInference.

Enabling the backend

To enable TensorFlow as a backend for GstInference, you need to install R2Inference with TensorFlow support. To do this, pass the option -Denable-tensorflow=true when configuring R2Inference, following this wiki.
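As an illustration of the configure step, the sketch below assumes that R2Inference is built with Meson and Ninja (suggested by the -D option style) and that the TensorFlow C library is already installed. The helper name build_r2inference and the build directory name are hypothetical; only the -Denable-tensorflow=true option comes from this page.

```shell
# Hypothetical helper: configure, build, and install R2Inference with the
# TensorFlow backend enabled. Takes the R2Inference source directory as $1.
build_r2inference() {
  if [ ! -f "$1/meson.build" ]; then
    echo "error: $1 does not look like a Meson project" >&2
    return 1
  fi
  (
    cd "$1" &&
    # Enable the TensorFlow backend at configure time.
    meson build -Denable-tensorflow=true &&
    ninja -C build &&
    sudo ninja -C build install
  )
}
```

A typical call would be build_r2inference path/to/r2inference, where the path is wherever the R2Inference sources were cloned.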

Generating a Graph

GstInference uses TensorFlow's frozen models for inference. You can generate a frozen model from a checkpoint file or from a saved session. For examples of how to generate a graph, please check the Generating a model for R2I section on the R2Inference wiki.

Properties

TensorFlow Lite API Reference and Create an op have full documentation of the TensorFlow C/C++ API. GstInference uses only the C/C++ API of TensorFlow, and R2Inference takes care of devices, loading the models, and setting the names of the layers used as input and output layers.

The following syntax is used to change backend options on Gst-Inference plugins:

backend::<property>

For example, to set the TensorFlow backend properties input-layer and output-layer of the googlenet plugin, run the pipeline like this:

gst-launch-1.0 \
googlenet name=net model-location=graph_googlenet.pb backend::input-layer=input backend::output-layer=InceptionV4/Logits/Predictions backend=tensorflow \
videotestsrc ! tee name=t \
t. ! queue ! videoconvert ! videoscale ! net.sink_model \
t. ! queue ! net.sink_bypass \
net.src_bypass ! fakesink
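When the model or layer names change often, the same pipeline can be assembled from shell variables. The following is a minimal sketch: the variable names are hypothetical, the values are the ones from the example above, and the guard simply skips the launch when the model file or gst-launch-1.0 is missing.

```shell
# Hypothetical variable names; values taken from the example pipeline.
MODEL_LOCATION="graph_googlenet.pb"
INPUT_LAYER="input"
OUTPUT_LAYER="InceptionV4/Logits/Predictions"

# Backend options follow the backend::<property> syntax.
BACKEND_OPTS="backend=tensorflow backend::input-layer=${INPUT_LAYER} backend::output-layer=${OUTPUT_LAYER}"

# Inside double quotes, backslash-newline joins the lines into one description.
PIPELINE="googlenet name=net model-location=${MODEL_LOCATION} ${BACKEND_OPTS} \
videotestsrc ! tee name=t \
t. ! queue ! videoconvert ! videoscale ! net.sink_model \
t. ! queue ! net.sink_bypass \
net.src_bypass ! fakesink"

if [ -f "$MODEL_LOCATION" ] && command -v gst-launch-1.0 >/dev/null 2>&1; then
  # Left unquoted on purpose so the shell splits the description into arguments.
  gst-launch-1.0 $PIPELINE
else
  echo "Skipping launch: model file or gst-launch-1.0 not found" >&2
fi
```

Keeping the backend options in their own variable makes it easy to swap layer names for a different frozen graph without touching the rest of the pipeline.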

To learn more about the Tensorflow C API, please check the Tensorflow API section on the R2Inference sub wiki.

Tools

The TensorFlow Python API installation includes a tool named TensorBoard, which can be used to visualize a model. For examples and a more complete description, please check the Tools section on the R2Inference wiki.