Evaluating the Stitcher


Requesting the Evaluation Binary

RidgeRun can provide an evaluation binary of the Stitcher to help you test it. To request an evaluation binary for a specific architecture, please contact us and provide the following information:

- Platform (e.g., NVIDIA Jetson TX1/TX2, Xavier, Nano, or x86)

- JetPack version

- The number of cameras to be used with the stitcher

- The kind of lenses to be used and whether or not you need to apply distortion correction

- Input resolutions and frame rates

- Expected output resolution and frame rate

- Latency requirements
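Some of the platform details in the list above can be read directly from the target board. The following is a minimal sketch, not an official RidgeRun tool. It assumes a Linux system and assumes that, on Jetson boards, the L4T release is recorded in /etc/nv_tegra_release (the L4T release can then be matched to a JetPack version).

```shell
# Sketch: gather platform details to include in an evaluation request.
arch="$(uname -m)"      # for example aarch64 on Jetson, x86_64 on a PC
kernel="$(uname -r)"

echo "Architecture: $arch"
echo "Kernel:       $kernel"

# Assumption: Jetson images store the L4T release in this file.
if [ -r /etc/nv_tegra_release ]; then
  echo "L4T release:  $(head -n 1 /etc/nv_tegra_release)"
else
  echo "L4T release:  /etc/nv_tegra_release not found"
fi
```

The camera count, lens type, resolutions, frame rates, and latency requirements still have to be described by hand.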

Features of the Evaluation

To help you test our Stitcher, RidgeRun can provide an evaluation version of the plug-in.

The following table summarizes the features available in the professional and evaluation versions of the element.

| Feature | Professional | Evaluation |
|---|---|---|
| Stitcher Examples | Y | Y |
| GstStitcher Element | Y | Y |
| Unlimited Processing Time | Y | N (1) |
| Source Code | Y | N |

(1) The evaluation version will limit the processing to a maximum of 1800 frames.
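To get a feel for what the 1800-frame limit means in practice, the sketch below converts it into seconds of processing at a given frame rate. The helper name `eval_seconds` and the frame rates are illustrative, and the calculation assumes the limit counts the frames processed in a single pipeline run.

```shell
# Sketch: how long the evaluation version runs before reaching its frame limit.
EVAL_FRAME_LIMIT=1800   # taken from footnote (1) above

eval_seconds() {
  # $1: frame rate in frames per second (integer)
  echo $(( EVAL_FRAME_LIMIT / $1 ))
}

for fps in 30 60; do
  echo "${fps} fps -> $(eval_seconds "$fps") s"
done
```

At 30 fps this gives 60 seconds, and at 60 fps it gives 30 seconds.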

Evaluating the Stitcher

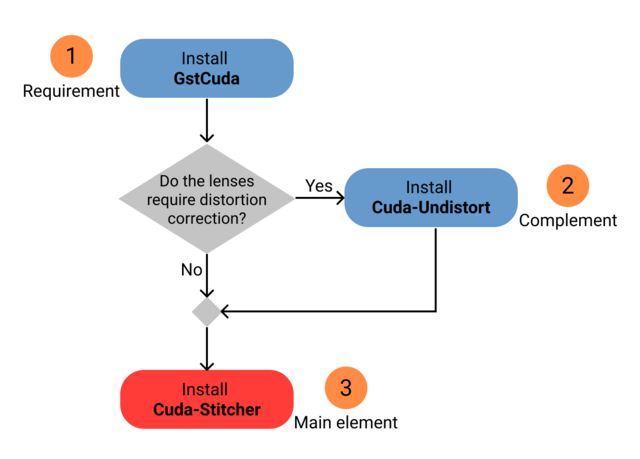

The stitcher requires the GstCUDA plugin in order to work properly and, depending on your setup, may also require the cuda-undistort element, as represented in the accompanying diagram.

RidgeRun provides three evaluation tarballs, one for each of these elements. Install them in the order used in the next section: GstCUDA first, then Cuda Undistort (only if needed), and finally Cuda Stitcher.

For a better experience, we recommend that you download and follow the Stitcher evaluation guide.

Install and test the evaluation binaries

GstCUDA

- If you already own a professional version of this plugin, skip to the Cuda Undistort element below.

- To install the evaluation binaries, follow the instructions in the GstCUDA installation guide.

Cuda Undistort

- This element is only required if the lenses you are using need distortion correction. If that is not the case, skip to the Stitcher element below.

- In order to install the evaluation binaries for this element, follow the Undistort installation guide.

Cuda Stitcher

- Install OpenCV with CUDA support from the OpenCV for Image Stitching guide.

- The stitcher requires a specific version of RidgeRun's OpenCV Fork, so make sure to follow the guide above even if you already have OpenCV with CUDA support.

- Install the JSON libraries (JsonCpp and JSON-GLib):

sudo apt install libjsoncpp-dev

sudo apt install libjson-glib-dev

- Once the requirements above are fulfilled, proceed with the installation of the tarball. RidgeRun should have provided you with the following compressed tar package:

- cuda-stitcher-X.Y.Z-P-J-eval.tar.gz

- Extract the contents of the file with the following command:

tar xvzf <path-to-evaluation-tar-file>

- The provided cuda-stitcher evaluation version should have the following structure:

cuda-stitcher-X.Y.Z-P-J-eval/
├── examples
│   ├── desert_center.jpg
│   ├── desert_left.jpg
│   ├── desert_right.jpg
│   ├── ImageA.png
│   ├── ImageB.png
│   └── ocvcuda
│       ├── stitcher_example
│       └── three_image_stitcher
├── scripts
│   └── homography_estimation.py
└── usr
    └── lib
        └── aarch64-linux-gnu
            ├── gstreamer-1.0
            │   └── libgstcudastitcher.so
            ├── librrstitcher-X.Y.Z.so -> librrstitcher-X.Y.Z.so.0
            ├── librrstitcher-X.Y.Z.so.0 -> librrstitcher-X.Y.Z.so.X.Y.Z
            ├── librrstitcher-X.Y.Z.so.X.Y.Z
            └── pkgconfig
                └── rrstitcher-X.Y.Z.pc

- Then copy the binaries into the standard GStreamer plug-in search path:

sudo cp -r ${PATH_TO_EVALUATION_BINARY}/usr /

- Where PATH_TO_EVALUATION_BINARY is set to the location in your file system where you stored the binary provided by RidgeRun (e.g., cuda-stitcher-X.Y.Z-P-J-eval).

- Finally, validate that the installation was successful with the following command. You should see the inspect output for the evaluation binary.

gst-inspect-1.0 cudastitcher
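The installation check can also be scripted. The following is a minimal sketch under a few assumptions: the helper names are hypothetical, the aarch64 library path mirrors the tree shown above and therefore applies to Jetson targets only, and the element check relies on gst-inspect-1.0 returning a non-zero exit status when it cannot find the element.

```shell
# Sketch: post-install checks for the stitcher evaluation binaries.
check_installed_files() {
  # $1: root to inspect ("/" after the copy step, or the extracted directory)
  local libdir="${1%/}/usr/lib/aarch64-linux-gnu" status=0
  if [ -e "$libdir/gstreamer-1.0/libgstcudastitcher.so" ]; then
    echo "ok       GStreamer plug-in"
  else
    echo "missing  GStreamer plug-in"
    status=1
  fi
  # The library file name carries the version (X.Y.Z), so match it with a glob.
  set -- "$libdir"/librrstitcher-*
  if [ -e "$1" ]; then
    echo "ok       librrstitcher"
  else
    echo "missing  librrstitcher"
    status=1
  fi
  return "$status"
}

element_available() {
  # Succeeds only if gst-inspect-1.0 exists and can find the element named in $1.
  command -v gst-inspect-1.0 >/dev/null 2>&1 && gst-inspect-1.0 "$1" >/dev/null 2>&1
}

check_installed_files / || echo "Some files are missing; repeat the copy step."
if element_available cudastitcher; then
  echo "cudastitcher element found"
else
  echo "cudastitcher element not found"
fi
```

Pass the extracted directory instead of / to inspect the tarball contents before copying them.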

How to use the Stitcher

The process of configuring and using the stitcher is explored in depth in the Stitcher user guide.

Testing the Stitcher

You can test the stitcher by running the following examples, which stitch two and three images respectively. They are a good starting point to confirm that the installation was successful before moving on to more complex examples.

For both examples, you will need to download the example images that will be stitched. You will find the image links in the next sections and an example of how to get them using curl.

Two images example

This example will get two images and stitch them together.

Get the images

| ImageA | ImageB |
|---|---|
| https://drive.google.com/uc?export=download&id=15abfY3IM-_3d6Mb6L2EZmYknP5BHhgp_ | https://drive.google.com/uc?export=download&id=1j2-zvXd7Us3nPdQwEB52bcrrNbfQz6Vm |

cd ${PATH_TO_EVALUATION_BINARY}/examples

curl -L -o ImageA.png "https://drive.google.com/uc?export=download&id=15abfY3IM-_3d6Mb6L2EZmYknP5BHhgp_"

curl -L -o ImageB.png "https://drive.google.com/uc?export=download&id=1j2-zvXd7Us3nPdQwEB52bcrrNbfQz6Vm"
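Downloads from file-sharing links sometimes return an HTML page instead of the image. The following sketch checks that each downloaded file starts with the standard 8-byte PNG signature. The helper name `is_png` is hypothetical, and the check assumes the files really are PNG images, as their names suggest.

```shell
# Sketch: confirm that the downloaded files are PNG images.
is_png() {
  # PNG files start with the bytes 89 50 4E 47 0D 0A 1A 0A.
  [ "$(head -c 8 "$1" | od -An -tx1 | tr -d ' \n')" = "89504e470d0a1a0a" ]
}

for f in ImageA.png ImageB.png; do
  if [ ! -f "$f" ]; then
    echo "$f: not downloaded yet"
  elif is_png "$f"; then
    echo "$f: looks like a PNG"
  else
    echo "$f: not a PNG (possibly an HTML page); try downloading it again"
  fi
done
```

Run it from the examples directory after the curl commands finish.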

Execute the code

cd ${PATH_TO_EVALUATION_BINARY}/examples

./ocvcuda/stitcher_example -f ImageA.png -s ImageB.png

Result

Examine the output image and make sure the stitching was successful. It should look like the following image.

Three images example

This example will get three images and stitch them together.

Get the images

cd ${PATH_TO_EVALUATION_BINARY}/examples

curl -L -o desert_left.png "https://drive.google.com/uc?export=download&id=1XFJu40LweGZgl1zz4ZHpTiUGjiMLSsrF"

curl -L -o desert_center.png "https://drive.google.com/uc?export=download&id=1NS8l0sCCLeJ0ph3hZIwuCbXBlG-WTke6"

curl -L -o desert_right.png "https://drive.google.com/uc?export=download&id=1jlIYOiYGzmrzdXx-o1RC5uKnoxbN8Q4D"

Execute the code

cd ${PATH_TO_EVALUATION_BINARY}/examples

./ocvcuda/three_image_stitcher -l desert_left.png -c desert_center.png -r desert_right.png

Result

Examine the output image and make sure the stitching was successful. It should look like the following image.

Basic validation pipeline

Create a homographies.json file with the following contents:

{
    "homographies":[
        {
            "images":{
                "target":0,
                "reference":1
            },
            "matrix":{
                "h00": 1.0, "h01": 0.0, "h02": 640.0,
                "h10": 0.0, "h11": 1.0, "h12": 0.0,
                "h20": 0.0, "h21": 0.0, "h22": 1.0
            }
        },
        {
            "images":{
                "target":2,
                "reference":1
            },
            "matrix":{
                "h00": 1.0, "h01": 0.0, "h02": -640.0,
                "h10": 0.0, "h11": 1.0, "h12": 0.0,
                "h20": 0.0, "h21": 0.0, "h22": 1.0
            }
        }
    ]
}
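As a reminder of what the nine coefficients mean, a 3x3 homography maps a pixel (x, y) to ((h00*x + h01*y + h02)/w, (h10*x + h11*y + h12)/w), where w = h20*x + h21*y + h22. The sketch below applies the two matrices from the file to sample points. The helper name `apply_homography` is hypothetical, and this page does not state whether the stitcher applies each matrix in this direction or its inverse, so treat the direction as an assumption.

```shell
# Sketch: apply a 3x3 homography to a pixel coordinate.
apply_homography() {
  # Arguments: x y h00 h01 h02 h10 h11 h12 h20 h21 h22
  awk -v x="$1" -v y="$2" \
      -v h00="$3" -v h01="$4" -v h02="$5" \
      -v h10="$6" -v h11="$7" -v h12="$8" \
      -v h20="$9" -v h21="${10}" -v h22="${11}" \
      'BEGIN {
         w = h20 * x + h21 * y + h22
         printf "%g %g\n", (h00 * x + h01 * y + h02) / w, (h10 * x + h11 * y + h12) / w
       }'
}

# First matrix (target 0): a pure translation of +640 pixels along x.
apply_homography 0 0      1 0 640   0 1 0   0 0 1    # prints: 640 0
# Second matrix (target 2): a pure translation of -640 pixels along x.
apply_homography 100 50   1 0 -640  0 1 0   0 0 1    # prints: -540 50
```

Both matrices are pure translations, which is why only h02 differs from the identity matrix.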

Run the following pipeline, which uses three 640x480 video test sources:

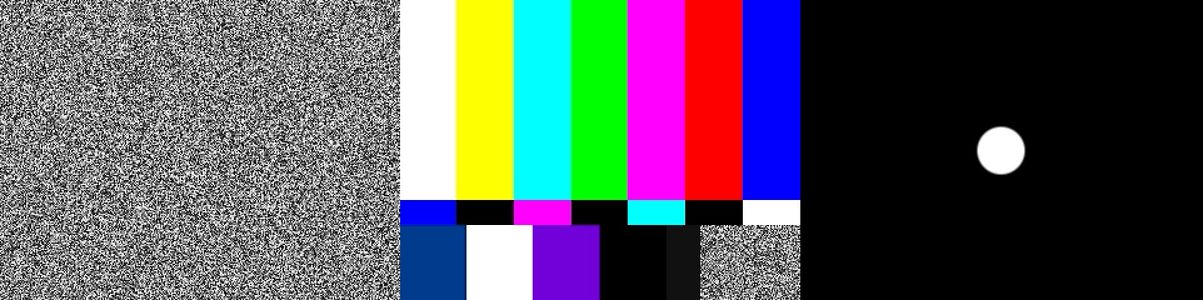

CAPS="video/x-raw(memory:NVMM), width=640, height=480, format=RGBA"
HOMOGRAPHY_LIST="`cat homographies.json | tr -d "\n" | tr -d " "`"
OUTPUT=output.mp4

gst-launch-1.0 -e \
    cudastitcher name=stitcher homography-list=$HOMOGRAPHY_LIST \
    videotestsrc pattern=ball num-buffers=30 ! nvvidconv ! $CAPS ! queue ! stitcher.sink_0 \
    videotestsrc pattern=smpte num-buffers=30 ! nvvidconv ! $CAPS ! queue ! stitcher.sink_1 \
    videotestsrc pattern=snow num-buffers=30 ! nvvidconv ! $CAPS ! queue ! stitcher.sink_2 \
    stitcher. ! queue ! nvvidconv ! \
    nvv4l2h264enc bitrate=20000000 ! h264parse ! mp4mux ! filesink location=$OUTPUT
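The HOMOGRAPHY_LIST assignment compacts the JSON file into a single token by deleting newlines and spaces, so that it can be passed as one property value. The sketch below shows the same tr transformation on a trimmed sample file. The helper name `compact_json` is hypothetical, and the sample omits the matrix entries to keep the output short.

```shell
# Sketch: what the tr-based compaction does to a JSON file.
compact_json() {
  # Delete newlines first, then spaces, as in the pipeline above.
  tr -d '\n' < "$1" | tr -d ' '
}

demo="$(mktemp)"
cat > "$demo" <<'EOF'
{
  "homographies": [
    { "images": { "target": 0, "reference": 1 } }
  ]
}
EOF

compacted="$(compact_json "$demo")"
echo "$compacted"
rm -f "$demo"
```

This approach also removes spaces inside string values, which is harmless for homographies.json because its values are numeric.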

For x86 platforms, run the following pipeline:

gst-launch-1.0 -e \
    cudastitcher name=stitcher homography-list=$HOMOGRAPHY_LIST \
    videotestsrc pattern=ball num-buffers=30 ! videoconvert ! $CAPS ! queue ! stitcher.sink_0 \
    videotestsrc pattern=smpte num-buffers=30 ! videoconvert ! $CAPS ! queue ! stitcher.sink_1 \
    videotestsrc pattern=snow num-buffers=30 ! videoconvert ! $CAPS ! queue ! stitcher.sink_2 \
    stitcher. ! queue ! videoconvert ! \
    x264enc ! h264parse ! mp4mux ! filesink location=$OUTPUT

The pipeline above generates a 1-second, 1920x480 output.mp4 video. The first frame should look like the following image.
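The stated output size and duration follow from the pipeline parameters. The short sketch below reproduces the arithmetic, assuming the three inputs are placed side by side without overlap (as the 640-pixel translations in homographies.json suggest) and assuming a rate of 30 frames per second, which the stated duration implies.

```shell
# Sketch: expected output geometry of the validation pipeline.
NUM_INPUTS=3
WIDTH=640
HEIGHT=480
NUM_BUFFERS=30
FPS=30   # assumption: implied by 30 buffers producing 1 second of video

out_width=$(( NUM_INPUTS * WIDTH ))
duration=$(( NUM_BUFFERS / FPS ))
echo "${out_width}x${HEIGHT}, ${duration} s"
```

If the resulting video has a different size or length, recheck the homography file and the num-buffers values first.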

Running more examples

The Stitcher Examples wiki has many pipeline examples to test the stitcher's functionality; however, make sure to read the Stitcher user guide first.

Troubleshooting

The first step in troubleshooting a failing evaluation binary is to inspect the GStreamer debug output. To enable it, prefix the pipeline command with the GST_DEBUG variable:

GST_DEBUG=2 gst-launch-1.0
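To share the debug output with support, it helps to capture it in a file. The sketch below wraps any command with the standard GStreamer environment variables GST_DEBUG, GST_DEBUG_NO_COLOR, and GST_DEBUG_FILE. The wrapper name `run_with_debug` and the log file name are illustrative.

```shell
# Sketch: run a command with GStreamer debug output written to a log file.
run_with_debug() {
  # $1: log file path; remaining arguments: the command to run
  local log="$1"
  shift
  GST_DEBUG=2 GST_DEBUG_NO_COLOR=1 GST_DEBUG_FILE="$log" "$@"
}

# Example usage (uncomment on a system with GStreamer installed):
# run_with_debug gst-debug.log gst-launch-1.0 videotestsrc num-buffers=1 ! fakesink
```

Disabling color keeps escape sequences out of the log, which makes it easier to read when attached to an email.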

If the output doesn't help you figure out the problem, please contact support@ridgerun.com with the output of the GStreamer debug and any additional information you consider useful.

RidgeRun also offers professional support hours that you can invest in any embedded Linux-related task you want to assign to RidgeRun, such as hardware bring-up, application development, GStreamer pipeline fine-tuning, and driver work.

Project Evaluation In Docker Container

This section explains how to run the project inside a Docker container.

Important Note: All these steps need to be executed on a Jetson board.

Installation Steps

1- First, verify that Docker is installed. You can check the Docker version installed on the Jetson with the following command:

docker --version

If you don't have Docker installed, you can install it with the following command:

sudo apt install docker.io

2- Install the NVIDIA Container Runtime package. This package is necessary because the container needs access to the NVIDIA libraries. You can install it with the following command:

sudo apt install nvidia-container-runtime

3- Copy the Docker image that RidgeRun sent you to your Jetson device, then run the following command to load the image into Docker:

sudo docker load -i <path to docker image>

4- In this step, you will start a container with the project inside of it. To start the container for the first time use the following command:

sudo docker run -it --runtime nvidia -v /tmp/argus_socket:/tmp/argus_socket --name=stitcher-eval-container cuda-stitcher-eval /bin/bash

The previous command uses the --name= flag to name the container stitcher-eval-container, but you can give the container any name you want.

The docker run command only needs to be executed once. If you close the container and want to open it again just use the following commands:

sudo docker start stitcher-eval-container

sudo docker attach stitcher-eval-container

5- Finally, you can test the stitcher project. Please refer to the Testing the Stitcher section.

Note: When you open the container, you will be in the PATH_TO_EVALUATION_BINARY path by default.
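Because docker run is only needed the first time, the choice between run and start/attach can be scripted. The following is a minimal sketch that reuses the container and image names from the steps above. The helper names `name_in_list` and `open_container` are hypothetical, and the script assumes Docker and the NVIDIA Container Runtime are already installed.

```shell
# Sketch: open the evaluation container, creating it only if it does not exist.
CONTAINER="${CONTAINER:-stitcher-eval-container}"
IMAGE="${IMAGE:-cuda-stitcher-eval}"

name_in_list() {
  # Reads one container name per line on stdin; succeeds if $1 matches a whole line.
  grep -qxF -- "$1"
}

open_container() {
  if sudo docker ps -a --format '{{.Names}}' | name_in_list "$CONTAINER"; then
    # The container already exists: restart it and attach to it.
    sudo docker start "$CONTAINER" && sudo docker attach "$CONTAINER"
  else
    # First use: create the container with the NVIDIA runtime.
    sudo docker run -it --runtime nvidia \
      -v /tmp/argus_socket:/tmp/argus_socket \
      --name="$CONTAINER" "$IMAGE" /bin/bash
  fi
}
```

Call open_container on the Jetson board after loading the image. Set CONTAINER beforehand if you chose a different container name.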