Image Stitching for NVIDIA Jetson Basics

This page describes the fundamentals of RidgeRun's Stitcher. After reading it, you should be able to tell whether the plugin, in any of its configurations, meets your application requirements.

Stitching fundamentals

Image stitching is the process of merging multiple overlapping input images to create a larger image. The image stitching algorithm can be divided into the following stages:

Registration

This stage consists of matching features between the input images to find the best possible arrangement before stitching. In other words, it finds the transformations that must be applied to the images so that they match, which results in a better stitch overall. RidgeRun's stitcher uses a homography estimation tool to find the required transformation as a homography matrix.
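The following minimal NumPy sketch of the normalized Direct Linear Transform (DLT) illustrates what a homography estimation step computes. It is not RidgeRun's homography estimation tool. The function names `estimate_homography` and `_normalize` are hypothetical. The sketch also assumes that the matched point pairs are already available and free of outliers. Production tools typically wrap this estimation in RANSAC, as OpenCV's `cv::findHomography` does with the `cv::RANSAC` method.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move the centroid to the origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    return s * (pts - centroid), T


def estimate_homography(src, dst):
    """Estimate the 3x3 H with dst ~ H @ src (homogeneous) from N >= 4 point pairs."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("expected two (N, 2) arrays with N >= 4")
    # Normalizing both point sets keeps the linear system well conditioned.
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # u = (h1 x + h2 y + h3) / (h7 x + h8 y + h9), likewise for v, rearranged to A h = 0.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # h is the right singular vector of A with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)
    # Undo the normalization: dst ~ inv(T_dst) @ H_n @ T_src @ src.
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]
```

The input must contain at least four correspondences, and no three of the points may be collinear. Under those conditions, `estimate_homography(src, dst)` returns H normalized so that `H[2, 2] == 1`. To map a point, multiply `(x, y, 1)` by H and divide by the third coordinate; the result is the point's position in the other image's frame.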

Calibration

This stage is divided into alignment and compositing. Each image is first transformed by the previously calculated homography. Once the images are properly arranged, they are combined into one larger image.
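The alignment and compositing steps can be sketched as follows. The sketch assumes grayscale NumPy images and a homography that maps pixels of the image being warped into the reference frame. It is a hypothetical, simplified illustration:

- `warp_nearest` uses inverse mapping with nearest-neighbor sampling.
- `composite` pastes the unwarped reference on top, with no blending.
- Points where the projective denominator becomes zero are not handled.

A real implementation would typically use bilinear interpolation and run on the GPU.

```python
import numpy as np


def warp_nearest(image, H, out_shape):
    """Warp a grayscale `image` into a canvas of `out_shape` with homography H.

    H maps source pixel coordinates (x, y) to canvas coordinates. Inverse mapping is
    used: every canvas pixel looks up its source pixel, so the output has no holes.
    """
    H = np.asarray(H, dtype=float)
    h_out, w_out = out_shape
    ys, xs = np.mgrid[0:h_out, 0:w_out]
    canvas_pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    src = np.linalg.inv(H) @ canvas_pts
    # Back to Cartesian coordinates, rounded to the nearest source pixel.
    src_x = np.rint(src[0] / src[2]).astype(int)
    src_y = np.rint(src[1] / src[2]).astype(int)
    valid = (src_x >= 0) & (src_x < image.shape[1]) & (src_y >= 0) & (src_y < image.shape[0])
    warped = np.zeros(out_shape, dtype=image.dtype)
    mask = np.zeros(out_shape, dtype=bool)
    rows, cols = ys.ravel()[valid], xs.ravel()[valid]
    warped[rows, cols] = image[src_y[valid], src_x[valid]]
    mask[rows, cols] = True  # marks canvas pixels covered by the warped image
    return warped, mask


def composite(reference, warped):
    """Paste the unwarped reference at the canvas origin over the warped image."""
    canvas = warped.copy()
    h, w = reference.shape[:2]
    canvas[:h, :w] = reference
    return canvas
```

Because `composite` lets the reference overwrite the overlap, any exposure difference between the cameras shows up as a hard seam. The blending stage targets exactly that seam.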

Blending

The blending stage aims to minimize the difference between the images in the overlapping region. This difference may be caused by different camera configurations, such as exposure or gain.
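One simple way to reduce the difference in the overlap is to combine gain compensation with linear (feather) blending. This approach is an assumption for illustration, not taken from the stitcher itself. It uses two hypothetical helpers:

- `gain_compensate` scales one image so that the mean intensity of its overlap matches the reference.
- `feather_blend` cross-fades two horizontally adjacent images across an overlap of known width.

```python
import numpy as np


def gain_compensate(other, ref_overlap, other_overlap):
    """Scale `other` so its overlap has the same mean intensity as the reference's overlap."""
    other_mean = float(np.mean(other_overlap))
    if other_mean == 0.0:
        raise ValueError("overlap of the image to compensate is completely dark")
    gain = float(np.mean(ref_overlap)) / other_mean
    return other.astype(float) * gain, gain


def feather_blend(left, right, overlap):
    """Join two same-height images whose last/first `overlap` columns show the same scene.

    Across the overlap, the weight of `left` falls linearly from 1 to 0 while the
    weight of `right` rises from 0 to 1, which hides the seam between them.
    """
    if not 1 <= overlap <= min(left.shape[1], right.shape[1]):
        raise ValueError("overlap must be between 1 and the width of the narrower image")
    left = left.astype(float)
    right = right.astype(float)
    alpha = np.linspace(1.0, 0.0, overlap)
    # Broadcast the per-column weights over rows (and channels, for color images).
    alpha = alpha.reshape((1, overlap) + (1,) * (left.ndim - 2))
    seam = alpha * left[:, -overlap:] + (1.0 - alpha) * right[:, :overlap]
    return np.hstack([left[:, :-overlap], seam, right[:, overlap:]])
```

Consider a left image of constant intensity 100 and a right image of constant intensity 200 that overlap by 3 columns. Feathering alone produces a ramp of 100, 150, 200 across the seam. Compensating the gain first (gain 0.5) yields a uniform result. OpenCV's stitching module offers more complete versions of both ideas, such as `cv::detail::GainCompensator` and `cv::detail::FeatherBlender`.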

The cuda-stitcher is not limited to a fixed number of inputs; it supports panoramic stitching of N images. However, for simplicity, the sections below use only 2 inputs.
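With more than two inputs, one common approach is to estimate a homography between each pair of neighboring images. These homographies are then chained so that every image is expressed in the frame of a single reference. This approach is an assumption here, not necessarily the one cuda-stitcher uses. The sketch below assumes that `pairwise[k]` maps image k+1 into image k; the function name is hypothetical.

```python
import numpy as np


def chain_to_reference(pairwise):
    """Map every image into the frame of image 0.

    pairwise[k] is a 3x3 homography taking pixels of image k+1 into image k, so
    image k+1 reaches image 0 through H_0,k+1 = H_0,k @ pairwise[k].
    """
    to_ref = [np.eye(3)]  # image 0 is the reference, so its homography is the identity
    for H in pairwise:
        M = to_ref[-1] @ np.asarray(H, dtype=float)
        to_ref.append(M / M[2, 2])  # keep the usual normalization H[2, 2] == 1
    return to_ref
```

Each product compounds the estimation errors of the pairs along the chain, so images far from the reference tend to drift. This is one reason panorama tools often pick a central image as the reference.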

The following graphic summarizes the main stages of stitching.

Stitching Example

This section walks through the stitching stages using two real images.

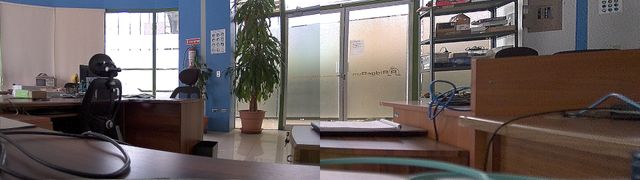

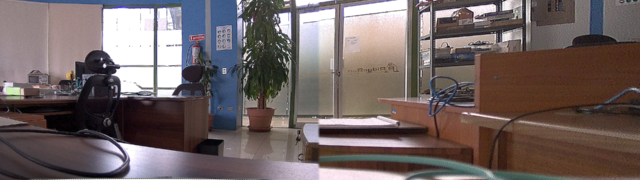

On the following image the two inputs are shown:

Both images need to share common features, such as the potted plant and the door. The algorithm uses these common features to obtain a homography, which is then used to transform the input images. An example of the matched features can be seen in the following image:
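Common features are often matched with a nearest-neighbor search plus Lowe's ratio test, which discards ambiguous matches. The following brute-force NumPy sketch assumes float descriptors compared with Euclidean distance. Binary descriptors such as ORB would use Hamming distance instead. The function name `match_features` and the 0.75 default ratio are illustrative assumptions, not parameters of RidgeRun's tool.

```python
import numpy as np


def match_features(desc_a, desc_b, ratio=0.75):
    """Return (i, j) pairs matching rows of desc_a to rows of desc_b."""
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    if len(desc_b) < 2:
        raise ValueError("the ratio test needs at least two candidate descriptors")
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    matches = []
    for i, (best, second) in enumerate(order[:, :2]):
        # Keep only distinctive matches: the best candidate must be clearly
        # closer than the runner-up.
        if dists[i, best] < ratio * dists[i, second]:
            matches.append((i, int(best)))
    return matches
```

The surviving index pairs select the keypoint coordinates that serve as correspondences for homography estimation.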

This transformation is applied to only one of the images, while the other is kept as the reference. In this example, the left image is kept as the reference and the right image is warped. The result is as follows:
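Because the right image is warped into the frame of the left one, the output canvas must be large enough to hold both the reference and the warped corners of the other image. The following minimal sketch assumes that shapes are given as (height, width) and that the homography maps the other image into the reference frame. `canvas_bounds` is a hypothetical helper.

```python
import numpy as np


def canvas_bounds(ref_shape, other_shape, H):
    """Return (offset, (height, width)) for a canvas holding the reference and the warped image."""
    H = np.asarray(H, dtype=float)

    def corners(shape):
        h, w = shape[:2]
        return np.array([[0, 0], [w, 0], [w, h], [0, h]], dtype=float)

    # Project the corners of the other image into the reference frame.
    warped = np.c_[corners(other_shape), np.ones(4)] @ H.T
    warped = warped[:, :2] / warped[:, 2:]
    all_pts = np.vstack([corners(ref_shape), warped])
    min_xy = np.floor(all_pts.min(axis=0))
    max_xy = np.ceil(all_pts.max(axis=0))
    # Translation that moves every pixel to non-negative canvas coordinates.
    offset = np.array([[1.0, 0.0, -min_xy[0]],
                       [0.0, 1.0, -min_xy[1]],
                       [0.0, 0.0, 1.0]])
    width, height = (max_xy - min_xy).astype(int)
    return offset, (int(height), int(width))
```

If the warped image extends to the left of or above the reference, `offset` shifts everything into positive coordinates. The reference is then placed with `offset`, and the other image is warped with `offset @ H`. For example, a 640x480 reference combined with a right image shifted 500 pixels to the right yields a canvas 1140 pixels wide.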

Note that the seam is quite evident because the cameras use different exposure and gain: the same region of the scene has a different intensity in each image. This can be reduced by ensuring that both cameras run with the same parameters and by blending along the border: