NVIDIA Jetson Orin - RidgeRun Demo on Panoramic Stitching and WebRTC Streaming

NVIDIA Jetson Orin RidgeRun documentation is currently under development.


This wiki serves as a user guide for the Panoramic Stitching and WebRTC Streaming on NVIDIA Jetson reference design.

Overview of Panoramic Stitching and WebRTC Streaming

The demo uses a Jetson AGX Orin devkit to create a 360° panoramic image in real time from 3 different fisheye cameras. The result is then streamed to a remote browser via WebRTC. The following image summarizes the overall functionality.

1. The system captures three independent video streams from three different RTSP cameras. Each camera captures 3840x2160 (4K) images at 30 frames per second and is equipped with a 180° equisolid fisheye lens. The cameras are arranged so that their viewing directions are 120° apart. The following image illustrates this concept.

As you can see, the cameras combined cover the full 360° field of view of the scene. Since each camera captures a 180° field of view and the cameras face 120° apart from each other, each pair of adjacent cameras overlaps by 60°. This overlap is important because it gives the system a margin to smoothly blend adjacent images. Besides this arrangement, the cameras should have little to no tangential or radial angle.

2. The Jetson AGX Orin receives and decodes these RTSP streams. The decoded images are then used to create a panoramic representation of the full 360° view of the scene. The different HW accelerators in the SoM are carefully configured to achieve real-time processing. The image below shows an example of the resulting panorama.

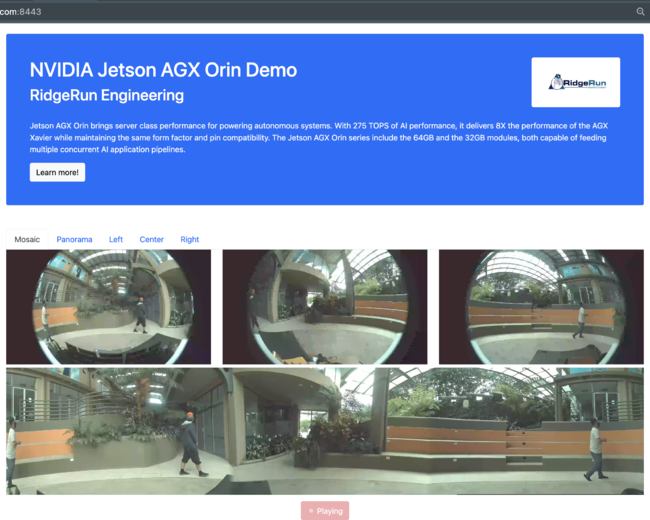

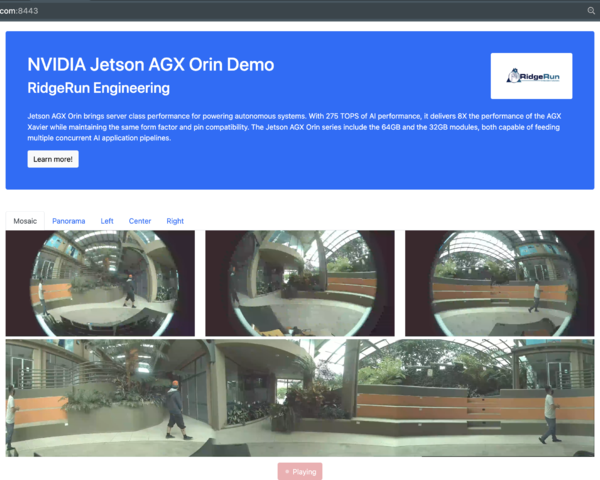

3. Finally, the panorama is encoded and streamed through the network to a client browser using WebRTC. Along with the panorama, the original fisheye images are also sent. The client is presented with an interactive web page that allows them to explore each camera stream independently. The following image shows the implemented web page in mid-operation.
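The camera geometry in step 1 can be checked with a short calculation, where $N = 3$ is the number of cameras and $\Phi = 180^\circ$ is the field of view of each lens. The spacing between cameras and the overlap between each pair of adjacent cameras are:

$$
\Delta = \frac{360^\circ}{N} = \frac{360^\circ}{3} = 120^\circ, \qquad \Phi - \Delta = 180^\circ - 120^\circ = 60^\circ
$$

For the projection stage, a common model for an equisolid fisheye lens maps a ray arriving at angle $\theta$ from the optical axis to a radius $r = 2f\sin(\theta/2)$ on the sensor. This guide does not document the exact remap used by the demo, so the following is only a sketch of the standard model; the focal length $f$ (in pixels), the image center $(c_x, c_y)$, and the axis sign conventions are assumed calibration parameters. For an output equirectangular pixel at longitude $\lambda$ and latitude $\varphi$ relative to the camera's optical axis $z$, the fisheye pixel $(u, v)$ to sample is:

$$
\begin{aligned}
\mathbf{d} &= (\cos\varphi\,\sin\lambda,\ \sin\varphi,\ \cos\varphi\,\cos\lambda) \\
\theta &= \arccos(d_z), \qquad \alpha = \operatorname{atan2}(d_y,\ d_x) \\
r &= 2f\sin(\theta/2) \\
(u,\ v) &= (c_x + r\cos\alpha,\ c_y + r\sin\alpha)
\end{aligned}
$$

Since a 180° lens limits $\theta$ to at most $90^\circ$, the image circle has radius $2f\sin 45^\circ = \sqrt{2}\,f$. Precomputing $(u, v)$ for every output pixel yields the kind of lookup map that a remap stage consumes.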

System Description

While the system should be portable to other platforms of the Jetson family, the results presented in this guide were obtained using a Jetson AGX Orin. More specifically, the configuration used in this demo is detailed in the table below.

| Configuration | Value |
|---|---|
| SoM | Jetson AGX Orin |
| Memory | 64GB |
| Carrier | Jetson AGX Orin Developer Kit |
| Camera protocol | RTSP |
| Image size | 4K (3840x2160) |
| Framerate | 30fps |
| Codec | AVC (H.264) |

Resource Scheduling

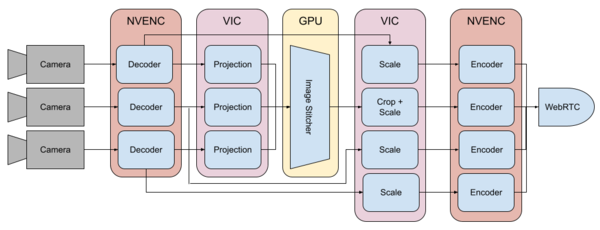

To maintain steady real-time operation at 30fps, this reference design makes use of different hardware modules in the SoM. The following image shows how the different processing stages of the system were scheduled to the different hardware units.

These units are accessed through GStreamer. In a GStreamer pipeline, each processing accelerator is abstracted as a GStreamer element. The following table describes the different processing stages, the HW accelerators used for them, and the software framework used to access them.

| Processing Stage | Amount | HW Unit | Software Framework | Notes |
|---|---|---|---|---|
| Camera | 3 | CPU | GStreamer (gstrtspsrc) | |
| Decoder | 3 | NVDEC | GStreamer (gstnvv4l2decoder) | |
| Projection | 3 | VIC | VPI (remap) + GstVPI | Converts the fisheye projections into partial equirectangular images. |
| Image Stitching | 1 | GPU | CUDA + GstCUDA + CudaStitcher | Merges the partial equirectangular images into a single 360° panoramic representation and blends the seams smoothly. |
| Crop and Scaling | 4 | VIC | GStreamer (gstnvvidconv) | Crops out the black borders left by the stitching process and scales the images to 1080p for network transmission. |
| Encoder | 4 | NVENC | GStreamer (gstnvv4l2h264enc) | |
| WebRTC | 4 | CPU | GStreamer (webrtcbin) + GstWebRTCWrapper | Encrypted network streaming of each camera and of the panoramic representation. |
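To make the table more concrete, the sketch below strings together the stock Jetson GStreamer elements for a single camera branch: RTSP depayloading, NVDEC decoding (nvv4l2decoder), VIC scaling (nvvidconv), and NVENC encoding (nvv4l2h264enc). It is an illustration under assumptions, not the demo's actual pipeline: the VPI projection, CudaStitcher, and webrtcbin stages are omitted because their element names and properties are not documented here, scaling happens right after decoding instead of after stitching, the encoded stream ends in a fakesink, and the 200 ms rtspsrc latency is an arbitrary choice. camera_branch is a hypothetical helper that only prints a gst-launch-1.0 pipeline description.

```sh
# Hypothetical helper: prints a gst-launch-1.0 description of ONE camera
# branch (RTSP -> depay/parse -> NVDEC -> VIC scale -> NVENC). The VPI
# projection, CudaStitcher, and webrtcbin stages are intentionally left out.
camera_branch() {
  local url="$1" width="${2:-1920}" height="${3:-1080}"
  local src="rtspsrc location=${url} latency=200 ! rtph264depay ! h264parse"
  local dec="nvv4l2decoder"                          # hardware decode (NVDEC)
  local scale="nvvidconv ! video/x-raw(memory:NVMM),width=${width},height=${height}"
  local enc="nvv4l2h264enc ! h264parse ! fakesink"   # NVENC, then discard
  echo "${src} ! ${dec} ! ${scale} ! ${enc}"
}

# Usage on the Jetson (unquoted on purpose so each token becomes an argument):
#   gst-launch-1.0 -e $(camera_branch rtsp://HOST_IP_ADDRESS:8554/left)
```

Printing the description instead of running it keeps the helper easy to inspect and to adapt, for example by swapping fakesink for a file sink while debugging a single camera.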

Running the Demo

Prepare the camera sources

Panoramic stitching of a set of cameras requires a thorough calibration process. The details of the calibration are out of the scope of this document. To reproduce the exact same results presented in this guide, we provide 3 pre-recorded videos from the cameras calibrated for the demo. You can use these videos to simulate RTSP cameras from a host PC, for example. Because these are emulated video sources, the streams may be slightly out of sync. Download and store the videos in a known location:

Install VLC, which is the tool we use to simulate the RTSP cameras:

# On Debian-based systems

sudo apt install vlc

The following commands serve each file as an RTSP stream, looping it indefinitely:

# Run each one in a different terminal

cvlc -vvv --loop panoramic_webrtc_demo_left.mp4 --sout="#gather:rtp{sdp=rtsp://127.0.0.1:8554/left}" --sout-keep --sout-all &

cvlc -vvv --loop panoramic_webrtc_demo_center.mp4 --sout="#gather:rtp{sdp=rtsp://127.0.0.1:8554/center}" --sout-keep --sout-all &

cvlc -vvv --loop panoramic_webrtc_demo_right.mp4 --sout="#gather:rtp{sdp=rtsp://127.0.0.1:8554/right}" --sout-keep --sout-all &
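The three commands above differ only in the camera name, so they can also be started from a single loop. A minimal sketch, assuming a POSIX shell and that the videos are in the current directory: start_fake_cameras is a hypothetical helper that launches one cvlc process per camera in the background, skips missing files, and only prints the commands when DRY_RUN=1.

```sh
# Hypothetical helper: starts one cvlc RTSP source per camera video,
# equivalent to the three commands above. With DRY_RUN=1 it only prints
# the commands it would run.
start_fake_cameras() {
  local cam file sout
  for cam in left center right; do
    file="panoramic_webrtc_demo_${cam}.mp4"
    sout="#gather:rtp{sdp=rtsp://127.0.0.1:8554/${cam}}"
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "cvlc -vvv --loop ${file} --sout=\"${sout}\" --sout-keep --sout-all"
    elif [ -f "$file" ]; then
      cvlc -vvv --loop "$file" --sout="$sout" --sout-keep --sout-all &
    else
      echo "missing ${file}, skipping" >&2
    fi
  done
}
```

Running DRY_RUN=1 start_fake_cameras first is a cheap way to confirm the file names and stream URLs before any VLC process starts.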

If everything is working as expected, you should be able to play each camera's RTSP stream:

# Run each one in a different terminal

HOST_IP_ADDRESS=127.0.0.1

vlc rtsp://${HOST_IP_ADDRESS}:8554/left

vlc rtsp://${HOST_IP_ADDRESS}:8554/center

vlc rtsp://${HOST_IP_ADDRESS}:8554/right

A video player showing the camera should open. The system that runs the simulated cameras must be on the same local network as the Jetson AGX Orin.
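When the streams are tested from another machine, such as the Jetson, HOST_IP_ADDRESS has to be the camera host's IP address rather than 127.0.0.1. A minimal sketch, assuming a POSIX shell: camera_urls is a hypothetical helper that prints the three stream URLs for a given host, and the usage lines probe each one with gst-discoverer-1.0, a GStreamer command-line tool, which avoids opening a VLC window on a headless Jetson.

```sh
# Hypothetical helper: prints the RTSP URL of each simulated camera,
# using the same port (8554) and mount names as the cvlc commands above.
camera_urls() {
  local host="${1:-127.0.0.1}" cam
  for cam in left center right; do
    echo "rtsp://${host}:8554/${cam}"
  done
}

# Usage from the Jetson (HOST_IP_ADDRESS is the camera host's address):
#   for url in $(camera_urls "$HOST_IP_ADDRESS"); do
#     gst-discoverer-1.0 -t 5 "$url"
#   done
```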

Run the Docker Image

Once you can simulate the camera sources, you need to download and run the Docker image that contains the demo binaries. For this, you'll need a Jetson AGX Orin board with the newest L4T version installed. We specifically developed the demo for JetPack 5.0 Developer Preview. The Docker container requires the host (the Orin) to have CUDA and the GPU drivers properly installed and running. An installation via the SDK Manager should take care of this automatically.

In the Orin terminal, type the following Docker command to pull and run a container from the pre-built image:

docker run --gpus all -it --rm --net=host --privileged dockerhub.com/ridgerun/panoramic_stitching_webrtc_demo:latest

Due to all the software dependencies, the image is quite big (> 2GB), so it may take a while to download and start the container. The command above uses the following mandatory options:

- --gpus all: makes all GPUs available to the container.
- -it: makes the container interactive by allocating a terminal so you can interact with it.
- --rm: removes the container after usage.
- --net=host: connects the container to the host network. Necessary for proper device execution.
- --privileged: grants the container access to the host resources.

After the container has been created, you'll be welcomed with a brand new terminal you can interact with. Type exit when you wish to return to the host system and discard the container.

Start the Web Services

The web services have two purposes:

- Host the web page

- Perform WebRTC signaling between the Orin and the browser

For convenience, these are provided within the Docker container to be run in the Orin. To run them, execute the following in the Orin terminal:

cd ~/web_services

HTTPS_KEY=demo.key HTTPS_CERT=demo.crt ./start_services

The HTTPS_KEY and HTTPS_CERT variables point to the key and certificate needed to establish HTTPS connections between the browser, the Orin, and the services. HTTPS is required for proper WebRTC streaming.

If everything went well, you should be able to open a browser and navigate to https://ORIN_IP_ADDRESS:8443.
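It can also help to confirm from the command line that the web server answers before opening a browser. A minimal sketch, assuming curl is available on the machine running the check: wait_for_https is a hypothetical helper that polls the URL once per second, using curl's -k option so that the self-signed demo certificate is accepted.

```sh
# Hypothetical helper: polls an HTTPS URL until the server answers or the
# attempts run out. -k accepts the self-signed demo certificate; any HTTP
# response (even an error page) counts as "up".
wait_for_https() {
  local url="$1" attempts="${2:-10}" i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl -sk -o /dev/null --max-time 2 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage:
#   wait_for_https https://ORIN_IP_ADDRESS:8443 && echo "web services are up"
```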

Regenerating Expired Key Pairs

The container provides an example key and certificate for demonstration purposes. These, however, have an expiration date and may need to be regenerated. If this is your case, run the following:

cd ~/web_services

openssl req -newkey rsa:2048 -nodes -keyout demo_new.key -x509 -days 365 -out demo_new.crt

Warning: NEVER deploy these demo keys to production or to a public server.
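The openssl command above prompts interactively for the certificate fields, and nothing tells you whether the current certificate has actually expired. The sketch below, assuming a POSIX shell and the openssl CLI, covers both: cert_still_valid relies on openssl x509 -checkend, and regenerate_demo_pair reruns the same openssl req command with -subj to skip the prompts. Both function names are hypothetical, and the default subject CN of localhost is an assumption; the Orin's IP address or hostname is likely a better choice.

```sh
# Hypothetical helper: succeeds if the certificate is still valid for at
# least the given number of seconds (default: one day).
cert_still_valid() {
  local crt="$1" seconds="${2:-86400}"
  openssl x509 -checkend "$seconds" -noout -in "$crt" >/dev/null
}

# Hypothetical helper: same openssl req call as above, made non-interactive
# with -subj. The default CN (localhost) is an assumption.
regenerate_demo_pair() {
  local key="$1" crt="$2" cn="${3:-localhost}"
  openssl req -newkey rsa:2048 -nodes -keyout "$key" -x509 -days 365 \
    -out "$crt" -subj "/CN=${cn}" 2>/dev/null
}

# Usage inside the container:
#   cd ~/web_services
#   if ! cert_still_valid demo.crt; then
#     regenerate_demo_pair demo_new.key demo_new.crt ORIN_IP_ADDRESS
#     HTTPS_KEY=demo_new.key HTTPS_CERT=demo_new.crt ./start_services
#   fi
```

Because the new pair is written to demo_new.key and demo_new.crt, the HTTPS_KEY and HTTPS_CERT variables must point to those files when the services are started.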

Running the Services Independently

The signaler and the web server are independent processes and may, as such, be run separately. To do so, run the following:

# The WebRTC signaler

HTTPS_KEY=demo.key HTTPS_CERT=demo.crt ./start_signaler

# The web server

HTTPS_KEY=demo.key HTTPS_CERT=demo.crt ./start_web_server

They can even be run from a different machine. If you decide to do so, make sure you update the IP address in the GStreamer pipelines and in the web page.

Run the Demo

We are finally ready to run the demo.

1. On a host computer, open Google Chrome and browse to https://ORIN_IP_ADDRESS:8443. Note that this computer must be on the same local network as the Jetson Orin and, consequently, as the RTSP cameras.

2. In the web page, click on the Connect! button. You should see a spinner indicating that the system is ready and waiting for the Jetson Orin to connect.

3. In the Jetson Orin container, start the processing pipeline by running the following:

cd ~/processing_pipeline

./run_pipeline

4. The web page should recognize the Orin connection and replace the spinner with a video component. Be aware that this may take a few seconds.

5. At any point, you can disconnect the Orin and the web page will return to the spinner view. Once the Orin reconnects, the videos resume.

Troubleshooting

First of all, make sure you are using Google Chrome. WebRTC support varies considerably across browsers, and we've found that other browsers may present various problems.

If the problem persists:

1. Refresh the web page.

2. Open the browser console: Ctrl + Shift + J (or Command + Option + J on Mac).

3. Start the processing pipeline with debug output enabled:

cd ~/processing_pipeline

GST_DEBUG=3 ./run_pipeline

4. Email us a description of the problem, what you see, and both the terminal and browser console logs.
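The terminal log is easiest to send if it is also saved to a file. A minimal sketch, assuming a POSIX shell: run_with_log is a hypothetical helper that runs any command with GST_DEBUG defaulting to 3, as in the debug step, and copies its combined stdout and stderr to both the terminal and a log file via tee. GStreamer's own GST_DEBUG_FILE variable is an alternative when only the debug messages need to go to a file.

```sh
# Hypothetical helper: runs a command with GStreamer debug output enabled
# (GST_DEBUG defaults to 3) and tees stdout+stderr into a log file.
# Note: the function returns tee's exit status, not the command's.
run_with_log() {
  local log="$1"
  shift
  GST_DEBUG="${GST_DEBUG:-3}" "$@" 2>&1 | tee "$log"
}

# Usage inside the container:
#   cd ~/processing_pipeline
#   run_with_log pipeline_debug.log ./run_pipeline
```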

Results and Performance

Performance was measured on the NVIDIA Jetson AGX Orin 64 GB, as well as on the Jetson Orin NX and Jetson Orin Nano using the emulation features provided by the NVIDIA Jetson AGX Orin.

Jetson AGX Orin 64 GB

The following video shows the demo in action by streaming to the web page.

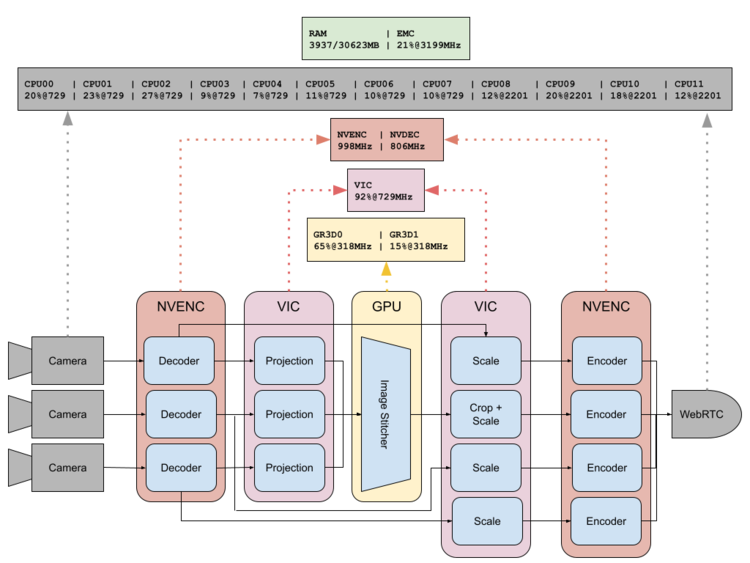

The following figure presents the resource usage of the different HW units involved in the processing.

The table below summarizes the same information.

| CPU core | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Load (% @ MHz) | 20%@729 | 23%@729 | 27%@729 | 9%@729 | 7%@729 | 11%@729 | 10%@729 | 10%@729 | 12%@2201 | 20%@2201 | 18%@2201 | 12%@2201 |

| HW Unit | Measurement | Value |
|---|---|---|
| Mem | RAM (MB) | 3937/30623MB |
| Mem | EMC (% @ MHz) | 21%@3199MHz |
| Codec | NVENC (MHz) | 998MHz |
| Codec | NVDEC (MHz) | 806MHz |
| Image | VIC (% @ MHz) | 92%@729MHz |
| GPU | GR3D (% @ MHz) | 65%@318 |
| GPU | GR3D2 (% @ MHz) | 15%@318 |
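The guide does not state which tool collected these measurements. The % @ MHz notation matches the per-core CPU field printed by NVIDIA's tegrastats utility, so, as an assumption, the sketch below shows how per-core load and an average could be extracted from a tegrastats line. cpu_loads is a hypothetical helper; cores reported as off are listed but left out of the average, and tegrastats may need sudo to report every field.

```sh
# Hypothetical helper: reads tegrastats-style lines on stdin and prints the
# per-core load plus the average over online cores. Assumes the CPU field
# looks like "CPU [20%@729,23%@729,off,...]".
cpu_loads() {
  awk '{
    start = index($0, "CPU [")
    if (start == 0) next                     # no CPU field on this line
    rest = substr($0, start + 5)             # text after "CPU ["
    field = substr(rest, 1, index(rest, "]") - 1)
    n = split(field, cores, ",")
    sum = 0; online = 0
    for (i = 1; i <= n; i++) {
      if (index(cores[i], "%@") == 0) {      # e.g. "off" for disabled cores
        printf "core %d: %s\n", i - 1, cores[i]
        continue
      }
      split(cores[i], parts, "%@")
      printf "core %d: %s%% @ %s MHz\n", i - 1, parts[1], parts[2]
      sum += parts[1]; online++
    }
    if (online > 0) printf "average: %.1f%%\n", sum / online
  }'
}

# Usage on the Jetson (one sample per second, first sample only):
#   tegrastats --interval 1000 | head -n 1 | cpu_loads
```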

Jetson Orin NX 16 GB (Emulated)

The following table summarizes the performance results obtained while running the reference design on the Jetson AGX Orin emulating the Jetson Orin NX 16 GB.

| CPU core | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| Load (% @ MHz) | 18%@1984 | 27%@1984 | 14%@1340 | 25%@1190 | 18%@729 | 19%@729 | 28%@729 | 28%@729 |

| HW Unit | Measurement | Value |
|---|---|---|
| Mem | RAM (MB) | 4955/14433MB |
| Mem | EMC (% @ MHz) | 22%@3200MHz |
| Codec | NVENC (MHz) | 793MHz |
| Codec | NVDEC (MHz) | 806MHz |
| Image | VIC (% @ MHz) | 69%@678MHz |
| GPU | GR3D (% @ MHz) | 70%@611 |
| GPU | GR3D2 (% @ MHz) | 70%@611 |

Known Issues

At the time of writing, the design is under development, and there are certain issues that need to be addressed:

- Image quality on individual cameras is very poor. This is a work in progress and is expected to be fixed in the near future.

- Delay during initialization.