Image Stitching for NVIDIA Jetson/User Guide/Controlling the Stitcher


Latest revision as of 12:00, 26 February 2023


This page provides a basic description of the parameters required when building a cudastitcher pipeline, as well as an explanation of the workflow used when working with the stitcher.


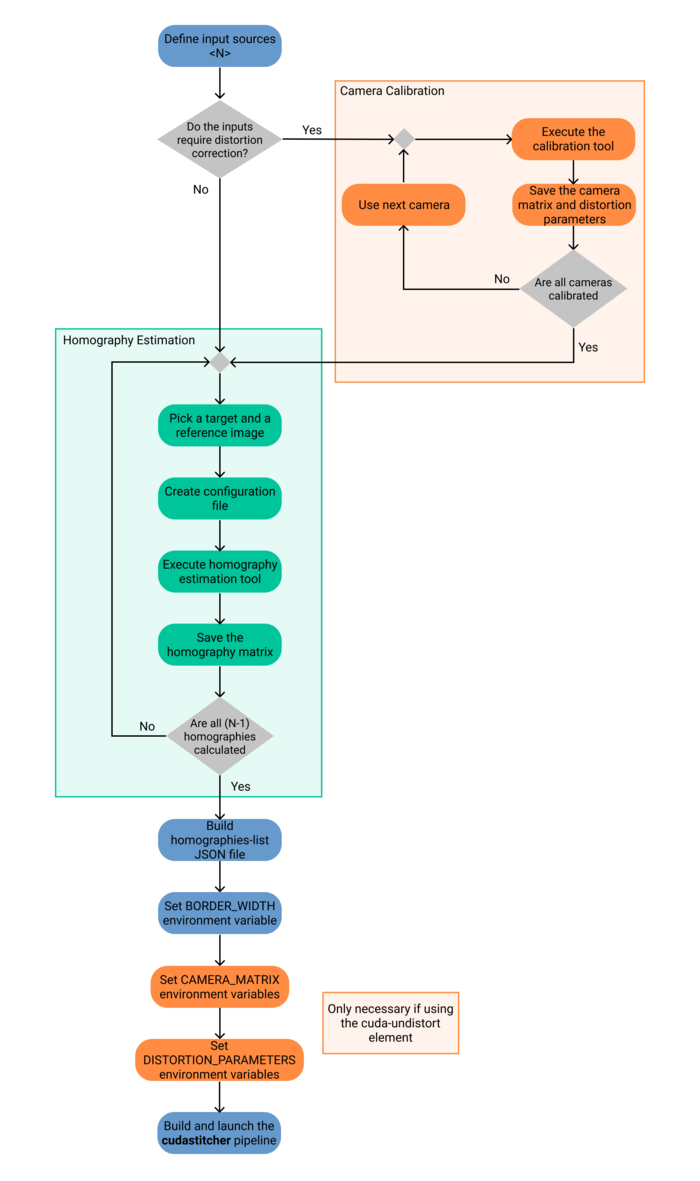

Workflow diagram

The following diagram provides a visual representation of the workflow needed when using the stitcher, as well as the auxiliary tools required. The workflow overview provides a more detailed written version of the workflow and links to the required wikis.

Workflow parameters

When using the stitcher, acquiring and selecting the parameters are crucial steps to get the expected output. These parameters can be obtained with scripts provided with the stitcher itself. They are:

Undistort parameters

- If you are using the cuda-undistort element together with the stitcher, additional parameters must be obtained. Information about those parameters and how to set them can be found in the CUDA undistort wiki.

Homography List

- This parameter defines the transformations between pairs of images. It is specified with the `homography-list` option and is set as a JSON-formatted string. The JSON is constructed manually from the individual homographies calculated with the homography estimation tool.

- Read the Homography estimation guide to learn how to calculate the homography between two images.

- Then visit the Homography list guide to better understand its format and how to construct it based on individual homographies.
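As an illustration of the manual assembly step, the following Python sketch packs per-pair 3x3 homographies into a compact, single-line JSON string of the kind passed to the `homography-list` option. The schema shown (a top-level `homographies` array whose entries hold `images` with `target`/`reference` indices and a `matrix` with keys `h00` to `h22`) and the function name `build_homography_list` are assumptions for illustration; the Homography list guide defines the format the stitcher actually expects.

```python
import json


def build_homography_list(pairs):
    """Build a compact JSON string from (target, reference, matrix) tuples.

    ASSUMPTION: the schema below (homographies/images/matrix/hRC keys) is
    illustrative only; check the Homography list guide for the real format.
    """
    entries = []
    for target, reference, matrix in pairs:
        # Each homography must be a full 3x3 matrix.
        if len(matrix) != 3 or any(len(row) != 3 for row in matrix):
            raise ValueError("each homography must be a 3x3 matrix")
        # Flatten the matrix into h00..h22 keys (row index, column index).
        flat = {f"h{r}{c}": matrix[r][c] for r in range(3) for c in range(3)}
        entries.append({"images": {"target": target, "reference": reference},
                        "matrix": flat})
    # separators=(",", ":") drops all whitespace so the string fits on one line.
    return json.dumps({"homographies": entries}, separators=(",", ":"))


if __name__ == "__main__":
    # Hypothetical homography between image 1 (target) and image 0 (reference):
    # a pure horizontal translation.
    h_1_0 = [[1, 0, 510], [0, 1, 0], [0, 0, 1]]
    print(build_homography_list([(1, 0, h_1_0)]))
```

Emitting the JSON without whitespace keeps it on one line, which is convenient when the string is embedded in a shell command or pipeline description.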

Refinement parameters

- The stitcher is capable of refining the homographies during execution. Multiple parameters are associated with this feature; see the Homography refinement guide for more information.

Blending Width

- This parameter sets the number of pixels to be blended between two images. It can be set with the `border-width` option. The Blending guide provides more information on the topic.
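To make the idea of a blending width concrete, the sketch below computes weights for a simple linear ramp (feathering) across `width` overlapping pixels and uses them to mix two rows of pixel values. Linear feathering is a generic technique chosen here for illustration; it is not necessarily the blending method cudastitcher implements, and the function names are hypothetical.

```python
def linear_blend_weights(width):
    """Weights applied to the left image across `width` blended columns.

    The weight falls linearly from near 1 to near 0; the right image
    receives (1 - weight). Generic feathering, not the stitcher's internals.
    """
    if width <= 0:
        return []
    # Sample at pixel centres so neither end is exactly 0 or 1.
    return [1.0 - (i + 0.5) / width for i in range(width)]


def blend_rows(left, right, width):
    """Blend the last `width` pixels of `left` with the first `width` of `right`.

    ASSUMPTION: the two rows are already aligned so that exactly `width`
    pixels overlap at the seam.
    """
    if width > min(len(left), len(right)):
        raise ValueError("blending width exceeds the available overlap")
    weights = linear_blend_weights(width)
    overlap_left = left[len(left) - width:]
    overlap_right = right[:width]
    # Weighted mix inside the blending region only.
    blended = [w * a + (1.0 - w) * b
               for w, a, b in zip(weights, overlap_left, overlap_right)]
    return left[:len(left) - width] + blended + right[width:]


if __name__ == "__main__":
    # A bright row meeting a dark row with a 2-pixel blending region.
    print(blend_rows([100] * 4, [0] * 4, 2))
```

A wider blending region spreads the transition over more pixels, which hides seams better at the cost of mixing more of each image.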

Workflow overview

The following are the basic steps, in execution order, needed to configure the stitcher properly and acquire the parameters for its usage.

1. Know your input sources (N)
2. Apply distortion correction to the inputs (only if necessary); see the CUDA Accelerated GStreamer Camera Undistort Camera Calibration User Guide for more details
   - Run the calibration tool for each source that requires it
     - input: Multiple images of a calibration pattern
     - output: Camera matrix and distortion parameters
   - Save the camera matrix and distortion parameters for each camera, since they will be required to build the pipelines
   - Repeat until every input has been corrected
3. Calculate all (N-1) homographies between pairs of adjacent images; see the Image Stitching Homography estimation User Guide for more details
   - Run the homography estimation tool for each image (target) and its reference (fixed)
     - input: Two still images from adjacent sources with overlap and a JSON config file
     - output: Homography matrix that describes the transformation between input sources
   - Save the homography matrices; they will be required in the next steps
   - Repeat until each of the N-1 images other than the original reference image has been a target
4. Assemble the homography list JSON file
   - This step is done manually; see the Image Stitching Homography list User Guide for more details
5. Set the blending width; see the Image Stitching Blending User Guide for more details
6. Build and launch the stitcher pipeline; see the Image Stitching GStreamer Pipeline Examples
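The homography step can be planned before running the estimation tool. Assuming the N cameras are arranged in a single row, indexed 0 to N-1 from left to right, with only adjacent images overlapping (an assumption; other layouts need a different pairing), the sketch below lists the N-1 (target, reference) pairs so that each target is paired with its neighbour on the side of the original reference image. The function name `homography_pairs` is hypothetical.

```python
def homography_pairs(n, reference=0):
    """Return the N-1 (target, reference) pairs for a row of n cameras.

    ASSUMPTION: cameras are indexed 0..n-1 in a single row and only
    adjacent images overlap. Each target is paired with its neighbour
    that lies closer to the original reference image.
    """
    if n < 2:
        raise ValueError("stitching needs at least two input sources")
    if not 0 <= reference < n:
        raise ValueError("reference index out of range")
    pairs = []
    # Images to the right of the reference use their left neighbour.
    for target in range(reference + 1, n):
        pairs.append((target, target - 1))
    # Images to the left of the reference use their right neighbour.
    for target in range(reference - 1, -1, -1):
        pairs.append((target, target + 1))
    return pairs


if __name__ == "__main__":
    for target, ref in homography_pairs(4, reference=1):
        print(f"estimate homography: target={target} reference={ref}")
```

Every image except the original reference appears exactly once as a target, which matches the (N-1) count in the overview.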