Difference between revisions of "GstInference/Benchmarks"


Line 1,193:

'TinyYoloV3 \n TensorRT'], //Column 6

['Nano', 30.560, 0, 9.129, 4.823, 14.602, 0],

− ['TX2',

+ ['TX2', 62.511, 0, 20.240, 11.150, 33.756, 0],

['Xavier', 92.559, 0, 24.854, 13.560, 69.715, 0]

]);

Line 1,201:

'TensorRT \n TX2', //Column 2

'TensorRT \n Xavier'], //Column 3

− ['InceptionV1', 36.754,

+ ['InceptionV1', 36.754, 62.511, 92.559], //row 1

['InceptionV2', 0, 0, 0], //row 2

− ['InceptionV3', 10.892,

+ ['InceptionV3', 10.892, 20.240, 24.854], //row 3

− ['InceptionV4', 4.823,

+ ['InceptionV4', 4.823, 11.150, 13.560], //row 4

− ['TinyYoloV2', 14.602,

+ ['TinyYoloV2', 14.602, 33.756, 69.715], //row 5

['TinyYoloV3', 0, 0, 0] //row 6

]);

Revision as of 09:11, 14 July 2020

Make sure you also check GstInference's companion project: R2Inference


GstInference Benchmarks

The following benchmarks were run on a 1920x1080@60 source video, using the base GStreamer pipeline and environment variables shown below:

$ VIDEO_FILE='video.mp4'

$ MODEL_LOCATION='graph_inceptionv1_tensorflow.pb'

$ INPUT_LAYER='input'

$ OUTPUT_LAYER='InceptionV1/Logits/Predictions/Reshape_1'

The environment variables were changed according to the model used (Inception V1, V2, V3, or V4).

GST_DEBUG=inception1:1 gst-launch-1.0 filesrc location=$VIDEO_FILE ! decodebin ! videoconvert ! videoscale ! queue ! net.sink_model inceptionv1 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER net.src_model ! perf ! fakesink -v

The Desktop PC had the following specifications:

- Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz

- 8 GB RAM

- Cedar [Radeon HD 5000/6000/7350/8350 Series]

- Linux 4.15.0-54-generic x86_64 (Ubuntu 16.04)

The Jetson Xavier power modes used were 2 and 6 (more information: Supported Modes and Power Efficiency)

- View current power mode:

$ sudo /usr/sbin/nvpmodel -q

- Change current power mode:

$ sudo /usr/sbin/nvpmodel -m x

Where x is the power mode ID (e.g. 0, 1, 2, 3, 4, 5, 6).
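The mode switch above can be wrapped in a small dry-run helper when scripting benchmark runs. The following is only a sketch: `nvpmodel_cmd` is a hypothetical name, the accepted range 0 to 6 simply mirrors the example IDs listed above, and the helper prints the command instead of executing it so it can be checked on a machine without `nvpmodel`.

```shell
#!/usr/bin/env bash
# Dry-run helper (hypothetical name): prints the nvpmodel command for a
# power mode ID instead of running it.
# Assumption: valid IDs are 0-6, taken from the example list above.
nvpmodel_cmd() {
  local mode="$1"
  case "$mode" in
    [0-6]) echo "sudo /usr/sbin/nvpmodel -m ${mode}" ;;
    *) echo "invalid power mode ID: ${mode}" >&2; return 1 ;;
  esac
}

# The two power modes used for the Jetson Xavier benchmarks
for mode in 2 6; do
  nvpmodel_cmd "$mode"
done
```

Piping the printed line to a shell, or replacing `echo` with the command itself, would apply the mode on the target board.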

Summary

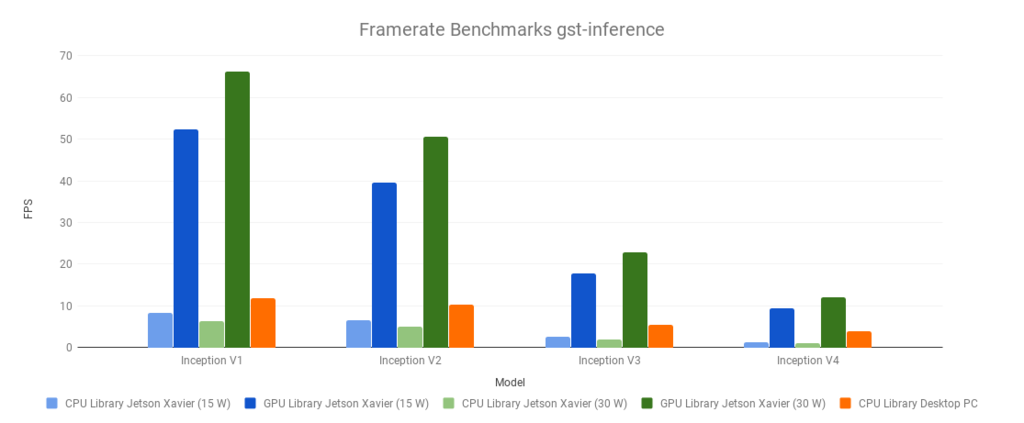

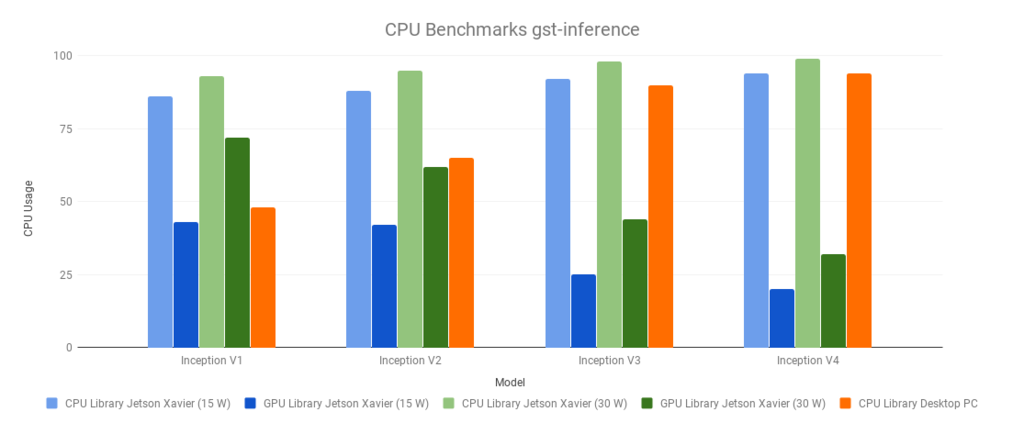

Desktop PC (CPU Library)

| Model | Framerate (FPS) | CPU Usage (%) |
|---|---|---|
| Inception V1 | 11.89 | 48 |
| Inception V2 | 10.33 | 65 |
| Inception V3 | 5.41 | 90 |
| Inception V4 | 3.81 | 94 |

Jetson Xavier (15W)

| Model | CPU Library Framerate (FPS) | CPU Library CPU Usage (%) | GPU Library Framerate (FPS) | GPU Library CPU Usage (%) |
|---|---|---|---|---|
| Inception V1 | 8.24 | 86 | 52.3 | 43 |
| Inception V2 | 6.58 | 88 | 39.6 | 42 |
| Inception V3 | 2.54 | 92 | 17.8 | 25 |
| Inception V4 | 1.22 | 94 | 9.4 | 20 |

Jetson Xavier (30W)

| Model | CPU Library Framerate (FPS) | CPU Library CPU Usage (%) | GPU Library Framerate (FPS) | GPU Library CPU Usage (%) |
|---|---|---|---|---|
| Inception V1 | 6.41 | 93 | 66.27 | 72 |
| Inception V2 | 5.11 | 95 | 50.59 | 62 |
| Inception V3 | 1.96 | 98 | 22.95 | 44 |
| Inception V4 | 0.98 | 99 | 12.14 | 32 |
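To compare the CPU and GPU libraries in the tables above, it can help to express the framerates as a ratio. The sketch below uses a hypothetical `speedup` helper built on `awk`; the input values are the Inception V1 framerates copied from the two Jetson Xavier tables.

```shell
#!/usr/bin/env bash
# speedup GPU_FPS CPU_FPS (hypothetical helper): prints the GPU/CPU
# framerate ratio rounded to two decimals.
speedup() {
  # LC_ALL=C keeps the decimal separator a dot regardless of locale
  LC_ALL=C awk -v gpu="$1" -v cpu="$2" 'BEGIN { printf "%.2f\n", gpu / cpu }'
}

# Inception V1 framerates from the Jetson Xavier tables
echo "15W: $(speedup 52.3 8.24)x"
echo "30W: $(speedup 66.27 6.41)x"
```

For Inception V1 this gives a GPU-over-CPU ratio of roughly 6x in the 15W mode and roughly 10x in the 30W mode.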

Framerate

CPU Usage

TensorFlow Lite Benchmarks

FPS measurement

CPU usage measurement

Test benchmark video

The following video was used to perform the benchmark tests.

To download the video, right-click it, select 'Save video as', and save it to your computer.

ONNXRT Benchmarks

The Desktop PC had the following specifications:

- Intel(R) Core(TM) i7-7700HQ CPU @ 2.80GHz

- 12 GB RAM

- Linux 4.15.0-106-generic x86_64 (Ubuntu 16.04)

- GStreamer 1.8.3

The following was the GStreamer pipeline used to obtain the results:

# MODELS_PATH has the following structure

#/path/to/models/

#├── InceptionV1_onnxrt

#│ ├── graph_inceptionv1_info.txt

#│ ├── graph_inceptionv1.onnx

#│ └── labels.txt

#├── InceptionV2_onnxrt

#│ ├── graph_inceptionv2_info.txt

#│ ├── graph_inceptionv2.onnx

#│ └── labels.txt

#├── InceptionV3_onnxrt

#│ ├── graph_inceptionv3_info.txt

#│ ├── graph_inceptionv3.onnx

#│ └── labels.txt

#├── InceptionV4_onnxrt

#│ ├── graph_inceptionv4_info.txt

#│ ├── graph_inceptionv4.onnx

#│ └── labels.txt

#├── TinyYoloV2_onnxrt

#│ ├── graph_tinyyolov2_info.txt

#│ ├── graph_tinyyolov2.onnx

#│ └── labels.txt

#└── TinyYoloV3_onnxrt

# ├── graph_tinyyolov3_info.txt

# ├── graph_tinyyolov3.onnx

# └── labels.txt

model_array=(inceptionv1 inceptionv2 inceptionv3 inceptionv4 tinyyolov2 tinyyolov3)
model_upper_array=(InceptionV1 InceptionV2 InceptionV3 InceptionV4 TinyYoloV2 TinyYoloV3)
MODELS_PATH=/path/to/models/
# Placeholder: VIDEO_PATH is used by the pipeline but was not defined in the original listing
VIDEO_PATH=/path/to/video.mp4
INTERNAL_PATH=onnxrt
EXTENSION=".onnx"

# Run the pipeline once per model. The loop is an assumption: the original
# listing indexes both arrays with i but does not show how i is set.
for i in "${!model_array[@]}"; do
  gst-launch-1.0 \
  filesrc location=$VIDEO_PATH num-buffers=600 ! decodebin ! videoconvert ! \
  perf print-arm-load=true name=inputperf ! tee name=t t. ! videoscale ! queue ! net.sink_model t. ! queue ! net.sink_bypass \
  ${model_array[i]} backend=onnxrt name=net \
  model-location="${MODELS_PATH}${model_upper_array[i]}_${INTERNAL_PATH}/graph_${model_array[i]}${EXTENSION}" \
  net.src_bypass ! perf print-arm-load=true name=outputperf ! videoconvert ! fakesink sync=false
done
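The `model-location` value in the pipeline above is assembled from the directory layout shown in the comment tree. As a rough illustration, the sketch below prints the path that would be built for each model. `model_location` is a hypothetical helper, and unlike the listing it derives the lowercase name with `tr` instead of keeping a second array, which is an assumption made only to keep the example short and portable.

```shell
#!/usr/bin/env bash
MODELS_PATH=/path/to/models/
INTERNAL_PATH=onnxrt
EXTENSION=".onnx"

# model_location NAME (hypothetical helper): prints the .onnx path for a
# model directory prefix such as InceptionV1, following the tree layout above.
model_location() {
  local upper="$1"
  local lower
  # Assumption: the graph file name is the lowercase form of the directory prefix
  lower=$(printf '%s' "$upper" | tr '[:upper:]' '[:lower:]')
  echo "${MODELS_PATH}${upper}_${INTERNAL_PATH}/graph_${lower}${EXTENSION}"
}

for name in InceptionV1 InceptionV2 InceptionV3 InceptionV4 TinyYoloV2 TinyYoloV3; do
  model_location "$name"
done
```

Printing the paths before launching the pipelines is a cheap way to catch a mismatch between `MODELS_PATH` and the actual directory layout.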

FPS Measurements

CPU Load Measurements

Test benchmark video

The following video was used to perform the benchmark tests.

To download the video, right-click it, select 'Save video as', and save it to your computer.

TensorRT Benchmarks

FPS Measurements

CPU Load Measurements