Line 28:

  ==GstInference Benchmarks Overview==

− There are two versions of GstInference: a CPU-based non-accelerated version that you can download for free from the below mentioned links :<br>
+ [[File:With coral intelligence.png|800px|frameless|center]]

− * [https://shop.ridgerun.com/products/tensorflow-cpu-binaries-for-nvidia-xavier-boards-jetpack-4-2?_pos=18&_sid=5954f53ef&_ss=r NVIDIA<sup>®</sup>Jetson Xavier™] <br>
− * [https://shop.ridgerun.com/products/tensorflow-cpu-binaries-for-nvidia-tx2-boards-jetpack-4-2?_pos=20&_sid=5954f53ef&_ss=r NVIDIA<sup>®</sup>Jetson™ TX2 platform] <br>
− * [https://shop.ridgerun.com/products/tensorflow-cpu-binaries-for-nvidia-nano-boards-jetpack-4-2-2?_pos=5&_sid=a9b6e34ab&_ss=r NVIDIA<sup>®</sup>Jetson Nano™ platform] <br>
+ By using GstInference as the interface between Google’s Coral board and GStreamer, users can:
+ * Easily prototype GStreamer pipelines with common and basic GStreamer tools such as gst-launch and GStreamer Daemon.
+ * Easily test and benchmark TFLite models using GStreamer with Google's Coral board.
+ * Enable a world of possibilities to use Google’s Coral board with video feeds from cameras, video files and network streams, and process the prediction information (detection, classification, estimation, segmentation) to monitor events and trigger actions.
+ * Develop intelligent media servers with recording, streaming, capture, playback and display features.
+ * Abstract GStreamer complexity in terms of buffers and events handling.
+ * Abstract TensorFlow Lite complexity and configuration.
+ * Make use of GstInference helper elements and API to visualize and easily extract readable prediction information.

− A GPU-based hardware-accelerated version uses the GPU to pump up the performance of the algorithms and provides a dramatic improvement in the system as depicted in the figure below. You can download this accelerated version (supports GPU and CPU both) for free from the below mentioned links: <br>
−
− * [https://shop.ridgerun.com/products/tensorflow-gpu-binaries-for-nvidia-xavier-boards-jetpack-4-2-2?_pos=15&_sid=c9ceae099&_ss=r NVIDIA<sup>®</sup>Jetson Xavier™] <br>
− * [https://shop.ridgerun.com/products/tensorflow-gpu-binaries-for-nvidia-tx2-boards-jetpack-4-2-2?_pos=16&_sid=5954f53ef&_ss=r NVIDIA<sup>®</sup>Jetson™ TX2 platform] <br>
− * [https://shop.ridgerun.com/products/tensorflow-gpu-binaries-for-nvidia-nano-boards-jetpack-4-2?_pos=17&_sid=5954f53ef&_ss=r NVIDIA<sup>®</sup>Jetson Nano™ platform] <br>
−
− or you can [https://www.ridgerun.com/contact contact us] if you have any questions.
+ GstInference can also be used in other platforms (Jetson, Desktop).

  For further information you can check: [[GstInference/Benchmarks|Benchmarks]] page.

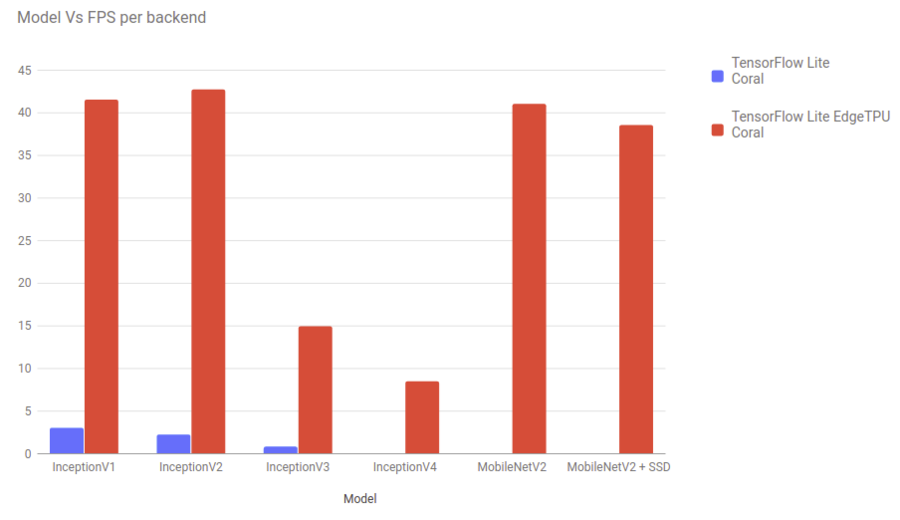

| | + | == Framerate == |

| | + | [[File:Model vs fps coral.png|900px|frameless|center]] |

| | | | |

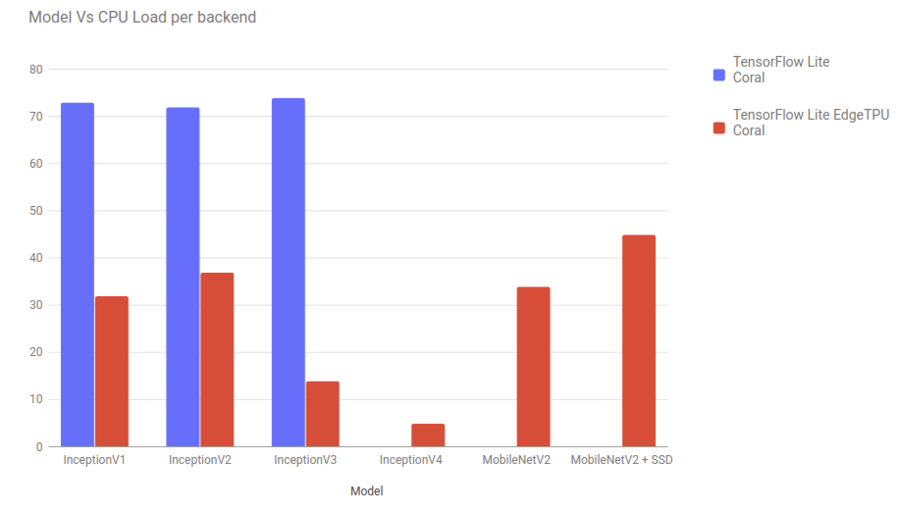

− === FPS Measurements ===
−
− <html>
−
− <style>
− .button {
− background-color: #008CBA;
− border: none;
− color: white;
− padding: 15px 32px;
− text-align: center;
− text-decoration: none;
− display: inline-block;
− font-size: 16px;
− margin: 4px 2px;
− cursor: pointer;
− }
− </style>
−
− <div id="chart_fps_coral" style="margin: auto; width: 800px; height: 500px;"></div>
−
− <script>
− google.charts.load('current', {'packages':['corechart', 'bar']});
− google.charts.setOnLoadCallback(drawStuffCoralFps);
−
− function drawStuffCoralFps() {
−
− var chartDiv_Fps_Coral = document.getElementById('chart_fps_coral');
−
− var table_models_fps_coral = google.visualization.arrayToDataTable([
− ['Model', //Column 0
− 'TensorFlow Lite \n Coral',
− 'TensorFlow Lite EdgeTPU \n Coral'], //Column 1
− ['InceptionV1', 3.11, 41.5], //row 1
− ['InceptionV2', 2.31, 42], //row 2
− ['InceptionV3', 0.9, 15.2], //row 3
− ['InceptionV4', 0, 8.41], //row 4
− ['TinyYoloV2', 0, 0], //row 5
− ['TinyYoloV3', 0, 0] //row 6
− ]);
− var Coral_materialOptions_fps = {
− width: 900,
− chart: {
− title: 'Model Vs FPS per backend',
− },
− series: {
− },
− axes: {
− y: {
− distance: {side: 'left',label: 'FPS'}, // Left y-axis.
− }
− }
− };
−
− var materialChart_coral_fps = new google.charts.Bar(chartDiv_Fps_Coral);
− view_coral_fps = new google.visualization.DataView(table_models_fps_coral);
−
− function drawMaterialChart() {
− var materialChart_coral_fps = new google.charts.Bar(chartDiv_Fps_Coral);
− materialChart_coral_fps.draw(table_models_fps_coral, google.charts.Bar.convertOptions(Coral_materialOptions_fps));
−
− init_charts();
− }
− function init_charts(){
− view_coral_fps.setColumns([0,1, 2]);
− materialChart_coral_fps.draw(view_coral_fps, Coral_materialOptions_fps);
− }
− drawMaterialChart();
− }
−
− </script>
−
− </html>
−
− === CPU Load Measurements ===
−
− <html>
−
− <style>
− .button {
− background-color: #008CBA;
− border: none;
− color: white;
− padding: 15px 32px;
− text-align: center;
− text-decoration: none;
− display: inline-block;
− font-size: 16px;
− margin: 4px 2px;
− cursor: pointer;
− }
− </style>
−
− <div id="chart_cpu_coral" style="margin: auto; width: 800px; height: 500px;"></div>
−
− <script>
− google.charts.load('current', {'packages':['corechart', 'bar']});
− google.charts.setOnLoadCallback(drawStuffCoralCpu);
−
− function drawStuffCoralCpu() {
−
− var chartDiv_Cpu_Coral = document.getElementById('chart_cpu_coral');
−
− var table_models_cpu_coral = google.visualization.arrayToDataTable([
− ['Model', //Column 0
− 'TensorFlow Lite \n Coral',
− 'TensorFlow Lite EdgeTPU \n Coral'], //Column 1
− ['InceptionV1', 73, 32], //row 1
− ['InceptionV2', 72, 32], //row 2
− ['InceptionV3', 74, 12], //row 3
− ['InceptionV4', 0, 6], //row 4
− ['TinyYoloV2', 0, 0], //row 5
− ['TinyYoloV3', 0, 0] //row 6
− ]);
− var Coral_materialOptions_cpu = {
− width: 900,
− chart: {
− title: 'Model Vs CPU Load per backend',
− },
− series: {
− },
− axes: {
− y: {
− distance: {side: 'left',label: 'CPU Load'}, // Left y-axis.
− }
− }
− };
−
− var materialChart_coral_cpu = new google.charts.Bar(chartDiv_Cpu_Coral);
− view_coral_cpu = new google.visualization.DataView(table_models_cpu_coral);
−
− function drawMaterialChart() {
− var materialChart_coral_cpu = new google.charts.Bar(chartDiv_Cpu_Coral);
− materialChart_coral_cpu.draw(table_models_cpu_coral, google.charts.Bar.convertOptions(Coral_materialOptions_cpu));
−
− init_charts();
− }
− function init_charts(){
− view_coral_cpu.setColumns([0,1, 2]);
− materialChart_coral_cpu.draw(view_coral_cpu, Coral_materialOptions_cpu);
− }
− drawMaterialChart();
− }
−
− </script>
−
− </html>
+ == CPU Usage ==
+ [[File:Model vs cpu coral.png|900px|frameless|center]]

  === GstInference Videos ===
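The two Google Charts tables removed in this revision (`table_models_fps_coral` and `table_models_cpu_coral`) compare TensorFlow Lite running on the Coral CPU with TensorFlow Lite EdgeTPU. As a minimal sketch, the plain JavaScript below condenses them into a per-model frame-rate speedup and CPU load difference, with no Google Charts dependency. The values are copied from those tables; treating a 0 as "not measured" is an assumption, since the charts do not say what it means, and `benchmarks`, `round1` and `compareBackends` are hypothetical names used only for illustration.

```javascript
// Values copied from the chart tables removed in this revision.
// Columns: model, TFLite CPU fps, TFLite EdgeTPU fps, TFLite CPU load, TFLite EdgeTPU load.
// Assumption: a 0 marks a model/backend pair that was not measured.
const benchmarks = [
  ["InceptionV1", 3.11, 41.5, 73, 32],
  ["InceptionV2", 2.31, 42, 72, 32],
  ["InceptionV3", 0.9, 15.2, 74, 12],
  ["InceptionV4", 0, 8.41, 0, 6],
  ["TinyYoloV2", 0, 0, 0, 0],
  ["TinyYoloV3", 0, 0, 0, 0],
];

// Round to one decimal place and return a number (toFixed returns a string).
function round1(x) {
  return Number(x.toFixed(1));
}

// Compare the EdgeTPU backend against the CPU-only backend for each model.
// Rows with a zero (assumed missing) value on either backend are skipped.
function compareBackends(rows) {
  return rows
    .filter(([, cpuFps, tpuFps, cpuLoad, tpuLoad]) =>
      cpuFps > 0 && tpuFps > 0 && cpuLoad > 0 && tpuLoad > 0)
    .map(([model, cpuFps, tpuFps, cpuLoad, tpuLoad]) => ({
      model,
      fpsSpeedup: round1(tpuFps / cpuFps), // EdgeTPU fps divided by CPU fps
      cpuLoadDrop: cpuLoad - tpuLoad,      // difference in CPU load points
    }));
}

console.log(compareBackends(benchmarks));
```

Run with Node.js, this keeps only InceptionV1, InceptionV2 and InceptionV3, the models with non-zero values on both backends, giving EdgeTPU speedups of 13.3x, 18.2x and 16.9x and CPU load reductions of 41, 40 and 62 points respectively.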