GstInference - Introduction

Make sure you also check GstInference's companion project: R2Inference

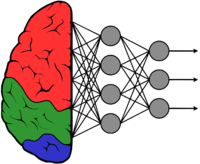

Deep learning has revolutionized classic computer vision techniques, enabling more intelligent and autonomous systems. Multimedia frameworks such as GStreamer are a natural complement to automatic recognition and classification systems. GstInference is an ongoing open-source project from RidgeRun Engineering that allows easy integration of deep learning networks into your existing pipeline.

General Concepts

Software Stack

The following diagram shows how GstInference fits in with other software and hardware modules.

- HW Modules

- A deep learning model can run inference on different hardware units. In the most general case, the general-purpose CPU may be used, even though performance will be limited. This is ideal for quick prototyping without specialized hardware.

- One of the most popular processors for inference is the GPGPU. Platforms such as CUDA and OpenCL expose the parallel processing power of these units, allowing them to execute deep learning models very efficiently.

- More recently, embedded systems have been equipped with TPUs (Tensor Processing Units) or DLAs (Deep Learning Accelerators), which are HW units whose architecture is designed specifically to host deep learning models.

- Third party backends

- A variety of machine learning frameworks may be used for deep learning inference. Among the most popular are TensorFlow, Caffe, TensorRT and NCSDK.

- They serve as abstractions for one or several HW units. For example, TensorFlow may be compiled to make use of the CPU or the GPU. Similarly, TensorRT may be configured to use the GPU or the DLA.

- This provides the benefit of exposing a single interface for several hardware modules.

- R2Inference

- R2Inference is an open source project by RidgeRun that serves as an abstraction layer in C/C++ for a variety of machine learning frameworks.

- As such, a single C/C++ application may work with a Caffe or TensorFlow model, for example.

- This is especially useful for hybrid solutions, where multiple models need to be inferred. R2Inference may be able to execute one model on the DLA and another on the CPU, for instance.

- GstInference

- GstInference is the GStreamer wrapper over R2Inference. It provides base classes, utilities and a set of elements to bring inference to a GStreamer pipeline.

- Each model architecture requires custom image pre-processing and output interpretation. Similarly, each model has a predefined input format.

- Each model architecture (e.g., GoogLeNet) is represented as an independent GStreamer element (GstGoogleNet). A single element may infer models from different backends, transparently.
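The layering described above can be illustrated with a minimal sketch. It is not R2Inference's actual API: the class names (`Backend`, `FakeTensorFlow`, `FakeTensorRT`), the `make_engine` factory and the device strings are all hypothetical, and the "inference" is placeholder arithmetic. It only shows the idea of one interface in front of several frameworks, each of which targets one or more hardware units.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Type


class Backend(ABC):
    """Hypothetical stand-in for a third-party framework."""

    # Hardware units this backend can target (illustrative values only).
    supported_devices: List[str] = []

    def __init__(self, device: str) -> None:
        if device not in self.supported_devices:
            raise ValueError(f"{type(self).__name__} cannot run on {device}")
        self.device = device

    @abstractmethod
    def predict(self, frame: List[float]) -> List[float]:
        """Run one inference pass and return raw output scores."""


def _normalize(frame: List[float]) -> List[float]:
    # Placeholder math used instead of a real network.
    total = sum(frame) or 1.0
    return [value / total for value in frame]


class FakeTensorFlow(Backend):
    supported_devices = ["cpu", "gpu"]

    def predict(self, frame: List[float]) -> List[float]:
        return _normalize(frame)


class FakeTensorRT(Backend):
    supported_devices = ["gpu", "dla"]

    def predict(self, frame: List[float]) -> List[float]:
        return _normalize(frame)


# Registry of available backends, keyed by an assumed name.
BACKENDS: Dict[str, Type[Backend]] = {
    "tensorflow": FakeTensorFlow,
    "tensorrt": FakeTensorRT,
}


def make_engine(backend: str, device: str) -> Backend:
    """Factory playing the role of the abstraction layer."""
    return BACKENDS[backend](device)


# Hybrid setup: one model on the CPU, another on the DLA, same calling code.
cpu_engine = make_engine("tensorflow", "cpu")
dla_engine = make_engine("tensorrt", "dla")
for engine in (cpu_engine, dla_engine):
    print(type(engine).__name__, engine.device, engine.predict([1.0, 3.0]))
```

The calling code never branches on the framework in use, which is the property that would let a single element serve models from different backends.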

GstInference Hierarchy

The following diagram shows how different GstInference modules are related. Details have been omitted for simplicity.

- GstVideoInference

- This is the base class from which all custom architecture elements inherit. It handles everything related to R2Inference and provides two virtual methods for child classes: pre_process and post_process.

- The pre_process virtual method allows the subclass to pre-process the input image, for example by subtracting the mean and multiplying by a standard deviation.

- The post_process virtual method allows the subclass to interpret the output prediction, create the respective GstInferenceMeta and attach it to the output buffer.

- GstGoogleNet, GstResNet, GstTinyYolo...

- These subclasses represent a custom deep learning architecture. They provide specific implementations for the pre_process and post_process virtual methods.

- The same architecture element may infer models from several backends.

- GstInferenceMeta

- When inference has been made, the specific architecture subclass interprets the output prediction and translates it to a GstInferenceMeta.

- The different GstInferenceMeta types have fields for probabilities, bounding boxes and the object classes the model was trained for.

- GstInferenceAllocator

- There may be HW accelerators with special memory requirements. To fulfill these, GstVideoInference will propose an allocator to the upstream elements. This allocator will be encapsulated by R2Inference, and will vary according to the specific backend being used.
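The relationship between the base class, the architecture subclasses and the metadata can be sketched as a template-method pattern. This is a simplified illustration, not the real GObject code: `VideoInference`, `ToyClassifier`, `InferenceMeta`, `Buffer` and `fake_predict` are hypothetical names, and the mean, scale factor and labels are assumed values chosen only to keep the arithmetic easy to follow.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class InferenceMeta:
    """Hypothetical, simplified stand-in for GstInferenceMeta."""
    label: str
    probabilities: List[float]


@dataclass
class Buffer:
    """Hypothetical stand-in for a video buffer with attached metadata."""
    pixels: List[float]
    meta: Optional[InferenceMeta] = None


class VideoInference:
    """Base class: owns the inference flow, delegates model specifics."""

    def __init__(self, predict: Callable[[List[float]], List[float]]) -> None:
        # `predict` plays the role of the backend call made through R2Inference.
        self._predict = predict

    def pre_process(self, pixels: List[float]) -> List[float]:
        raise NotImplementedError

    def post_process(self, buffer: Buffer, prediction: List[float]) -> None:
        raise NotImplementedError

    def process(self, buffer: Buffer) -> Buffer:
        tensor = self.pre_process(buffer.pixels)
        prediction = self._predict(tensor)
        self.post_process(buffer, prediction)
        return buffer


class ToyClassifier(VideoInference):
    """Hypothetical architecture subclass, in the spirit of GstGoogleNet."""

    MEAN = 128.0         # assumed value
    SCALE = 1.0 / 128.0  # assumed factor (the "standard deviation" term)
    LABELS = ["cat", "dog"]

    def pre_process(self, pixels: List[float]) -> List[float]:
        # Subtract the mean, then multiply by the scale factor.
        return [(p - self.MEAN) * self.SCALE for p in pixels]

    def post_process(self, buffer: Buffer, prediction: List[float]) -> None:
        # Pick the most probable class and attach it to the buffer as metadata.
        best = max(range(len(prediction)), key=prediction.__getitem__)
        buffer.meta = InferenceMeta(self.LABELS[best], prediction)


def fake_predict(tensor: List[float]) -> List[float]:
    # Placeholder "model": shift inputs to be positive, then normalize.
    shifted = [t + 1.0 for t in tensor]
    total = sum(shifted) or 1.0
    return [s / total for s in shifted]


element = ToyClassifier(fake_predict)
result = element.process(Buffer(pixels=[64.0, 192.0]))
print(result.meta)
```

Supporting a new architecture in this sketch only means writing another subclass with its own `pre_process` and `post_process`; the flow in `process` and the backend call stay untouched.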

Anatomy of a GstInference element

Every GstInference element will have a structure as depicted in the following image.