GstInference - Example Applications - Classification

Make sure you also check GstInference's companion project: R2Inference

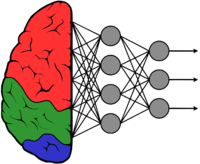

This example receives an input video file and classifies each frame into one of 1000 possible classes. For each classified frame, the application captures the signal emitted by GstInference and forwards the prediction to a placeholder for external logic. Simultaneously, the pipeline displays the captured frames with the associated label in a window. Note that the image currently being displayed does not necessarily match the one being handled by the signal. The display is meant for visualization and debugging purposes.
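As a rough illustration of what the placeholder for external logic might start with, the sketch below picks the most likely class out of a prediction buffer. It assumes the prediction arrives as a flat array of floats with one score per class (1000 for this model). That layout and the helper name `find_top_class` are assumptions made for illustration, not part of the GstInference API.

```c
#include <stddef.h>

/* Hypothetical helper (not part of GstInference): returns the index of the
 * highest score in a prediction buffer, or -1 if the buffer is NULL or empty.
 * Assumption: the prediction is a flat array of floats, one score per class. */
int
find_top_class (const float *scores, size_t num_classes, float *top_score)
{
  size_t i;
  size_t best = 0;

  if (scores == NULL || num_classes == 0)
    return -1;

  /* Linear scan keeping track of the best index seen so far */
  for (i = 1; i < num_classes; i++) {
    if (scores[i] > scores[best])
      best = i;
  }

  /* Optionally report the winning score to the caller */
  if (top_score != NULL)
    *top_score = scores[best];

  return (int) best;
}
```

If the signal handler receives the buffer together with its size in bytes, dividing that size by `sizeof (float)` would give `num_classes` under the same assumption. The returned index can then be mapped to a label from the ILSVRC2012 class list.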

The classification architecture used by the example is InceptionV4, trained on the Large Scale Visual Recognition Challenge 2012 (ILSVRC2012) dataset. A pre-trained model can be downloaded from the GstInference Model Zoo.

This example serves both as a demonstration and as a starting point for a classification application.

Building the Example

The example is built along with the GstInference project. Follow the instructions in Building the Plug-In to make sure all the dependencies are correctly fulfilled.

Once the project is built, the example may be rebuilt independently by running make within the example directory.

cd tests/examples/classification

make

The example is not meant to be installed.

Running the Example

The classification application provides a series of command-line options to control the behavior of the example. The basic usage is:

./classification -m MODEL -f FILE -b BACKEND [-v]

- -m|--model

- Mandatory. Path to the InceptionV4 trained model

- -f|--file

- Mandatory. Path to the video file to be used

- -b|--backend

- Mandatory. Name of the backend to be used. See Supported Backends for a list of possible options.

- -v|--verbose

- Optional. Run verbosely.

You may always run --help for further details.

./classification --help

Usage:
  classification [OPTIONS] - GstInference Classification Example

Help Options:
  -h, --help       Show help options
  --help-all       Show all help options
  --help-gst       Show GStreamer Options

Application Options:
  -v, --verbose    Be verbose
  -m, --model      Model path
  -f, --file       File path
  -b, --backend    Backend used for inference, example: tensorflow

Extending the Application

Troubleshooting