Image Stitching for NVIDIA Jetson - User Guide - Controlling the Stitcher


This page explains how to configure the stitcher to meet different application requirements.

The stitcher uses the following parameters to determine its runtime behavior:

- border-width: Width of the blended region between adjacent images.

- left-center-homography: Homography between the left and center images. Used only when stitching three images.

- right-center-homography: Homography between the right image and the reference image. Used when stitching either two or three images.

- async-homography: Enables asynchronous estimation of the homographies.

- async-homography-time: Time, in milliseconds, for the estimation of the homography.

- fov: Field of view of the cameras.

- overlap: Overlap between the images.

- stitching-quality-treshold: Stitching quality threshold, between 0 and 1, where 1 is the best score. New homographies that score below this value are rejected.
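The documented rule for stitching-quality-treshold can be modeled as a simple gate: a newly estimated homography (for example, one produced when async-homography is enabled) replaces the current one only if its quality score is not below the threshold. The sketch below is a hypothetical model for illustration only; `HomographyGate` and `offer` are made-up names, not part of the stitcher's API, and this page does not describe how the stitcher computes the score itself.

```python
from dataclasses import dataclass
from typing import List, Optional

Matrix = List[List[float]]


@dataclass
class HomographyGate:
    """Hypothetical model of the stitching-quality-treshold rule (not the plugin's code)."""

    threshold: float
    current: Optional[Matrix] = None

    def __post_init__(self) -> None:
        # The documented threshold range is [0, 1], where 1 is the best score.
        if not 0.0 <= self.threshold <= 1.0:
            raise ValueError("threshold must be between 0 and 1")

    def offer(self, candidate: Matrix, score: float) -> bool:
        """Adopt `candidate` unless its score is below the threshold."""
        if score < self.threshold:
            return False  # keep the previously accepted homography
        self.current = candidate
        return True


if __name__ == "__main__":
    gate = HomographyGate(threshold=0.8)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(gate.offer(identity, score=0.5))  # rejected, prints False
    print(gate.offer(identity, score=0.9))  # accepted, prints True
```

A score exactly equal to the threshold is accepted here, since the documented rule only rejects values below it.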

All of these properties should be configured at start-up. The stitcher currently supports two modes: two-image and three-image stitching. In two-image mode, the left image is the reference and the right image is transformed to match it; in three-image mode, the center image is the reference and both side images are transformed.
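The mode rules above can be summarized as a small lookup of which image is the reference and which homography properties must be set. This is an illustrative sketch; `stitching_layout` is a hypothetical helper, and the property names are the ones used in the example pipeline on this page.

```python
from typing import Dict, List, Union

Layout = Dict[str, Union[str, List[str]]]


def stitching_layout(num_images: int) -> Layout:
    """Return the reference image and the homography properties a mode needs."""
    if num_images == 2:
        # Two-image mode: the left image is the reference, the right one is warped.
        return {
            "reference": "left",
            "warped": ["right"],
            "homographies": ["right-center-homography"],
        }
    if num_images == 3:
        # Three-image mode: the center image is the reference, both sides are warped.
        return {
            "reference": "center",
            "warped": ["left", "right"],
            "homographies": ["left-center-homography", "right-center-homography"],
        }
    raise ValueError("the stitcher supports two or three images")


if __name__ == "__main__":
    for n in (2, 3):
        print(n, stitching_layout(n))
```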

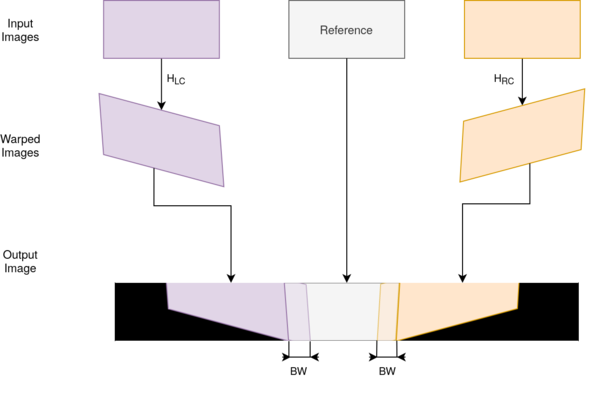

In the following image the effect of these parameters can be seen:

The first stitching step is image warping: each non-reference input image is transformed with its corresponding homography so that it lines up with the reference image. Depending on the camera setup, this can distort the input image; note that the distortion shown in the image above is exaggerated.
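Warping applies each homography as a 3x3 projective transform in homogeneous coordinates. The homography properties on this page are JSON strings with keys h00 to h22, so the sketch below parses one into a matrix and maps a single pixel. It assumes the matrix maps coordinates of the image being warped into the reference image's frame; this page does not state the direction, and `parse_homography` and `warp_point` are hypothetical helpers, not stitcher functions.

```python
import json
from typing import List, Tuple

Matrix = List[List[float]]


def parse_homography(text: str) -> Matrix:
    """Turn a property string with keys h00..h22 into a 3x3 row-major matrix."""
    values = json.loads(text)
    return [[float(values[f"h{r}{c}"]) for c in range(3)] for r in range(3)]


def warp_point(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Apply a projective transform to (x, y) using homogeneous coordinates."""
    xs = h[0][0] * x + h[0][1] * y + h[0][2]
    ys = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return xs / w, ys / w  # divide by w to return to pixel coordinates


if __name__ == "__main__":
    # Matrix copied from the LC_HOMOGRAPHY value in the example pipeline.
    lc = parse_homography(
        '{"h00": 7.3851e-01, "h01": 1.0431e-01, "h02": 1.4347e+03,'
        ' "h10": -1.0795e-01, "h11": 9.8914e-01, "h12": -9.3916e+00,'
        ' "h20": -2.3449e-04, "h21": 3.3206e-05, "h22": 1.0000e+00}'
    )
    print(warp_point(lc, 0.0, 0.0))
    print(warp_point(lc, 100.0, 50.0))
```

The non-zero h20 and h21 terms make w vary across the image, which is what produces the perspective-style distortion described above.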

To learn how to calculate the homographies, visit the following page.
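Once a homography has been estimated, it must be written in the string format used by the example pipeline. The sketch below is a hypothetical helper, `homography_to_property`, that formats any 3x3 matrix (for example, the first value returned by OpenCV's `cv2.findHomography`) as a JSON object with keys h00 to h22. It assumes the stitcher parses the property as JSON, so plain decimal numbers are as valid as the exponent notation in the example; it also normalizes the matrix so that h22 is 1, matching the example values.

```python
import json
from typing import Sequence


def homography_to_property(h: Sequence[Sequence[float]]) -> str:
    """Format a 3x3 homography as a JSON string with keys h00..h22."""
    if len(h) != 3 or any(len(row) != 3 for row in h):
        raise ValueError("expected a 3x3 matrix")
    scale = float(h[2][2])
    if scale == 0.0:
        raise ValueError("h22 must be non-zero to normalize the matrix")
    # A homography is only defined up to scale; dividing by h22 makes h22 == 1.
    entries = {f"h{r}{c}": float(h[r][c]) / scale for r in range(3) for c in range(3)}
    return json.dumps(entries)


if __name__ == "__main__":
    print(homography_to_property([[2.0, 0.0, 10.0], [0.0, 2.0, -4.0], [0.0, 0.0, 2.0]]))
```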

The second step copies the images to the output buffer. Where each image lands is determined by its homography, so no additional parameter is involved in this step.
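Because placement depends only on the homography, the region a warped image covers in the output can be found by mapping its four corners. The sketch below computes that bounding box; `warped_bounds` is a hypothetical helper, it assumes the matrix maps input pixels into the reference frame, and it is not how the stitcher necessarily sizes its output buffer.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def warp_point(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Projective mapping of a single point."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def warped_bounds(h: Matrix, width: int, height: int) -> Tuple[float, float, float, float]:
    """Bounding box (min_x, min_y, max_x, max_y) of a width x height image after warping."""
    corners = [(0, 0), (width, 0), (width, height), (0, height)]
    pts = [warp_point(h, x, y) for x, y in corners]
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    # A pure translation: the image is placed 100 pixels to the right.
    shift_right = [[1.0, 0.0, 100.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(warped_bounds(shift_right, 640, 480))  # (100.0, 0.0, 740.0, 480.0)
```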

Finally, the seam is blended; the blended region is marked in washed-out purple and orange in the image above. Rather than being copied from a single input image, this region is a mix of both overlapping images, and its width is controlled by the border-width parameter. Blending is especially useful when adjacent cameras have different exposures.
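This page does not specify which blending function the stitcher uses, so the sketch below substitutes a generic linear feather over a region `border_width` pixels wide, applied to one row of already-aligned pixels. `feather_blend` is a hypothetical helper; it shows how a wider border turns an abrupt exposure step between two cameras into a gradual ramp.

```python
from typing import List, Sequence


def feather_blend(left: Sequence[float], right: Sequence[float],
                  start: int, border_width: int) -> List[float]:
    """Blend two aligned rows with a linear ramp of `border_width` pixels.

    Columns before `start` come from `left`, columns at or after
    `start + border_width` come from `right`, and the columns in between
    are a weighted mix whose left weight falls linearly from ~1 to ~0.
    """
    if len(left) != len(right):
        raise ValueError("rows must have the same length")
    if border_width < 0:
        raise ValueError("border_width must be non-negative")
    out = []
    for x, (a, b) in enumerate(zip(left, right)):
        if x < start:
            out.append(a)
        elif x >= start + border_width:
            out.append(b)
        else:
            alpha = 1.0 - (x - start + 0.5) / border_width
            out.append(alpha * a + (1.0 - alpha) * b)
    return out


if __name__ == "__main__":
    darker = [100] * 10    # camera with lower exposure
    brighter = [200] * 10  # camera with higher exposure
    print(feather_blend(darker, brighter, start=3, border_width=4))
    # [100, 100, 100, 112.5, 137.5, 162.5, 187.5, 200, 200, 200]
```

With `border_width=0` the function degenerates to a hard seam that jumps from 100 to 200 in a single column.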

Warning: If the homography between the two images is not accurate, or the cameras have significant lens distortion, a border width that is too large can introduce ghosting.
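Ghosting happens because a misaligned feature exists at two slightly different positions in the two warped images, and a wide blend region mixes both copies into the output. The toy example below shows this on a single row using a generic linear feather (an assumption, since the stitcher's blending function is not specified here); `feather_blend` and `visible_copies` are hypothetical helpers.

```python
from typing import List, Sequence


def feather_blend(left: Sequence[float], right: Sequence[float],
                  start: int, border_width: int) -> List[float]:
    """Linear blend of two aligned rows over [start, start + border_width)."""
    out = []
    for x, (a, b) in enumerate(zip(left, right)):
        if x < start:
            out.append(a)
        elif x >= start + border_width:
            out.append(b)
        else:
            alpha = 1.0 - (x - start + 0.5) / border_width
            out.append(alpha * a + (1.0 - alpha) * b)
    return out


def visible_copies(row: Sequence[float], level: float) -> int:
    """Count columns bright enough to show the feature."""
    return sum(1 for v in row if v >= level)


if __name__ == "__main__":
    # A thin bright feature that a slightly wrong homography places at
    # x=3 in the left image and at x=5 in the right image.
    left = [0.0] * 20
    right = [0.0] * 20
    left[3] = 100.0
    right[5] = 100.0
    narrow = feather_blend(left, right, start=10, border_width=2)
    wide = feather_blend(left, right, start=0, border_width=20)
    print(visible_copies(narrow, 10.0))  # 1: only the left copy is kept
    print(visible_copies(wide, 10.0))    # 2: a faint ghost copy appears
```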

Example Pipeline

The following simple pipeline shows how the homography and border-width parameters are set:

```bash
# Example homographies, given as JSON strings with keys h00..h22.
# Replace these values with the homographies estimated for your own cameras.
LC_HOMOGRAPHY="{\
\"h00\": 7.3851e-01, \"h01\": 1.0431e-01, \"h02\": 1.4347e+03, \
\"h10\":-1.0795e-01, \"h11\": 9.8914e-01, \"h12\":-9.3916e+00, \
\"h20\":-2.3449e-04, \"h21\": 3.3206e-05, \"h22\": 1.0000e+00}"

RC_HOMOGRAPHY="{\
\"h00\": 7.3851e-01, \"h01\": 1.0431e-01, \"h02\": 1.4347e+03, \
\"h10\":-1.0795e-01, \"h11\": 9.8914e-01, \"h12\":-9.3916e+00, \
\"h20\":-2.3449e-04, \"h21\": 3.3206e-05, \"h22\": 1.0000e+00}"

# Width of the blended region between adjacent images
BORDER_WIDTH=10

# Three cameras feed sink_0..sink_2; the stitched output is displayed on screen
gst-launch-1.0 -e cudastitcher name=stitcher \
  left-center-homography="$LC_HOMOGRAPHY" \
  right-center-homography="$RC_HOMOGRAPHY" \
  border-width=$BORDER_WIDTH \
  nvarguscamerasrc maxperf=true sensor-id=0 ! nvvidconv ! stitcher.sink_0 \
  nvarguscamerasrc maxperf=true sensor-id=1 ! nvvidconv ! stitcher.sink_1 \
  nvarguscamerasrc maxperf=true sensor-id=2 ! nvvidconv ! stitcher.sink_2 \
  stitcher. ! perf print-arm-load=true ! queue ! nvvidconv ! nvoverlaysink
```
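The same three-camera pipeline can also be assembled from Python, which avoids escaping the JSON homography strings for the shell: each property becomes its own argument, just as the quoted shell variables do. This is a sketch; `build_pipeline` is a hypothetical helper, and it only reproduces the elements and properties of the shell example above.

```python
import shlex
from typing import List

# Example values copied from the shell pipeline; replace them with your own.
LC_HOMOGRAPHY = ('{"h00": 7.3851e-01, "h01": 1.0431e-01, "h02": 1.4347e+03, '
                 '"h10": -1.0795e-01, "h11": 9.8914e-01, "h12": -9.3916e+00, '
                 '"h20": -2.3449e-04, "h21": 3.3206e-05, "h22": 1.0000e+00}')
RC_HOMOGRAPHY = LC_HOMOGRAPHY  # the example uses the same matrix for both


def build_pipeline(lc_homography: str, rc_homography: str,
                   border_width: int = 10) -> List[str]:
    """Return the argv for the three-camera example pipeline."""
    args = ["gst-launch-1.0", "-e", "cudastitcher", "name=stitcher",
            f"left-center-homography={lc_homography}",
            f"right-center-homography={rc_homography}",
            f"border-width={border_width}"]
    # One camera branch per stitcher sink pad, as in the shell example.
    for sensor_id in range(3):
        args += ["nvarguscamerasrc", "maxperf=true", f"sensor-id={sensor_id}",
                 "!", "nvvidconv", "!", f"stitcher.sink_{sensor_id}"]
    # Stitched output: performance report, then display.
    args += ["stitcher.", "!", "perf", "print-arm-load=true", "!", "queue",
             "!", "nvvidconv", "!", "nvoverlaysink"]
    return args


if __name__ == "__main__":
    # Print an equivalent shell-quoted command line.
    print(shlex.join(build_pipeline(LC_HOMOGRAPHY, RC_HOMOGRAPHY)))
```

To launch it on the Jetson, pass the returned list directly to `subprocess.run(args, check=True)`.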

You can find more complex examples here.