Birds Eye View - Introduction - Research


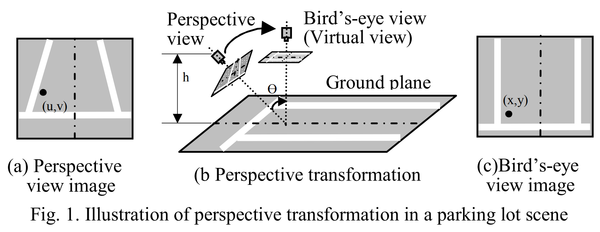

Introduction

A camera mounted on a vehicle generally produces images with a strong perspective effect, as shown in Fig. 1(a). Because of this effect, the driver cannot judge distances correctly, and advanced image processing or analysis also becomes difficult. Consequently, a perspective transformation is necessary, as illustrated in Fig. 1(b): the raw images have to be transformed into a bird's-eye view, as in Fig. 1(c) [2].

To obtain the output bird's-eye view image, a transformation using the following projection matrix can be used. It maps each pixel (u,v) of the input image to a pixel (x,y) of the bird's-eye view image.

[math]\displaystyle{ \begin{bmatrix} x'\\ y'\\ w' \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} & a_{13}\\ a_{21} & a_{22} & a_{23}\\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} u\\ v\\ w \end{bmatrix} \quad \text{where} \quad x = \frac{x'}{w'} \quad \text{and} \quad y = \frac{y'}{w'}. }[/math]

This transformation is usually referred to as Inverse Perspective Mapping (IPM) [3]. IPM takes the frontal view as input, applies a homography, and creates a top-down view of the captured scene by mapping pixels to a 2D frame (the bird's-eye view).
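As an illustration of the mapping above, the following minimal sketch applies a 3x3 projection matrix to one input pixel and performs the division by w'. The function name `apply_homography` and the matrix `H_EXAMPLE` are hypothetical and chosen only for this example; a real matrix would come from calibration of the actual camera setup.

```python
def apply_homography(H, u, v):
    """Map input pixel (u, v) to bird's-eye-view pixel (x, y).

    H is a 3x3 matrix given as nested lists (a11..a33 in the text).
    The input pixel is used in homogeneous form (u, v, 1).
    """
    xp = H[0][0] * u + H[0][1] * v + H[0][2]  # x'
    yp = H[1][0] * u + H[1][1] * v + H[1][2]  # y'
    wp = H[2][0] * u + H[2][1] * v + H[2][2]  # w'
    if abs(wp) < 1e-12:
        raise ValueError("pixel maps to infinity (w' is zero)")
    # Normalize by w' to get back to 2D pixel coordinates
    return xp / wp, yp / wp


# Hypothetical matrix for illustration only (not a calibrated IPM matrix).
# The non-zero a32 entry makes the scale depend on the image row v,
# which is what produces the perspective change.
H_EXAMPLE = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.001, 1.0],
]

if __name__ == "__main__":
    print(apply_homography(H_EXAMPLE, 100.0, 1000.0))
```

With this example matrix, a pixel in row v = 1000 is divided by a w' of about 2, so it lands at roughly half its original coordinates, while pixels in row v = 0 are left unchanged.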

In practice, IPM works well in the immediate proximity of the car, provided the road surface is planar. The geometric properties of distant objects are distorted unnaturally by this non-homogeneous mapping, as shown in the left image of Figure 2. This limits the accuracy of applications and the distance over which they can be applied reliably. The right image can be achieved by applying post-transformation techniques such as the Incremental Spatial Transformer [4]. However, this project uses a basic IPM approach as a demonstration.

Working without post-transformation techniques relies on three assumptions:

- The camera is in a fixed position with respect to the road.

- The road surface is planar.

- The road surface is free of obstacles.

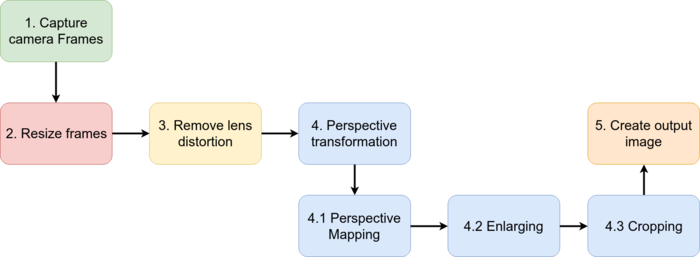

RidgeRun BEV Demo Workflow

The following image shows the basic processing path used by the BEV system.

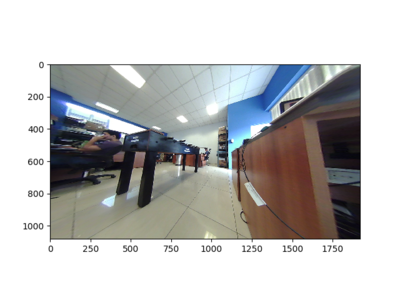

- Capture camera frames: Obtain frames from the video source, here 4 cameras with a 180-degree view angle.

- Resize frames: Resize input frames to desired input size.

- Remove lens distortion: Apply lens undistortion algorithm to remove the fisheye effect.

- Perspective transformation: Apply the IPM to each frame.

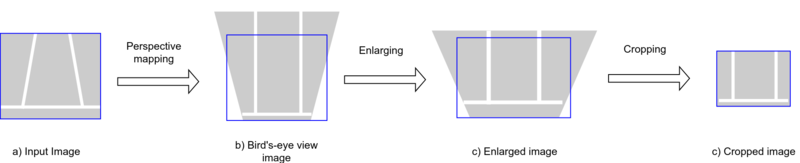

- Perspective mapping: Map the points from the front view image to a 2D top image (BEV)

- Enlarging: Enlarge the image to search for the required region of interest (ROI)

- Cropping: Crop the output of enlargement process to adjust the needed view.

- Create a final top view image

Removing fisheye distortion

Wide-angle cameras are needed to obtain the widest possible field of view and to achieve the bird's-eye view perspective. These cameras usually produce a fisheye image, which needs to be transformed to remove the distortion. Once the camera calibration process is done, two parameters are obtained:

- K: Output 3x3 floating-point camera matrix

[math]\displaystyle{ K= \begin{bmatrix} f_{x} & 0 & c_{x}\\ 0 & f_{y} & c_{y}\\ 0 & 0 & 1 \end{bmatrix} }[/math]

- D: Output vector of distortion coefficients

[math]\displaystyle{ D = [k_{1},k_{2},k_{3},k_{4}] }[/math]

As stated in the OpenCV documentation, let P be a 3D point with coordinates X in the world reference frame (stored in the matrix X). The coordinate vector of P in the camera reference frame is

[math]\displaystyle{ Xc = R X + T }[/math]

where R is the rotation matrix corresponding to the rotation vector om, that is, R = rodrigues(om), and T is the translation vector. Call x, y and z the three coordinates of Xc:

[math]\displaystyle{ x = Xc_{1}, \;\; y = Xc_{2}, \;\; z = Xc_{3} }[/math]
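The change of reference frame above can be sketched in a few lines. The example below is a minimal, standard-library illustration that builds R from the rotation vector om with the Rodrigues rotation formula and then computes Xc = R X + T. The helper names `rodrigues` and `world_to_camera` are hypothetical names for this sketch; in an OpenCV-based pipeline the conversion would normally be done with the library's own Rodrigues function.

```python
import math


def rodrigues(om):
    """Convert a rotation vector (axis * angle, in radians) to a 3x3 matrix."""
    theta = math.sqrt(sum(c * c for c in om))  # rotation angle
    if theta < 1e-12:
        # No rotation: return the identity matrix
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (c / theta for c in om)  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # Rodrigues formula: R = c*I + (1 - c)*k*k^T + s*[k]_x
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def world_to_camera(X, om, T):
    """Return Xc = R X + T for a 3D world point X."""
    R = rodrigues(om)
    return [sum(R[i][j] * X[j] for j in range(3)) + T[i] for i in range(3)]


if __name__ == "__main__":
    # Example values chosen for illustration: 90-degree rotation about z,
    # then a shift of 5 units along the camera z axis.
    x, y, z = world_to_camera([1.0, 0.0, 0.0], [0.0, 0.0, math.pi / 2], [0.0, 0.0, 5.0])
    print(x, y, z)
```

The three returned values are the x, y and z used in the projection step that follows.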

The pinhole projection coordinates of P are [a; b] where

[math]\displaystyle{ a = \frac{x}{z}, \;\; b = \frac{y}{z}, \;\; r^2 = a^2 + b^2, \;\; \theta = \arctan(r) }[/math]

The fisheye distortion is defined by:

[math]\displaystyle{ \theta_{d} = \theta(1+k_{1}\theta^2+k_{2}\theta^4+k_{3}\theta^6+k_{4}\theta^8) }[/math]

The distorted point coordinates are [x'; y'] where

[math]\displaystyle{ x' = (\theta_{d} / r ) a , \;\; y' = (\theta_{d} / r ) b }[/math]
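The projection and distortion steps can be put together as a short sketch. It takes a point already expressed in the camera frame, computes θ as arctan(r) as in the OpenCV fisheye model, applies the polynomial in θ, and returns the distorted coordinates [x'; y']. The function names `fisheye_distort` and `to_pixel` are hypothetical. The final conversion to pixel coordinates with K is not spelled out in the text above and assumes zero skew, so treat it as an assumption of this example.

```python
import math


def fisheye_distort(point_cam, D):
    """Project a camera-frame point (x, y, z) to distorted coords (x', y').

    D holds the four distortion coefficients (k1, k2, k3, k4).
    """
    x, y, z = point_cam
    a, b = x / z, y / z              # pinhole projection
    r = math.hypot(a, b)             # r^2 = a^2 + b^2
    if r < 1e-12:
        return 0.0, 0.0              # point on the optical axis
    theta = math.atan(r)
    k1, k2, k3, k4 = D
    t2 = theta * theta
    theta_d = theta * (1.0 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    scale = theta_d / r
    return scale * a, scale * b


def to_pixel(xd, yd, K):
    """Apply the camera matrix K (assumption: zero skew) to get pixels."""
    fx, cx = K[0][0], K[0][2]
    fy, cy = K[1][1], K[1][2]
    return fx * xd + cx, fy * yd + cy


if __name__ == "__main__":
    # Illustrative values only, not taken from a real calibration
    K = [[300.0, 0.0, 320.0], [0.0, 300.0, 240.0], [0.0, 0.0, 1.0]]
    D = (0.0, 0.0, 0.0, 0.0)
    xd, yd = fisheye_distort((1.0, 0.0, 1.0), D)
    print(to_pixel(xd, yd, K))
```

Removing the fisheye effect amounts to inverting this mapping for every output pixel, which libraries typically do by precomputing a remapping table from K and D.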

Perspective Mapping