Full Body Pose Estimation for Sports Analysis - 3D Pose Estimation

WORK IN PROGRESS. Please contact RidgeRun or email support@ridgerun.com if you have any questions.

This wiki page summarizes the documentation of the 3D pose estimation library, including its system design and usage.


Introduction

The 3D pose estimation library is a Python module that estimates the three-dimensional human pose from camera feeds.

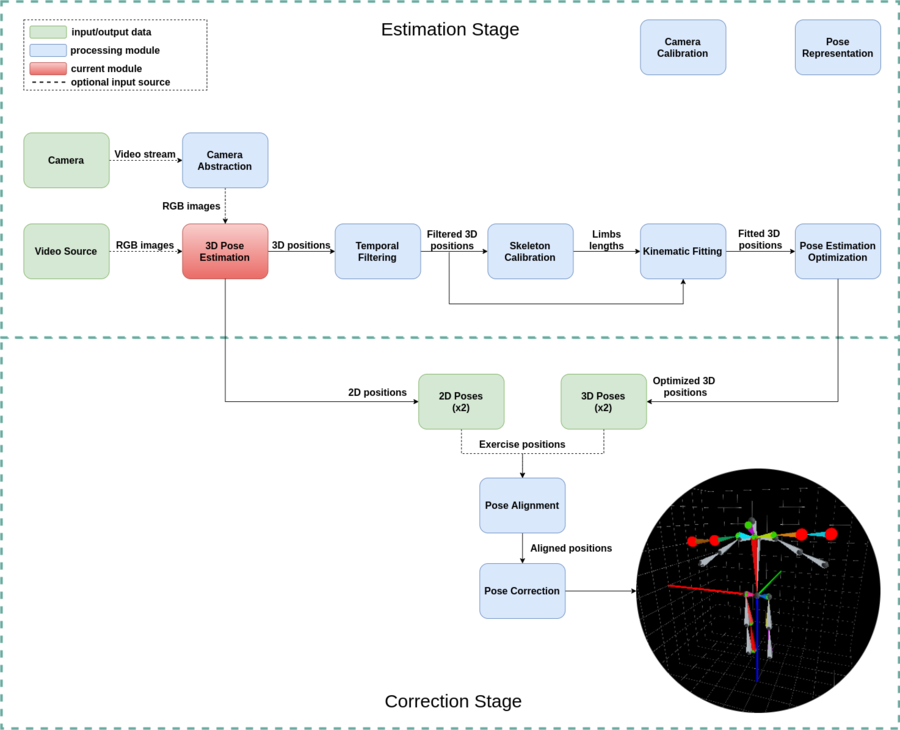

System design

Two different approaches were proposed to solve the multi-camera 3D human pose estimation problem. The first uses a neural network to infer the 2D positions of the body joints in the frames from multiple cameras, and then uses the camera calibration data to triangulate the 3D positions of those joints. The second infers a 3D pose from each camera frame and then averages the resulting poses, weighting each prediction by its confidence score.

Approach 1: 2D pose estimation + triangulation

This approach uses a neural network to predict the 2D position of the human body joints in each of the images captured by the different cameras. Then, using the corresponding camera calibration parameters, the system triangulates the 3D position of each joint in the world coordinate system.
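The geometry behind this step can be written out under the standard pinhole camera model (an assumption here; the page does not state which camera model the library uses). Camera $i$, with intrinsic matrix $K_i$ and extrinsics $R_i$, $\mathbf{t}_i$, projects a world point $\mathbf{X}$ to the pixel $(u_i, v_i)$ up to an unknown scale $\lambda_i$. Eliminating $\lambda_i$ gives two linear equations per camera, and stacking them for every camera that sees the joint yields the linear (DLT) triangulation system, which is solved in the least-squares sense with an SVD:

$$
\lambda_i \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix} = P_i \tilde{\mathbf{X}}, \qquad
P_i = K_i \,[\,R_i \mid \mathbf{t}_i\,], \qquad
\tilde{\mathbf{X}} = \begin{bmatrix} \mathbf{X} \\ 1 \end{bmatrix}
$$

$$
\begin{aligned}
\left(u_i\,\mathbf{p}_i^{3\top} - \mathbf{p}_i^{1\top}\right)\tilde{\mathbf{X}} &= 0 \\
\left(v_i\,\mathbf{p}_i^{3\top} - \mathbf{p}_i^{2\top}\right)\tilde{\mathbf{X}} &= 0
\end{aligned}
\qquad\Longrightarrow\qquad
A\,\tilde{\mathbf{X}} = \mathbf{0}, \qquad
\hat{\tilde{\mathbf{X}}} = \arg\min_{\|\tilde{\mathbf{X}}\| = 1} \left\| A\,\tilde{\mathbf{X}} \right\|
$$

Here $\mathbf{p}_i^{k\top}$ is the $k$-th row of $P_i$, and the 3D joint position is recovered by dividing the first three components of $\hat{\tilde{\mathbf{X}}}$ by the fourth.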

To estimate the 2D positions of the body joints in the input camera frames, ILovePose's implementation of Fast Human Pose Estimation was used. After the 2D pose estimation library processes the input images, the estimated 2D joint positions are triangulated by the 3D reconstruction module, which was developed using tools provided by OpenCV.
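The triangulation step can be illustrated with the linear Direct Linear Transform (DLT) method. This is a minimal NumPy sketch under stated assumptions, not the library's code: the actual 3D reconstruction module is built with OpenCV tools and may use a different method and interface. The helper names `projection_matrix`, `triangulate_joint` and `project` are hypothetical, the 2D points are assumed to be undistorted pixel coordinates, and the camera parameters in the example are made up.

```python
import numpy as np


def projection_matrix(K, R, t):
    """Return the 3x4 projection matrix P = K [R | t] of one camera."""
    t = np.asarray(t, dtype=float).reshape(3, 1)
    return K @ np.hstack([R, t])


def triangulate_joint(proj_mats, points_2d):
    """Triangulate one joint from its 2D detections in two or more views (DLT).

    proj_mats: list of 3x4 projection matrices, one per camera.
    points_2d: list of (u, v) undistorted pixel coordinates of the same joint.
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Two linear constraints per view, obtained by eliminating the scale.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X_h = vt[-1]
    return X_h[:3] / X_h[3]


def project(P, X):
    """Project a 3D point with P and return its pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Hypothetical two-camera setup: shared intrinsics, second camera rotated 30 degrees.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
theta = np.deg2rad(30.0)
R2 = np.array([[np.cos(theta), 0.0, np.sin(theta)],
               [0.0, 1.0, 0.0],
               [-np.sin(theta), 0.0, np.cos(theta)]])
P1 = projection_matrix(K, np.eye(3), [0.0, 0.0, 0.0])
P2 = projection_matrix(K, R2, [-1.0, 0.0, 0.2])

X_true = np.array([0.1, -0.2, 3.0])  # synthetic joint position
X_est = triangulate_joint([P1, P2], [project(P1, X_true), project(P2, X_true)])
```

With noise-free synthetic detections, `X_est` matches `X_true`; with real network detections the result is only a least-squares estimate. For reference, OpenCV's `cv2.triangulatePoints` performs a comparable linear triangulation for a pair of views.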

Approach 2: 3D pose estimation + averaging

The second approach uses a neural network to predict the 3D pose directly from each camera frame. This 3D pose estimator uses the Real-time 3D Multi-person Pose Estimation implementation by Daniil-Osokin to infer the 3D pose from each input camera frame. Each estimated pose is then transformed from its camera's coordinate system into the world coordinate system, that is, the coordinate system of the camera specified as the origin. To perform this transformation, the system uses the rotation and translation vectors computed during the multi-camera system calibration. In addition, a classic point set registration method is used to obtain the best transformation between the poses predicted from the different frames, which is then used to refine the transformations between the cameras' coordinate systems.
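The change of coordinate system can be sketched as follows. The sketch assumes that the calibration provides, for each camera i, an axis-angle rotation vector and a translation vector that map origin-camera coordinates to camera-i coordinates, `X_i = R X_0 + t` (the convention OpenCV's `stereoCalibrate` uses for its `R` and `T` outputs). The inverse mapping `X_0 = R^T (X_i - t)` then brings a pose predicted by camera i into the origin frame. The function names and example values are hypothetical; in practice `cv2.Rodrigues` performs the same rotation-vector conversion.

```python
import numpy as np


def rodrigues(rvec):
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    # Skew-symmetric cross-product matrix of the unit rotation axis.
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def to_origin_frame(joints_cam, rvec, tvec):
    """Map an (N, 3) array of joints from camera i to the origin camera frame.

    Assumes X_i = R X_0 + t, hence X_0 = R^T (X_i - t).
    """
    R = rodrigues(rvec)
    t = np.asarray(tvec, dtype=float).reshape(1, 3)
    # Row-vector form of R^T (X - t), applied to every joint at once.
    return (np.asarray(joints_cam, dtype=float) - t) @ R


# Hypothetical calibration: camera i rotated 90 degrees about Y and offset.
rvec = [0.0, np.deg2rad(90.0), 0.0]
tvec = [0.5, 0.0, 1.0]
R = rodrigues(rvec)

joints_origin = np.array([[0.0, 0.0, 2.0], [0.1, -0.3, 2.2]])
joints_cam = joints_origin @ R.T + np.array(tvec)  # the pose as camera i sees it
recovered = to_origin_frame(joints_cam, rvec, tvec)
```

Applying the forward mapping and then `to_origin_frame` returns the original joints, which is a quick way to check that the calibration convention has been interpreted in the right direction.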

The pose returned by the system is computed by averaging the 3D joint positions estimated from each frame. Each joint position is weighted in the average by its confidence score, as provided by the network.
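The confidence-weighted average can be sketched with NumPy. The array layout (cameras × joints × 3) and the function name `weighted_average_pose` are assumptions for illustration, and the poses are assumed to be already expressed in the origin camera's coordinate system. Each joint is averaged independently; joints that no camera detected with nonzero confidence are returned as NaN rather than as a misleading zero position.

```python
import numpy as np


def weighted_average_pose(poses, confidences):
    """Fuse per-camera 3D poses into a single pose.

    poses:       (C, J, 3) joint positions from C cameras, in the origin frame.
    confidences: (C, J) per-joint confidence scores from the network.
    """
    poses = np.asarray(poses, dtype=float)
    w = np.asarray(confidences, dtype=float)[..., np.newaxis]  # (C, J, 1)
    total = w.sum(axis=0)                                      # (J, 1)
    safe_total = np.where(total > 0, total, 1.0)               # avoid 0 / 0
    fused = (w * poses).sum(axis=0) / safe_total               # (J, 3)
    # Joints without any confident detection are marked as missing.
    fused[total[:, 0] <= 0] = np.nan
    return fused


# Hypothetical example: 2 cameras, 3 joints.
poses = [
    [[0.0, 0.0, 2.0], [1.0, 1.0, 1.0], [5.0, 5.0, 5.0]],  # camera 0
    [[0.0, 0.0, 4.0], [3.0, 3.0, 3.0], [6.0, 6.0, 6.0]],  # camera 1
]
confidences = [
    [0.75, 1.0, 0.0],  # camera 0
    [0.25, 0.0, 0.0],  # camera 1
]
fused = weighted_average_pose(poses, confidences)
# fused[0] -> [0.0, 0.0, 2.5], fused[1] -> [1.0, 1.0, 1.0], fused[2] -> [nan, nan, nan]
```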

Besides estimating the 3D pose, the system also helps to correct the transformations between the other cameras' coordinate systems and the origin camera's coordinate system. To achieve this correction, the system uses a point set registration method to compute the optimal transformation that aligns the inferred poses, and then updates the rotation and translation parameters using a low-pass filter.
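The page does not name the registration method or the filter, so the following sketch assumes the Kabsch algorithm (SVD-based least-squares rigid alignment) for registration and a first-order exponential low-pass filter for the update. It keeps the convention `X_i = R X_0 + t` for camera i's extrinsics and estimates `R` and `t` from the pose predicted by the origin camera (already in origin coordinates) and the pose predicted by camera i (in its own coordinates). It then blends that measurement into the current parameters. Blending rotation matrices and projecting the result back onto a valid rotation is a simple approximation suited to small corrections; all names and values are hypothetical.

```python
import numpy as np


def kabsch(src, dst):
    """Return (R, t) minimizing sum_k ||R @ src[k] + t - dst[k]||^2 (Kabsch algorithm)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # d = -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def project_to_rotation(M):
    """Return the proper rotation matrix closest to M in the Frobenius norm."""
    U, _, Vt = np.linalg.svd(M)
    d = np.sign(np.linalg.det(U @ Vt))
    return U @ np.diag([1.0, 1.0, d]) @ Vt


def low_pass_update(R_old, t_old, R_meas, t_meas, alpha=0.1):
    """First-order low-pass update of the extrinsics toward a new measurement."""
    R = project_to_rotation((1.0 - alpha) * R_old + alpha * R_meas)
    t = (1.0 - alpha) * np.asarray(t_old) + alpha * np.asarray(t_meas)
    return R, t


# Hypothetical data: a 17-joint pose from the origin camera and the same pose
# as predicted by camera i, related by a known 10-degree rotation and offset.
rng = np.random.default_rng(0)
pose_origin = rng.normal(size=(17, 3))
a = np.deg2rad(10.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a), np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.3, -0.1, 0.05])
pose_cam_i = pose_origin @ R_true.T + t_true  # X_i = R X_0 + t, row-wise

R_meas, t_meas = kabsch(pose_origin, pose_cam_i)
# Starting from identity extrinsics, move 20% of the way toward the measurement.
R_f, t_f = low_pass_update(np.eye(3), np.zeros(3), R_meas, t_meas, alpha=0.2)
```

Smaller `alpha` values keep the extrinsics stable against noisy single-frame predictions, at the cost of slower correction when the calibration is actually off.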