Full Body Pose Estimation for Sports Analysis - About

WORK IN PROGRESS. Please contact RidgeRun or email support@ridgerun.com if you have any questions.

Introduction

The full-body pose estimation for sports analysis library is a set of Python modules that carry out the complete pose estimation and pose correction process: camera calibration, video capture, pose estimation, estimation refinement, pose alignment, and pose correction. The main objective of the library is to serve as a starting point for human motion analysis in sports. However, most of the modules are designed so that they can be reused in other applications.

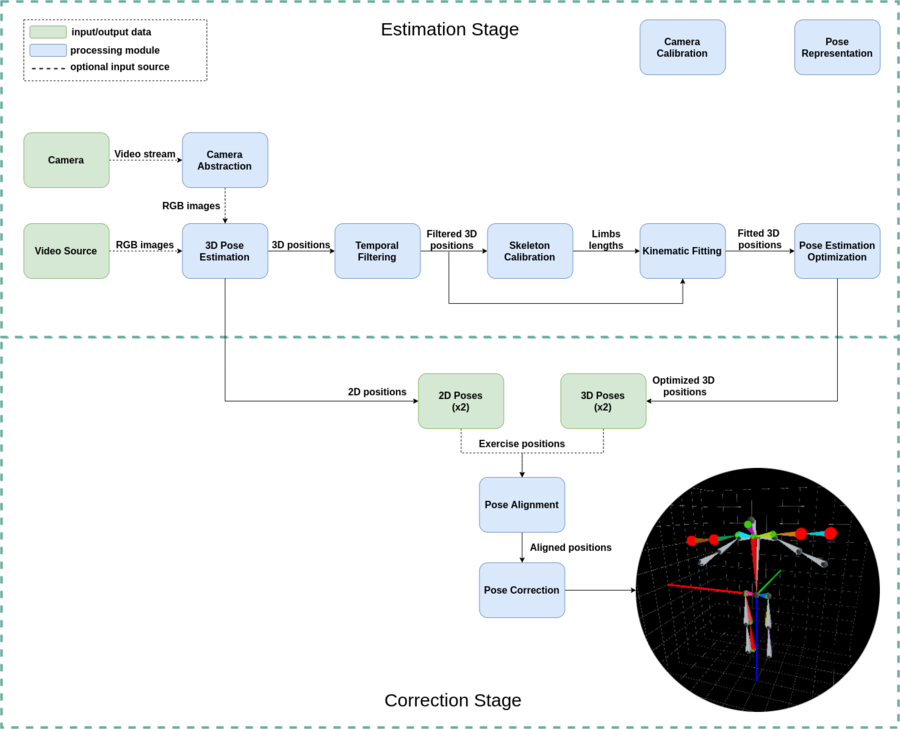

A general view of the whole system can be seen in Figure 1.

Workflow Description

The diagram shows a flow between some of the modules. This flow is the workflow of the functional units of the pose estimation process. The modules in the corner are not part of the general workflow, but they play an important role in the whole process. The role of each module is described briefly below:

The camera interface module provides an interface for instantiating and using different camera types. It is related to the frame capture stage of the workflow, since it provides the camera configuration for the type of camera in use. It is one of the modules that can be reused in other applications that require camera interaction.
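As a rough illustration of an interface for instantiating different camera types, the sketch below uses an abstract base class and a small registry. All names here (`Camera`, `DummyCamera`, `CAMERA_TYPES`, `create_camera`) are hypothetical and are not taken from the library itself.

```python
from abc import ABC, abstractmethod


class Camera(ABC):
    """Hypothetical common interface shared by every camera type."""

    def __init__(self, width, height, fps):
        self.width = width
        self.height = height
        self.fps = fps

    @abstractmethod
    def read_frame(self):
        """Return one frame as a list of rows of pixel values."""


class DummyCamera(Camera):
    """Stand-in camera that produces black frames (illustration only)."""

    def read_frame(self):
        return [[0] * self.width for _ in range(self.height)]


# Registry mapping a camera type name to its class (names are assumptions).
CAMERA_TYPES = {"dummy": DummyCamera}


def create_camera(camera_type, **config):
    """Instantiate a camera by type name, forwarding its configuration."""
    try:
        camera_class = CAMERA_TYPES[camera_type]
    except KeyError:
        raise ValueError(f"Unknown camera type: {camera_type!r}") from None
    return camera_class(**config)


camera = create_camera("dummy", width=4, height=2, fps=30)
frame = camera.read_frame()
```

With this kind of design, the capture code only depends on `read_frame()`, so supporting a new camera type would mean adding one class and one registry entry.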

The camera calibration module provides a way to relate a camera frame to what is actually being captured in the real world. A camera is considered calibrated when its calibration parameters are known, so the camera calibration process is the procedure that determines those parameters. It is also related to the frame capture stage of the workflow, since calibration should be done before the recording stage starts in order to obtain accurate pose predictions. This module is also reusable in any other application that involves camera interaction.
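To make the link between a frame and the real world concrete, the following minimal sketch projects a 3D point to pixel coordinates with an ideal pinhole model. It assumes the calibration parameters are the focal lengths (`fx`, `fy`) and the principal point (`cx`, `cy`), and it ignores lens distortion and camera pose. The function name and parameter values are illustrative, not part of the library.

```python
def project_point(point_3d, fx, fy, cx, cy):
    """Project a 3D point given in camera coordinates to pixel coordinates."""
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("Point must be in front of the camera (z > 0)")
    # Perspective division followed by scaling and shifting to pixels.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# A point 2 m in front of the camera, slightly right of and above the axis.
pixel = project_point((0.5, -0.25, 2.0), fx=800.0, fy=800.0, cx=320.0, cy=240.0)
print(pixel)  # (520.0, 140.0)
```

Calibration is the inverse problem: estimating parameters such as `fx`, `fy`, `cx` and `cy` from images of a known pattern.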

The pose estimation module estimates the three-dimensional human pose from the input frames using a deep neural network. Its output may include some noise due to the network's accuracy or the recording process. To reduce that noise, the output is passed to the following modules for refinement. The deep neural network is already trained on human poses, which limits this module to human skeletons. However, it can be reused in other applications related to human pose estimation.

In the filter module, the sequence of frames is smoothed with an adaptive low-pass filter in order to reduce the constant jumping of the joint positions caused by the limited accuracy of the previous stage. This module can also be reused in other applications to filter the 3D positions of any object, since it is not tied to skeletons.
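The text does not specify which adaptive filter is used, so the following is only a sketch of the general idea: exponential smoothing whose blending factor grows with the size of the movement, so that small jitter is damped while large, real motions are followed quickly. The class name and its parameters are assumptions made for illustration.

```python
import math


class AdaptiveLowPassFilter:
    """Illustrative adaptive exponential smoothing for one 3D position."""

    def __init__(self, min_alpha=0.1, max_alpha=0.9, speed_scale=1.0):
        self.min_alpha = min_alpha      # smoothing used for tiny movements
        self.max_alpha = max_alpha      # smoothing limit for large movements
        self.speed_scale = speed_scale  # displacement that counts as "large"
        self.previous = None

    def update(self, position):
        if self.previous is None:
            # First sample: nothing to smooth against yet.
            self.previous = tuple(position)
            return self.previous
        distance = math.dist(position, self.previous)
        # Map the displacement to [0, 1): 0 for no motion, near 1 for big jumps.
        weight = distance / (distance + self.speed_scale)
        alpha = self.min_alpha + (self.max_alpha - self.min_alpha) * weight
        self.previous = tuple(
            alpha * new + (1.0 - alpha) * old
            for new, old in zip(position, self.previous)
        )
        return self.previous


# A joint that is standing still but is measured with small jitter.
joint_filter = AdaptiveLowPassFilter(speed_scale=0.5)
noisy = [(0.0, 0.0, 0.0), (0.02, -0.01, 0.0), (-0.01, 0.02, 0.01)]
smoothed = [joint_filter.update(p) for p in noisy]
```

One filter instance per joint would be needed, since each instance keeps the previous position of a single point. Nothing in the sketch is specific to skeletons, which matches the reuse described above.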

The pose representation module is also outside the general workflow, but it is just as important. It models the movement of any type of skeleton using a relative representation. With this relative representation, it is possible to adjust the predicted pose to a fixed skeleton using rotation angles. This module is necessary for the skeleton calibration and the kinematic fitting, since both need to know which type of skeleton they are working with. The pose representation module was designed to support any type of skeleton, not only human ones, which makes it highly flexible for use in other applications.
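One simple way to picture a relative representation is to store, for each joint, the direction from its parent joint instead of an absolute position. The sketch below uses unit direction vectors rather than the rotation angles mentioned above, which is a simplification, and the three-joint chain and function names are hypothetical.

```python
import math

# Hypothetical three-joint chain: each joint maps to its parent (None = root).
# Parents are listed before their children.
PARENTS = {"hip": None, "knee": "hip", "ankle": "knee"}


def to_relative(positions):
    """Return (unit direction from parent, limb length) for each non-root joint."""
    relative = {}
    for joint, parent in PARENTS.items():
        if parent is None:
            continue
        offset = [c - p for c, p in zip(positions[joint], positions[parent])]
        length = math.sqrt(sum(v * v for v in offset))  # assumed non-zero
        relative[joint] = ([v / length for v in offset], length)
    return relative


def to_absolute(root_position, relative, limb_lengths):
    """Rebuild absolute positions, keeping directions but using fixed lengths."""
    positions = {}
    for joint, parent in PARENTS.items():
        if parent is None:
            positions[joint] = list(root_position)
            continue
        direction, _ = relative[joint]
        positions[joint] = [
            p + limb_lengths[joint] * d
            for p, d in zip(positions[parent], direction)
        ]
    return positions


# Predicted pose with both limbs measured as 0.45 m long.
predicted = {"hip": (0.0, 1.0, 0.0), "knee": (0.0, 0.55, 0.0), "ankle": (0.0, 0.1, 0.0)}
relative = to_relative(predicted)
# Re-pose a fixed skeleton whose limbs are 0.5 m and 0.4 m long.
fitted = to_absolute(predicted["hip"], relative, {"knee": 0.5, "ankle": 0.4})
```

Because the skeleton is just a parent map, the same functions would work for any skeleton type, not only human ones.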

The skeleton calibration module obtains the limb lengths of the skeleton whose pose is going to be estimated. This is necessary in order to apply the kinematic fitting to a calibrated skeleton, which adjusts the pose prediction to the subject's body and ignores any noise related to variations in limb length. The process is meant to run during the first iterations of the workflow, until the skeleton is considered calibrated. Once it finishes, it outputs the skeleton measurements to the kinematic fitting stage and stops calibrating, so in the following iterations the flow goes directly from the filter to the kinematic fitting. This module is also reusable with any other type of skeleton, and it is meant to be used together with the pose representation module.
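The calibration behaviour described above can be sketched as follows. The criterion used here (a fixed number of frames, with the median length per limb) is an assumption, since the text does not state when the skeleton is considered calibrated. The class and limb names are hypothetical.

```python
import math
from statistics import median


class SkeletonCalibrator:
    """Illustrative limb-length calibration over the first frames."""

    def __init__(self, limbs, required_frames=30):
        self.limbs = limbs                      # limb name -> (joint_a, joint_b)
        self.required_frames = required_frames  # assumed stopping criterion
        self.samples = {limb: [] for limb in limbs}
        self.frames_seen = 0

    @property
    def calibrated(self):
        return self.frames_seen >= self.required_frames

    def add_frame(self, positions):
        """Record limb lengths for one frame; ignored once calibrated."""
        if self.calibrated:
            return
        for limb, (joint_a, joint_b) in self.limbs.items():
            length = math.dist(positions[joint_a], positions[joint_b])
            self.samples[limb].append(length)
        self.frames_seen += 1

    def limb_lengths(self):
        """Return the median length of each limb (robust to outliers)."""
        if not self.calibrated:
            raise RuntimeError("Skeleton is not calibrated yet")
        return {limb: median(values) for limb, values in self.samples.items()}


calibrator = SkeletonCalibrator({"shin": ("knee", "ankle")}, required_frames=3)
for ankle_height in (0.10, 0.08, 0.09, 0.50):  # the last frame is ignored
    calibrator.add_frame({"knee": (0.0, 0.5, 0.0), "ankle": (0.0, ankle_height, 0.0)})
lengths = calibrator.limb_lengths()
```

After the third frame the calibrator stops accumulating samples, which mirrors the flow going directly from the filter to the kinematic fitting in later iterations.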

The kinematic fitting module receives the filtered 3D positions from the pose estimator and adjusts them to the user's limb lengths while respecting the anatomical constraints of the human body. This is done through a deep network model that applies kinematic chains, which model the skeleton pose with three-dimensional rotations and translations. The output of this module is the pose with all the refinements applied, whose angles can be transferred to any other human skeleton. Since this module uses a deep network model, it is bound to human skeletons. To address this, the model training steps are also provided so that, along with the pose representation module, it can be reused with any other type of skeleton.

The pose optimization module minimizes the re-projection error, given the camera calibration parameters. It consumes the average 3D pose from a multi-view pose estimation system, as well as the 2D predictions with their respective confidence scores. Then, using a gradient-based optimization method implemented as a computational graph, the module not only minimizes the re-projection error but also ensures that the pose is viable given the capabilities of the human body.
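The quantity being minimized can be illustrated with a small sketch of a confidence-weighted re-projection error. This only evaluates the objective and does not perform the optimization or apply the body constraints. The camera model (a pinhole camera that is translated but not rotated), the dictionary layout, and the function names are simplifying assumptions, not the library's API.

```python
def project(point, camera):
    """Pinhole projection for a camera that is translated but not rotated."""
    x, y, z = (p - t for p, t in zip(point, camera["position"]))
    focal = camera["focal"]
    cx, cy = camera["center"]
    return focal * x / z + cx, focal * y / z + cy


def reprojection_error(pose_3d, detections, cameras):
    """Confidence-weighted sum of squared pixel distances over all views."""
    total = 0.0
    for camera, view in zip(cameras, detections):
        for joint, (u, v, confidence) in view.items():
            projected_u, projected_v = project(pose_3d[joint], camera)
            total += confidence * ((projected_u - u) ** 2 + (projected_v - v) ** 2)
    return total


cameras = [
    {"position": (0.0, 0.0, -2.0), "focal": 500.0, "center": (320.0, 240.0)},
    {"position": (1.0, 0.0, -2.0), "focal": 500.0, "center": (320.0, 240.0)},
]
pose_3d = {"wrist": (0.2, 0.1, 0.0)}
# 2D predictions as (u, v, confidence); the first view is off by (3, -4) pixels.
detections = [
    {"wrist": (373.0, 261.0, 0.5)},
    {"wrist": (120.0, 265.0, 1.0)},
]
error = reprojection_error(pose_3d, detections, cameras)
```

A gradient-based optimizer would adjust `pose_3d` to reduce this value. Weighting by confidence lets unreliable 2D detections pull on the 3D pose less than reliable ones.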

The pose alignment module belongs to the correction stage of the workflow and aligns two videos of two different people performing the same exercise. This step requires defining which person performs the exercise correctly (the trainer) and which person needs the corrections (the trainee). The alignment is done by applying temporal and spatial alignment techniques in order to map the movements of the trainer to those of the trainee. This module can work with both 2D and 3D positions, and it performs a different preprocessing step for each of them. The outputs of this module are the aligned videos, which serve as the starting point for the comparison in the pose correction process.
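The text does not name the temporal alignment technique. Dynamic time warping (DTW) is one common choice, and the sketch below applies it to a single per-frame feature (for example, a joint angle) to show how frames from a trainer and a trainee moving at different speeds can be paired. Using DTW, and the function name, are assumptions for illustration.

```python
def dtw_path(trainer, trainee):
    """Return (total cost, list of (trainer_frame, trainee_frame) pairs)."""
    n, m = len(trainer), len(trainee)
    inf = float("inf")
    # cost[i][j]: best cost of aligning the first i and first j frames.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            distance = abs(trainer[i - 1] - trainee[j - 1])
            cost[i][j] = distance + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    # Walk back from the end, always taking the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# The trainee performs the same movement, but more slowly in two places.
trainer_angle = [0, 1, 2, 1, 0]
trainee_angle = [0, 0, 1, 2, 2, 1, 0]
total_cost, pairs = dtw_path(trainer_angle, trainee_angle)
```

Each pair in `pairs` matches a trainer frame with a trainee frame, which gives the frame correspondence needed before the movements can be compared.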

The final module of the workflow is pose correction, where the errors in the movement of the aligned videos are determined and then processed to generate corrections that help the user improve. This step provides the correction in 3D space, so it applies mapping techniques to obtain a 3D representation from a 2D input. Then, it computes the distance between the movements of both subjects and determines whether the movement needs to be corrected. When corrections are required, it shows a 3D representation of the movement and the specific joints that need to be improved.
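The comparison step can be sketched as a per-joint distance check on one pair of aligned frames. The Euclidean distance, the threshold value (assumed to be in meters), and the function name are illustrative assumptions. The text does not state which distance or decision rule the module uses.

```python
import math


def joints_to_correct(trainer_pose, trainee_pose, threshold=0.1):
    """Return the joints whose 3D distance to the trainer exceeds the threshold."""
    errors = {
        joint: math.dist(trainer_pose[joint], trainee_pose[joint])
        for joint in trainer_pose
    }
    return {joint: error for joint, error in errors.items() if error > threshold}


trainer_pose = {"elbow": (0.30, 1.20, 0.0), "wrist": (0.50, 1.40, 0.0)}
trainee_pose = {"elbow": (0.32, 1.21, 0.0), "wrist": (0.50, 1.10, 0.1)}
corrections = joints_to_correct(trainer_pose, trainee_pose)
```

Here only the wrist exceeds the threshold, so it would be the joint highlighted for the user. An empty result would mean that no correction is required for that frame.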