Full Body Pose Estimation for Sports Analysis - Pose Alignment

WORK IN PROGRESS. Please contact RidgeRun or email support@ridgerun.com if you have any questions.

Introduction

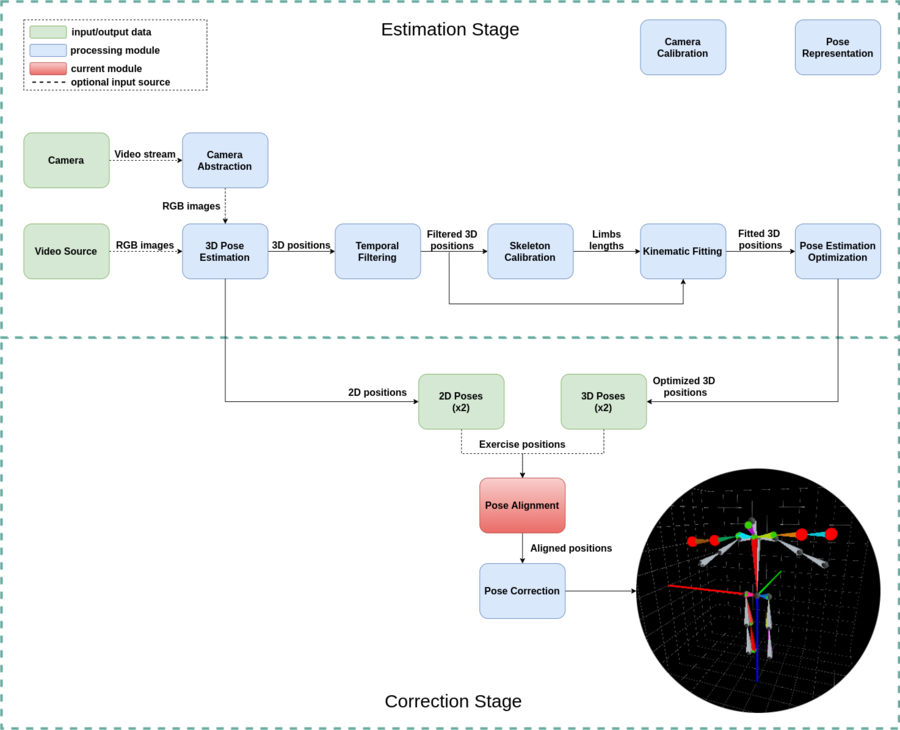

The pose alignment module receives the 2D or 3D joint positions produced by the pose estimation of two videos, each showing a different person performing the same type of exercise. One of them is assumed to perform the exercise correctly (the trainer), while the other performs the same exercise but might require corrections (the trainee). The module aligns the trainer and trainee movements in order to decide which corrections the trainee needs to make to perform the exercise correctly.

How it Works

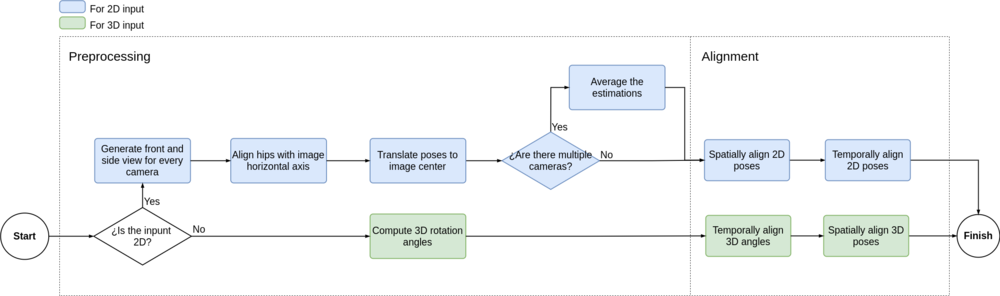

This module works with both 2D and 3D poses, and each of them requires a different kind of processing. The steps are divided into two main stages: the preprocessing stage and the alignment stage. These stages and their processing steps are shown in the flow diagram.

Preprocessing

This stage prepares the pose data, according to its dimensions, to be aligned in the next stage. It is applied in the same way to both the trainer and trainee videos. The following subsections explain how every step shown in the flow diagram works.

2D: Front and Side Views Generation

The front and side views of the performed movements are used to recover the extra perspective, or depth, that 2D images lack. To obtain these views we use the 2D Motion Retargeting model, which combines the motion, skeleton and viewpoint of three different videos into one sequence that contains the motion of the person in the first video, the skeleton dimensions of the person in the second one and the viewpoint of the third one. The front and side views are generated by combining the trainer motion and the trainer skeleton with the viewpoint of a predetermined video of a person standing in a front or a side position with respect to the camera, since we only want to modify the viewpoint and not the movements or the body. The same process is then applied to the trainee videos. This step produces a front and a side view for both the trainer and the trainee, and the remaining alignment steps are performed on these views. The following GIF shows a representation of these views.

2D: Hips Alignment with Horizontal Image Axis

As part of the normalization steps, we align the 2D vector that goes from the left hip to the right hip with the X axis, in order to correct diagonal camera orientations in the recorded videos. This is done by computing the angle between the hips vector and the X axis and then rotating all the joint positions by that angle so that the hips vector becomes horizontal.
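The rotation described above can be sketched in plain Python as follows. This is only an illustration: the function name `align_hips_with_x_axis`, the joint indices and the choice of rotating about the midpoint of the hips are assumptions, since the text does not specify the pivot used by the module.

```python
import math

def align_hips_with_x_axis(joints, left_hip, right_hip):
    """Rotate 2D joints so the left-hip -> right-hip vector is parallel to +X.

    joints: list of (x, y) tuples; left_hip / right_hip: indices into joints.
    The pivot (midpoint of the hips) is an assumption made for this sketch.
    """
    lx, ly = joints[left_hip]
    rx, ry = joints[right_hip]
    # Angle between the hips vector and the X axis.
    angle = math.atan2(ry - ly, rx - lx)
    # Rotating by -angle brings the hips vector onto the X axis.
    cos_a, sin_a = math.cos(-angle), math.sin(-angle)
    cx, cy = (lx + rx) / 2.0, (ly + ry) / 2.0
    rotated = []
    for x, y in joints:
        dx, dy = x - cx, y - cy
        rotated.append((cx + dx * cos_a - dy * sin_a,
                        cy + dx * sin_a + dy * cos_a))
    return rotated
```

After the call, both hips share the same Y coordinate while the distances between joints are unchanged, because a rotation is a rigid transformation.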

2D: Translation of Poses to the Image Center

The other normalization step moves the skeleton to the center of the image. We translate the skeleton pelvis from its position in the image to the center, which in our case is (320, 240) since our images are 640x480, and then apply the same translation to the other joints so that their distances to the pelvis are preserved.
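A minimal sketch of this translation, assuming poses are stored as lists of (x, y) tuples. The function name `center_on_pelvis` and the `pelvis` index parameter are hypothetical; only the (320, 240) center comes from the text.

```python
IMAGE_CENTER = (320.0, 240.0)  # center of a 640x480 image, as stated in the text

def center_on_pelvis(joints, pelvis, center=IMAGE_CENTER):
    """Translate all joints so that the pelvis lands on the image center.

    joints: list of (x, y) tuples; pelvis: index of the pelvis joint.
    """
    px, py = joints[pelvis]
    # Offset that moves the pelvis onto the center.
    dx, dy = center[0] - px, center[1] - py
    # Applying the same offset to every joint keeps all relative distances.
    return [(x + dx, y + dy) for x, y in joints]
```

For example, a pelvis at (100, 50) with another joint at (110, 70) becomes a pelvis at (320, 240) with that joint at (330, 260).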

2D: Average of the Estimation

Since we allow videos recorded with multiple synchronized cameras, we need a way to reduce the estimations from all of them into one. To do this we average the joint positions in the same frame across every front view, and likewise across every side view, assuming that we have a front and a side view for every camera used to record the same video. This step is only performed when multiple cameras were used to record a video.
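The averaging can be illustrated with the sketch below. It assumes that all cameras produce the same number of frames and joints (the cameras are synchronized) and that the same view, front or side, is passed for every camera. The function name `average_views` and the nested-list layout are assumptions made for this example.

```python
def average_views(estimations):
    """Average joint positions frame by frame across cameras.

    estimations: one sequence per camera; each sequence is a list of frames
    and each frame is a list of (x, y) joint positions.
    """
    n_cams = len(estimations)
    averaged = []
    for f in range(len(estimations[0])):
        frame = []
        for j in range(len(estimations[0][f])):
            # Mean of the same joint in the same frame over all cameras.
            x = sum(cam[f][j][0] for cam in estimations) / n_cams
            y = sum(cam[f][j][1] for cam in estimations) / n_cams
            frame.append((x, y))
        averaged.append(frame)
    return averaged
```

The function would be called once with the front views of all cameras and once with the side views.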

3D: Rotation Angles Computing

This is the only step required when the inputs have 3 dimensions, since the pose estimator already ensures that they are normalized. In this case all joint positions are relative to the pelvis, because it is the origin of the coordinate system. However, since the coordinates depend on the skeleton dimensions, we prefer to use the angles that represent the pose for the alignment process. To obtain these angles for every pose we use the kinematic fitting module, which maps a coordinate representation to an angle representation.

Alignment

The second stage of this module is the alignment itself, which is divided into temporal and spatial alignment. This stage receives as input the preprocessed trainer and trainee videos. The original trainer input data is no longer used after this stage, since only its movements are kept, transferred to the trainee body. From now on we refer to the trainee body with the trainer movements as traineeT.

Both 2D and 3D data require temporal and spatial alignment, but they differ in the order of these steps, as shown in the flow diagram. This difference is due to the spatial alignment approach used for each one, which is explained in the next subsections.

2D: Spatial Alignment

In both cases the spatial alignment is required in order to compare the trainee and traineeT movements. For the 2D case, the spatial alignment is done before the temporal alignment because the 2D Motion Retargeting model already takes some temporal conditions into account internally. This is the same model used for the front and side views generation, but in this case we generate the traineeT by combining the front view trainer motion with the front view trainee skeleton and the front viewpoint, and likewise for the side views.

2D: Temporal Alignment

After the spatial alignment we have to temporally align the original trainee sequence with the traineeT sequence, in order to fix any timing differences that the spatial alignment may have left, even though it also considers temporal conditions. For the temporal alignment we use the FastDTW library, which implements an approximate version of the Dynamic Time Warping (DTW) algorithm in Python. The algorithm builds a cost matrix in order to find the mapping between frames that has the lowest total cost. This is an NxM matrix, where N and M are the numbers of frames of the two sequences to be aligned, and the value of each cell is given by the cost function. For the 2D case we use the cost function:

[math]\displaystyle{ C(x,y) = \frac{1}{15}\sum_{m=0}^{14}{||x_m - y_m||}\;\;\;\;\;\;\;\;\;(1) }[/math]

where x and y are the poses for the trainee and the traineeT respectively and m is the joint number.
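To make cost function (1) and the cost matrix concrete, the sketch below implements a plain, exact DTW in pure Python. It is not the FastDTW library used by the module (FastDTW approximates the same alignment more efficiently); it only illustrates the idea. The names `pose_cost` and `dtw_path` are hypothetical, and poses are assumed to be lists of (x, y) tuples.

```python
import math

def pose_cost(x, y):
    """Equation (1): mean Euclidean distance between corresponding joints.

    With 15 joints per pose, len(x) equals the 15 used in the equation.
    """
    return sum(math.hypot(a[0] - b[0], a[1] - b[1])
               for a, b in zip(x, y)) / len(x)

def dtw_path(seq_a, seq_b, cost=pose_cost):
    """Exact DTW: return (total_cost, path) with path as (i, j) frame pairs."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # acc[i][j] is the lowest accumulated cost of aligning the first i frames
    # of seq_a with the first j frames of seq_b.
    acc = [[inf] * (m + 1) for _ in range(n + 1)]
    acc[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c = cost(seq_a[i - 1], seq_b[j - 1])
            acc[i][j] = c + min(acc[i - 1][j], acc[i][j - 1], acc[i - 1][j - 1])
    # Backtrack from the last cell to recover the frame mapping.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((acc[i - 1][j - 1], i - 1, j - 1),
                      (acc[i - 1][j], i - 1, j),
                      (acc[i][j - 1], i, j - 1))
    path.reverse()
    return acc[n][m], path
```

Each `(i, j)` pair in the returned path maps frame `i` of the first sequence to frame `j` of the second one, so a frame can appear more than once when one sequence is slower than the other.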

The temporally aligned front and side view sequences have the same number of frames, so it is enough to do the temporal alignment of the front view to obtain the mapping of the side one too. Once this process is finished, we have the alignment between the trainee and traineeT videos to be compared in the pose correction stage.

3D: Temporal Alignment

In the 3D case the inputs are the rotation angles of the trainer and the trainee. Since we are working with angles, the cost function of the Dynamic Time Warping algorithm requires an additional step. Due to the periodic nature of angles, 0 and 360 degrees represent the same orientation; however, a plain numeric subtraction yields a difference of 360 degrees, which would distort the cost value. To avoid that, we modify the cost function (1) as follows:

[math]\displaystyle{ C(x,y) = \frac{1}{15}\sum_{m=0}^{14}{||\mathrm{min}\,\theta_m||}\;\;\;\;\;\;\;\;\;(2) }[/math]

where:

[math]\displaystyle{ \mathrm{min}\,\theta_m = ((x_m - y_m + 180) \bmod 360) - 180 }[/math]

This ensures that we always compute the smallest difference between the angles x and y. Once we have the mapping between the sequences, we can perform the spatial alignment.
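The wrapped difference and cost function (2) can be sketched as follows. For simplicity the sketch assumes one angle in degrees per joint, and the function names `min_angle_diff` and `angle_pose_cost` are hypothetical. It relies on Python's `%` operator returning a non-negative result for a positive modulus; in languages where the remainder keeps the sign of the dividend, the expression would need adjusting.

```python
def min_angle_diff(x_deg, y_deg):
    """Signed smallest difference between two angles, in [-180, 180) degrees."""
    return ((x_deg - y_deg + 180.0) % 360.0) - 180.0

def angle_pose_cost(x, y):
    """Equation (2): mean absolute wrapped difference over all joints.

    x, y: lists with one angle in degrees per joint (an assumption here).
    """
    return sum(abs(min_angle_diff(a, b)) for a, b in zip(x, y)) / len(x)
```

For instance, `min_angle_diff(0, 360)` gives 0 instead of -360, and the difference between 350 and 10 degrees is -20 rather than 340.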

3D: Spatial Alignment

In the 3D case we use a different approach for the spatial alignment: we use our Pose Representation module to generate the traineeT sequence by transferring the angles of the trainer movements to a skeleton that has the measurements of the trainee. This is a naive approach, because it assumes that the differences in skeleton measurements between subjects are small enough for the same angles to produce almost the same coordinates for each joint in both bodies. This angle transfer requires the movements to be temporally aligned already, which is why the order of the temporal and spatial alignments is inverted for the 3D case. Once the process is done, we obtain the coordinates of the traineeT sequence, which are compared with the trainee in the next pose correction module.
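The idea of transferring angles onto a skeleton with different measurements can be illustrated with a simplified planar kinematic chain. This is not the Pose Representation module, which works with 3D rotations on a full skeleton; the function `chain_positions` and the two-bone example are hypothetical and only show how the same angles combined with different bone lengths produce different joint coordinates.

```python
import math

def chain_positions(angles_deg, bone_lengths, root=(0.0, 0.0)):
    """Joint positions of a planar chain of bones.

    angles_deg: rotation of each bone relative to the previous one, in degrees.
    bone_lengths: length of each bone (the skeleton measurements).
    """
    x, y = root
    heading = 0.0
    positions = [root]
    for angle, length in zip(angles_deg, bone_lengths):
        # Accumulate the relative rotation, then advance along the bone.
        heading += math.radians(angle)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions

# Trainer angles applied to trainee bone lengths give a traineeT-like pose.
trainer_angles = [90.0, -90.0]
trainee_bones = [1.0, 2.0]
trainee_t_pose = chain_positions(trainer_angles, trainee_bones)
```

The closer the bone lengths of both subjects are, the closer the resulting coordinates are to those of the trainer, which is the assumption behind the naive approach.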