Getting started with TI Jacinto 7 Edge AI - Demos - C++ Demos - Semantic Segmentation

RidgeRun documentation is currently under development.


Semantic segmentation demo

Requirements

- A sample video in the J7's /opt/edge_ai_apps/data/videos/ directory.

Run the semantic segmentation demo

- Navigate to the C++ apps directory:

cd /opt/edge_ai_apps/apps_cpp

- Create a directory to store the output files:

mkdir out

- Run the demo:

./bin/Release/app_semantic_segmentation -m ../models/segmentation/TFL-SS-254-deeplabv3-mobv2-ade20k-512x512 -i ../data/videos/Cats.mp4 -o ./out/sem_%d.jpg

The video name under /opt/edge_ai_apps/data/videos/ might be different; change it if necessary.

- The demo will start running. The terminal output should look similar to the following:


Figure 1. Terminal output.

- After all the video frames have been processed, navigate to the out directory:

cd out

The directory should contain several images named sem_<number>.jpg, produced by the semantic segmentation model.

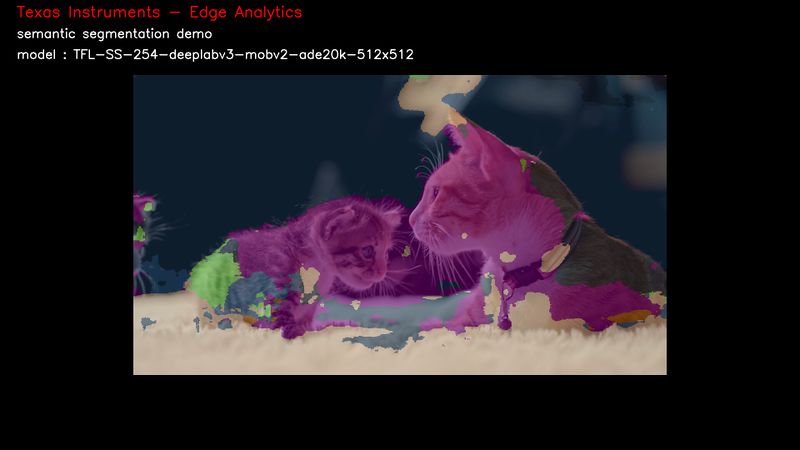

- Figure 2 shows an example of how these images should look like:

Figure 2. Semantic segmentation output example.
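The output names come from an unpadded %d pattern. A plain alphabetical listing, such as the default output of ls, therefore typically places sem_10.jpg before sem_2.jpg. If you post-process the results, the following C++17 sketch can extract the frame index from each name and order the files numerically. The helper names frameIndex, sortByFrameIndex and listFileNames are hypothetical and are not part of edge_ai_apps. The sketch assumes the sem_<number>.jpg naming used in this example.

```cpp
#include <algorithm>
#include <cctype>
#include <filesystem>
#include <optional>
#include <string>
#include <vector>

// Hypothetical helper: returns N for a file name of the form "sem_N.jpg",
// or std::nullopt if the name does not match that pattern.
std::optional<long long> frameIndex(const std::string& name) {
    const std::string prefix = "sem_";
    const std::string suffix = ".jpg";
    if (name.size() <= prefix.size() + suffix.size()) return std::nullopt;
    if (name.compare(0, prefix.size(), prefix) != 0) return std::nullopt;
    if (name.compare(name.size() - suffix.size(), suffix.size(), suffix) != 0) return std::nullopt;
    const std::string digits =
        name.substr(prefix.size(), name.size() - prefix.size() - suffix.size());
    // Reject non-numeric middles and values too long to fit in a long long.
    if (digits.size() > 18) return std::nullopt;
    if (!std::all_of(digits.begin(), digits.end(),
                     [](unsigned char c) { return std::isdigit(c) != 0; }))
        return std::nullopt;
    return std::stoll(digits);
}

// Drops names that are not "sem_N.jpg" and sorts the rest by N, not alphabetically.
std::vector<std::string> sortByFrameIndex(std::vector<std::string> names) {
    names.erase(std::remove_if(names.begin(), names.end(),
                               [](const std::string& n) { return !frameIndex(n).has_value(); }),
                names.end());
    std::sort(names.begin(), names.end(), [](const std::string& a, const std::string& b) {
        return *frameIndex(a) < *frameIndex(b);
    });
    return names;
}

// Collects the names of the regular files in a directory such as "out".
std::vector<std::string> listFileNames(const std::filesystem::path& dir) {
    std::vector<std::string> names;
    for (const auto& entry : std::filesystem::directory_iterator(dir))
        if (entry.is_regular_file()) names.push_back(entry.path().filename().string());
    return names;
}
```

Call sortByFrameIndex(listFileNames("out")) from /opt/edge_ai_apps/apps_cpp to get the segmentation results in frame order.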

Multiple input and output configurations are available. This example uses a video file as input and a sequence of images as output.

For more information, refer to the Configuration arguments section below.
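In this example, the output argument ./out/sem_%d.jpg is a printf-style pattern. Each output file name is that pattern formatted with a frame number. The numbering starts at the -u/--index value, which defaults to 0, and increases by one per file. The C++17 sketch below illustrates that expansion.

The function expandPattern is hypothetical and is not taken from the edge_ai_apps sources. The sketch assumes the pattern contains exactly one integer conversion such as %d or %02d, and no other % characters.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>
#include <vector>

// Hypothetical helper (not part of edge_ai_apps): expands a printf-style
// pattern such as "./out/sem_%d.jpg" into `count` file names numbered from
// `startIndex`, the role played by -u/--index.
// Assumes the pattern holds exactly one int conversion (%d, %02d, ...);
// any other conversion would make snprintf's behavior undefined.
std::vector<std::string> expandPattern(const std::string& pattern, int startIndex, int count) {
    std::vector<std::string> names;
    for (int i = 0; i < count; ++i) {
        const int index = startIndex + i;
        // A first call with a null buffer returns the length the text needs.
        const int len = std::snprintf(nullptr, 0, pattern.c_str(), index);
        if (len < 0) break;  // formatting error
        std::vector<char> buf(static_cast<std::size_t>(len) + 1);
        std::snprintf(buf.data(), buf.size(), pattern.c_str(), index);
        names.emplace_back(buf.data(), static_cast<std::size_t>(len));
    }
    return names;
}
```

For example, expandPattern("./out/sem_%d.jpg", 0, 3) yields ./out/sem_0.jpg, ./out/sem_1.jpg and ./out/sem_2.jpg. A zero-padded pattern such as img_%02d.jpg produces names like img_05.jpg instead.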

Configuration arguments

-h, --help

Show this help message and exit

-m MODEL, --model MODEL

Path to the model directory (required)

ex: ./bin/Release/app_semantic_segmentation --model ../models/segmentation/$(model_dir)

-i INPUT, --input INPUT

Source for the GStreamer pipeline: camera or file

ex: --input v4l2 - for camera

--input ./images/img_%02d.jpg - for images

printf-style formatting is used to get the file names

--input ./video/in.avi - for video input

default: v4l2

-o OUTPUT, --output OUTPUT

Sink for the GStreamer pipeline: display or file

ex: --output kmssink - for display

--output ./output/out_%02d.jpg - for images

--output ./output/out.avi - for video output

default: kmssink

-d DEVICE, --device DEVICE

Device name for camera input

default: /dev/video2

-c CONNECTOR, --connector CONNECTOR

Connector ID used to select the output display

default: 39

-u INDEX, --index INDEX

Start index for multiple-file input and output

default: 0

-f FPS, --fps FPS

Framerate of the GStreamer pipeline for image input

default: 1 for display and video output, 12 for image output

-n, --no-curses

Disable the curses report

default: Disabled
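The --input and --output examples above follow three patterns:

- v4l2 or kmssink selects the camera or the display.
- A numbered printf-style pattern selects an image sequence.
- Any other path selects a video file.

The -f/--fps default then depends on the output kind. The C++17 sketch below encodes those rules as stated in the help text. The enums and functions are hypothetical, and the string heuristics are an assumption for illustration, not the app's actual argument-parsing code.

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical types and helpers for illustration; they are not taken from
// the edge_ai_apps sources.
enum class InputKind { Camera, ImageSequence, VideoFile };
enum class OutputKind { Display, ImageSequence, VideoFile };

// True if `s` contains a printf-style integer conversion such as "%d" or "%02d".
bool hasIntConversion(const std::string& s) {
    for (std::size_t pct = s.find('%'); pct != std::string::npos; pct = s.find('%', pct + 1)) {
        std::size_t i = pct + 1;
        // Skip an optional zero flag and width, e.g. the "02" in "%02d".
        while (i < s.size() && std::isdigit(static_cast<unsigned char>(s[i]))) ++i;
        if (i < s.size() && s[i] == 'd') return true;
    }
    return false;
}

// Mirrors the --input examples: "v4l2" is the camera, a numbered pattern is
// an image sequence, anything else is treated as a video file.
InputKind classifyInput(const std::string& input) {
    if (input == "v4l2") return InputKind::Camera;
    if (hasIntConversion(input)) return InputKind::ImageSequence;
    return InputKind::VideoFile;
}

// Mirrors the --output examples: "kmssink" is the display, a numbered
// pattern is an image sequence, anything else is treated as a video file.
OutputKind classifyOutput(const std::string& output) {
    if (output == "kmssink") return OutputKind::Display;
    if (hasIntConversion(output)) return OutputKind::ImageSequence;
    return OutputKind::VideoFile;
}

// Framerate for image input. A positive requested value overrides the
// default from the help text: 1 for display and video output, 12 for image output.
int effectiveFps(OutputKind output, int requestedFps = 0) {
    if (requestedFps > 0) return requestedFps;
    return output == OutputKind::ImageSequence ? 12 : 1;
}
```

With the command used in this guide, classifyInput("../data/videos/Cats.mp4") returns InputKind::VideoFile and classifyOutput("./out/sem_%d.jpg") returns OutputKind::ImageSequence.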